The AI Trade Isn't Over

Why I continue to buy AsteraLabs and why efficiency will be the next source of returns.

This is going to be a long write-up detailing why I believe there is more to come in the AI trade and why I remain positive despite the market’s negativity, even in the medium term.

Here’s today’s roadmap.

The AI bear thesis, with the systemic risks and actual concerns.

Oracle’s aggressive gamble & CapEx, and its concentration risk.

The counter-data: Broadcom’s massive quarter.

AI is a real and transformative technology and its actual limitations.

AsteraLabs, the company resolving those bottlenecks.

Execution is all that matters to restore optimism.

This is the kind of content I intend to offer regularly & is why I wish to refocus myself: to offer this kind of piece, with a clear update on a specific company and its sector, and share with you real, actionable and valuable information.

The conclusion of this write-up was already shared in my December investing plan: AI isn’t going to disappear but its source of returns will change, from volume to specifics. The next winners are those who will bring efficiency and leverage AI into real products.

Let’s get started.

The AI Bear Thesis

The market is scared of two things:

That the current spending doesn’t translate into cash generation fast enough to justify its pace and repay borrowings.

That part of the commitments which were priced in with optimism end up not being paid for and potentially get cancelled in the next quarters or years.

The market priced in continuation with optimism and is now pricing in execution risks, with reason to some extent.

The situation today remains that demand outpaces supply, and that demand growth outpaces supply growth. Meeting this massive demand requires infrastructure, and as demand grows so fast, companies accelerate their spending to meet it in a race to win market share. The market loved that, but now asks questions as valuations reached a point where questions should be asked.

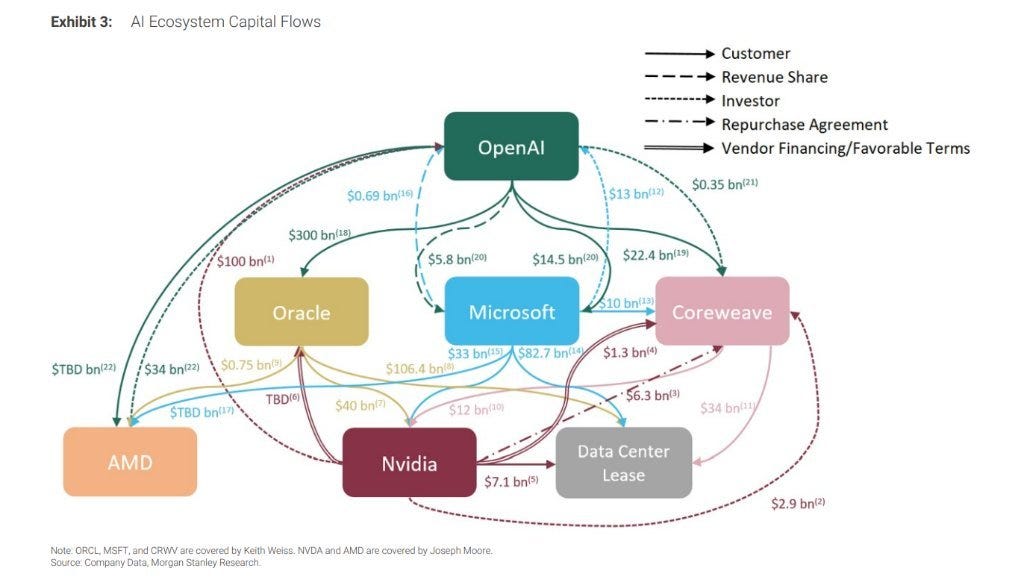

The biggest global risk comes from OpenAI and its $1.4T of commitments by 2030, against $20B of ARR at the end of 2025. Those aren’t only to be paid in cash; part can be paid in compute or services. But the question remains: will they be able to pay for it? Will they grow fast enough and generate enough cash to do so?

If not, then a big part of the current demand for infra, external compute, hardware, etc., won’t materialize, and the premium given to most companies won’t be deserved as future growth will disappear.

Truth is: no one knows what will happen.

OpenAI could grow and generate enough cash once they reach enough compute to satisfy a massive demand that can continue to grow steadily for decades. An IPO can help. So can new partnerships. We saw Disney invest in them last week, which means some companies outside of the AI bubble are starting to take an interest. Many possibilities. But there is also a world where they end up insolvent and their massive commitments disappear, triggering a chain reaction. The market is now pricing this systemic risk.

If OpenAI fails, the entire market follows.

And while the biggest risk does come from OpenAI, the skepticism expanded to the entire sector and to companies without any ties to OpenAI. All companies involved in AI compute are tied to the potential overbuilding of capacities. It is just less systemic than OpenAI. If Meta overbuilds, their problem will be having spent too much of their cash and potentially having to pay some debt, but they’ll still have a profitable core business behind them and be able to pay for their commitments.

If OpenAI fails, commitments disappear - for them but also for all the companies they promised purchases to. This is what the market is most afraid of, although it certainly won’t be happy if Meta spends money for no returns.

Company Specific Risks

The market is now pretty pessimistic, and even if nothing backs up failure in terms of raw data, the market is looking for proof everywhere - or any other issues within the industry.

We talked about Nvidia’s inventory and payment delays a few weeks ago. The market interprets the data as Nvidia manufacturing GPUs without demand, meaning some customers either cancelled or didn’t pay for them. I believe it comes from longer lead times caused by more complex hardware than before - packaged and customized.

Another example is Meta adopting Google’s TPUs. The market interpreted this as a sign that Nvidia’s hardware would no longer be the best, which spiked fears. The truth is, Meta needs so much compute that it goes to get it everywhere it can.

There surely are more, but I won’t go through them all as I want to talk about the current news: Oracle & Broadcom.

Huang’s Law

Before that, a few words on compute’s progress as an introduction for later subjects.

Moore’s law dates back to 1965. In its commonly cited form, it states that the number of transistors that fit on a chip of comparable dimensions doubles every two years, and therefore that total compute per unit of space doubles over the same period. This is a brief overview of Moore’s law, a prediction which ended up being pretty accurate for decades, until recently.

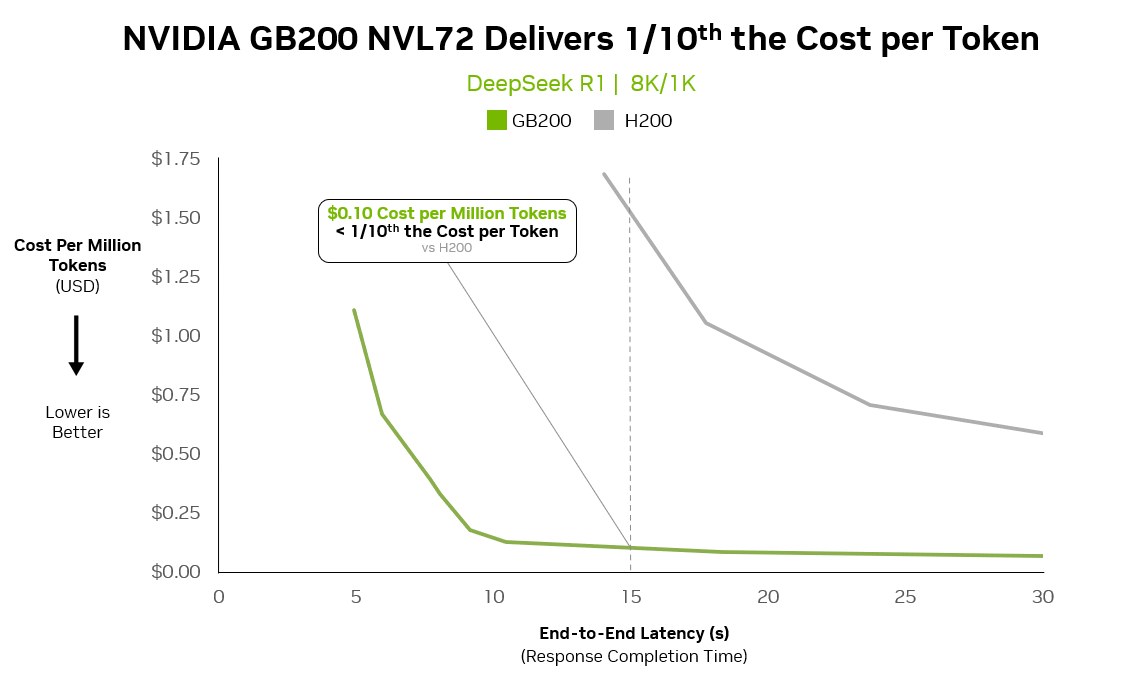

Moore’s law was about physical improvements, but what some call Huang’s law, from Nvidia’s CEO, focuses on raw compute capacity, which is also expected to double every two years - or even faster, for AI workloads. This is done not only through more transistors or better hardware but the upgrade of the entire AI stack: hardware, yes, but also software and networking.

And it has been true so far, with Nvidia’s latest hardware delivering 1/10th of the cost per token compared to its previous generation.

Cost per token is the better unit nowadays and can be translated as compute per unit of energy, which is what the cost really is: energy consumption. And this brings me to two key points for this write-up.

This can be seen as bearish for compute providers, as it means that in the future the same hardware stack will be able to deliver better performance while consuming less space and energy. Meaning existing datacenters could potentially house enough hardware, with enough energy, to power future compute needs.

It isn’t the case today, but it could be tomorrow, and that potentially means current CapEx might be overblown.

I think this is a bit stupid, as it means we should ignore today’s demand and wait for a better stack - which can hardly be developed without iterations - only to size future needs properly, which can’t be done. But this was shared left & right so I wanted to talk about it.

This is bullish for the last portion of this write-up about AsteraLabs. The key to this improved hardware and efficiency lies in improved components and faster interconnections, which is AsteraLabs’ business. Compute per unit of energy is what every provider is looking to optimize, so demand for those hardware components is, and will remain, through the roof.

Oracle’s Gamble

As detailed above, what matters nowadays are the risks. And when it comes to Oracle last quarter, the risks were present.

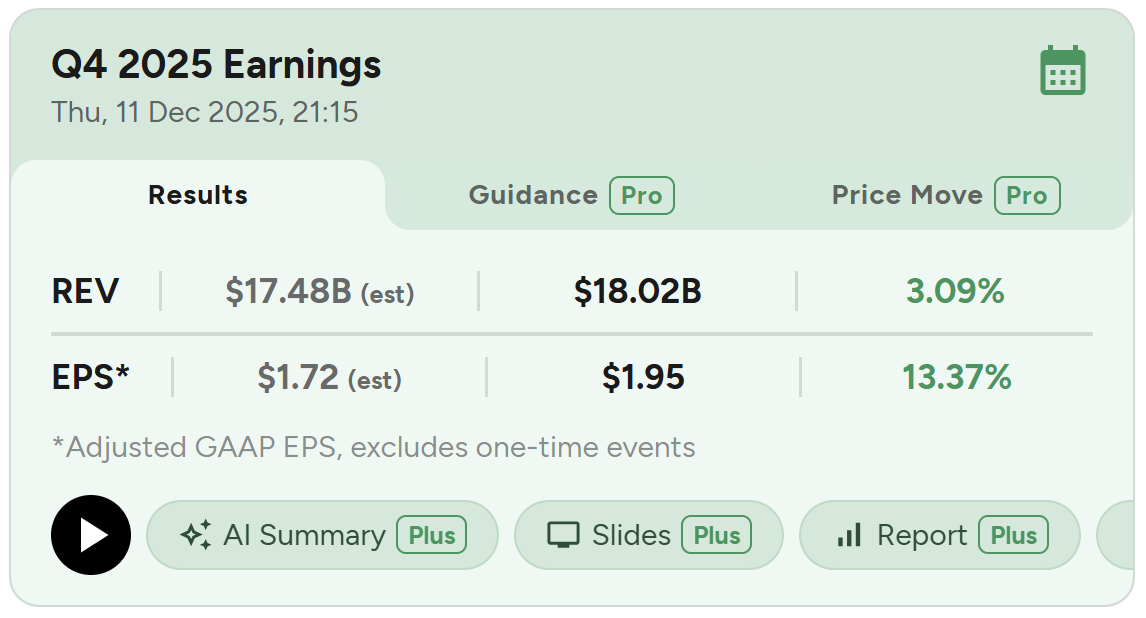

The first was minor: Oracle missed analysts’ revenue estimates, reporting $16.1B compared to $16.2B expected, a 0.62% miss. This wasn’t a big deal, but it didn’t help, as the conclusion was either that their compute pricing is low, or that they didn’t deliver what they were supposed to.

The main issue was the CapEx guidance raise from $30B to $50B. This increase just before year-end was the biggest catalyst. The bear narrative is crystal clear from here: overspending and execution risk, amplified by an article about Oracle’s profitability a few weeks ago - which was factually wrong - and many more since.

Why such aggressive CapEx?

To answer a massive and rapidly growing demand as soon as possible.

To gain market share and secure a leading position in AI compute demand.

Because AI is the future and it is impossible to deny this from a business perspective.

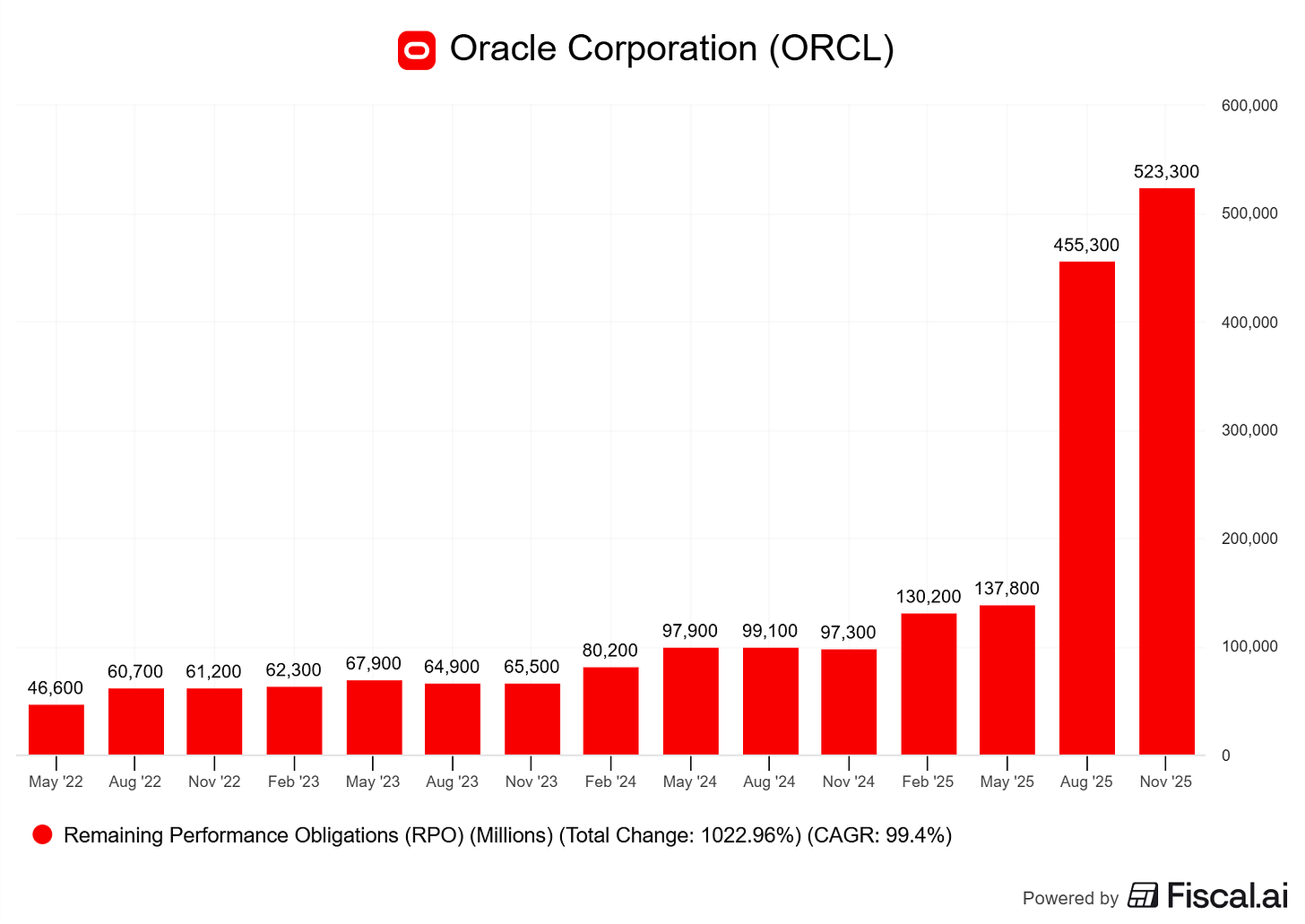

Remaining Performance Obligations increased by $68B in Q2, up 15% sequentially to $523B highlighted by new commitments from Meta, NVIDIA, and others.
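As a quick sanity check on those figures, the sequential growth rate can be recomputed from the two numbers quoted above. This is only a minimal sketch: the function name is hypothetical, and the inputs are simply the $68B increase and the $523B total from the quote.

```python
def sequential_growth(increase: float, new_total: float) -> float:
    """Growth rate implied by an absolute increase and the resulting total."""
    previous_total = new_total - increase  # balance at the end of the prior quarter
    return increase / previous_total


# Figures in $B, taken from the quote above.
rpo_growth = sequential_growth(increase=68.0, new_total=523.0)
print(f"Previous RPO: ${523.0 - 68.0:.0f}B, sequential growth: {rpo_growth:.1%}")
```

The implied prior-quarter balance is $455B and the growth rate comes out just under 15%, consistent with the rounded figure in the quote.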

If you were a healthy company, generating cash, with a sticky business model on your database services, why wouldn’t you want to expand? Why say no to customers asking you - begging you, really - to sell them compute because they desperately need it and cannot find it anywhere?

And so Oracle did. But providing compute comes at a cost: walls, energy, hardware… Infrastructure. All of that is great, especially as this demand comes from giants like Nvidia and Meta. But Oracle has another issue here: its biggest client’s name, for $300B of compute, starts with Open and finishes with two letters: A and I.

The market is afraid those commitments never translate into revenues. Others would be under scrutiny for comparable financial situations - as we’ll see later - but Oracle is under the spotlight more than others because of this client.

There was an article last week on Bloomberg about an OpenAI-dedicated data center being delayed. The reasons were a lack of labor and material, but the market happily interpreted this delay as “OpenAI cannot pay, so they start to push back commitments.”

The article was crystal clear that it was an issue of logistics. Management commented later that there were no delays in their short/medium-term buildouts impacting their delivery timelines or financial results. So the delay could exist on longer projects, but it’d be immaterial for Oracle and not the representation of any financial constraints.

There have been no delays to sites required to meet our contractual commitments, and all milestones remain on track. We remain fully aligned with OpenAI and confident in our ability to execute against both our contractual commitments and future expansion plans.

But as usual lately, what matters is the narrative, which remains that OpenAI cannot be trusted & that relying on them for future revenues will end up in broken promises and empty datacenters, resulting in wasted CapEx and struggling financial positions. Once the article was out, the fire was set.

Am I certain this won’t be the truth? No. But I believe we’re very far from it as of today.

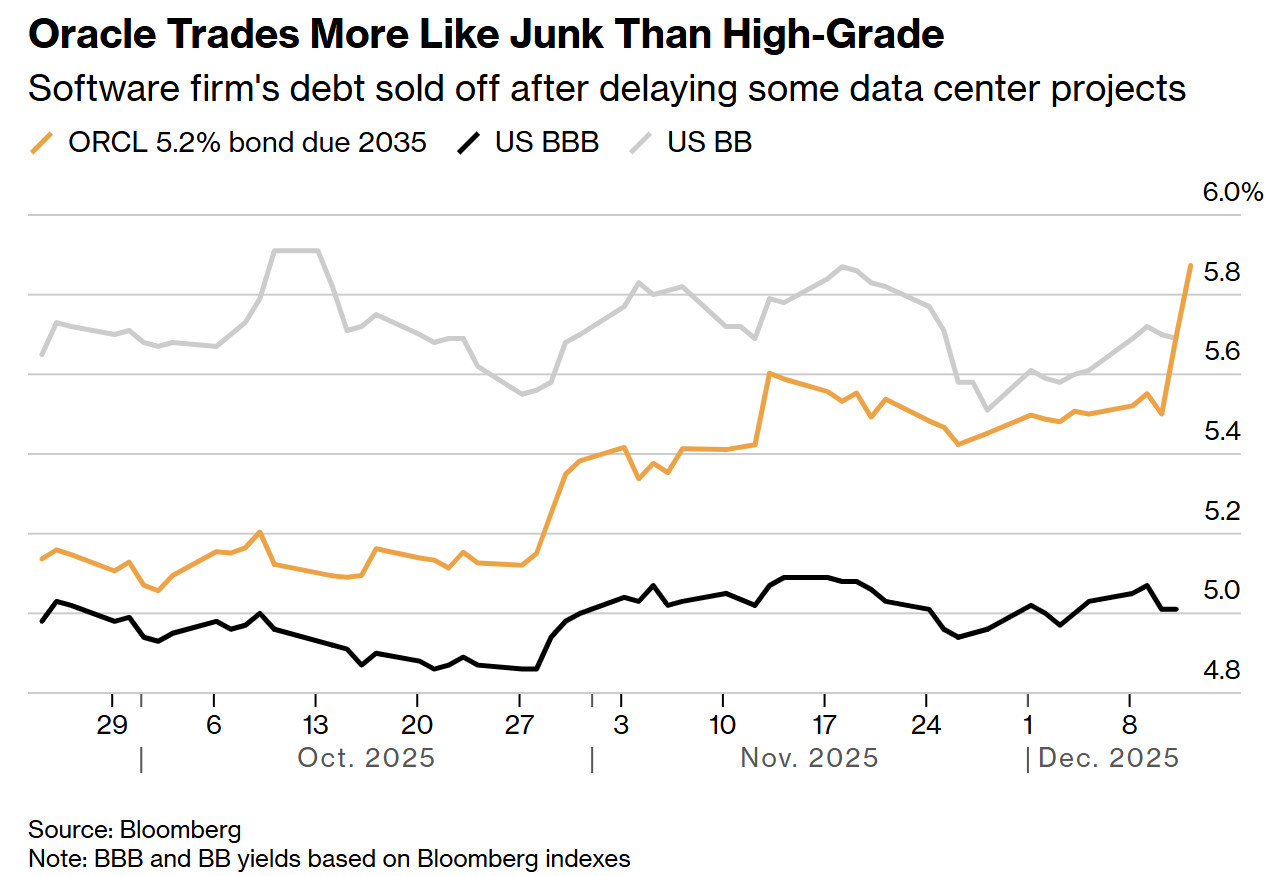

And negativity starts to show in the markets, not only the stock market but all markets as the trust in Oracle and comparable companies is fading.

Yields on Oracle’s bonds - a source of financing which Oracle repays with interest - rose after the new CapEx guidance, and had been rising even before that, as lenders ask for more compensation to risk their cash on Oracle.

Credit Default Swaps have also risen, which means investors are protecting themselves against a potential default. The street is really worried about Oracle’s situation.

Lenders hedging does not mean a crash will come - correlation isn’t causation. This paragraph is meant to detail the current situation with clear data. The concerns are real, and they materialize in the markets. Lenders are prudent.

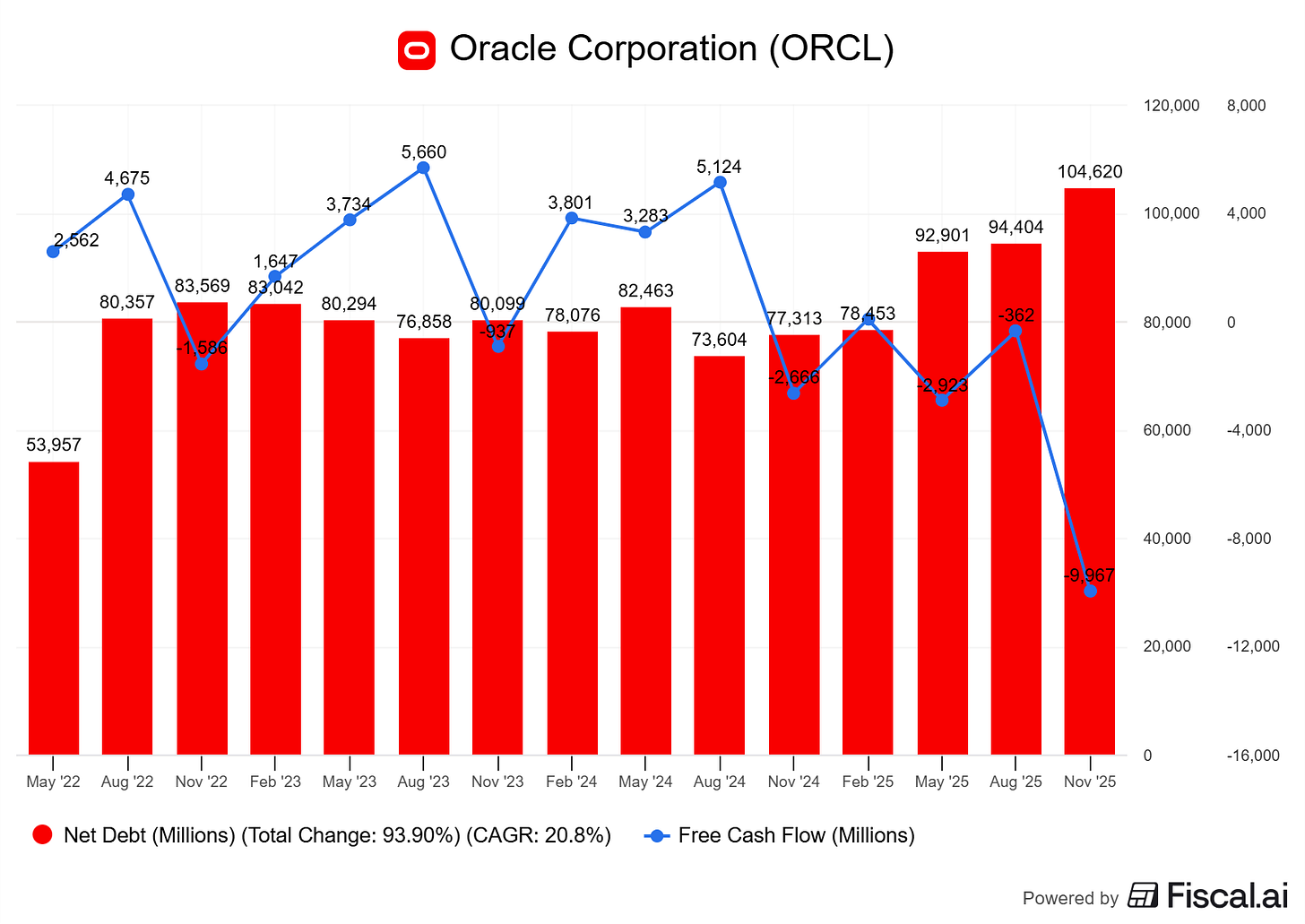

With reason, as Oracle’s aggression translates into a $104B net debt with negative Free Cash Flow and the expectation to raise even more debt short term. It is normal that lenders ask for more yield in this kind of situation as risk is real.

Management tried to calm lenders.

We are committed to maintaining our investment-grade debt rating... we expect to borrow substantially less than most people are modeling.

But the question remains: Will Oracle’s future FCF be large enough to repay its growing debt? This is the worry: as investments increase, Oracle’s financial pressure also increases, with less time left to generate cash to repay this debt. And that is excluding OpenAI’s risk.

This is why lenders ask for more yield: because the risk is growing, factually.

Does that mean the risk will materialize? Not necessarily.

Let’s keep in mind that Oracle is a very healthy company, with a business model based on massive switching costs - databases, and extremely profitable.

But Oracle’s management is setting up an aggressive gamble. Two actually.

That their new AI compute services will generate massive cash flow before their debt commitments come due, allowing them to repay this debt or to reassure the markets about their capacity to repay it.

That OpenAI will come through and succeed, generating enough cash to pay for their commitments to purchase Oracle’s compute.

From the market’s perspective, yes, the demand for compute is massive and growing, the margins seem reasonable and the potential is here. But until there are clear signs, data even, that it will work, or more optimism that it can work, it is a better safe than sorry situation.

Yet, there are many positives left in this sector.

Broadcom Massive Quarter

Broadcom, a key competitor to Nvidia and main manufacturer of AI compute ASICs, has had a different kind of quarter, with important data for AsteraLabs. This is where we’ll see that the AI sector is broad: while the market is worried about Oracle’s financial position, some other AI stocks do not have such problems.

First, a brief explanation of ASICs and why they differ from Nvidia’s GPUs.

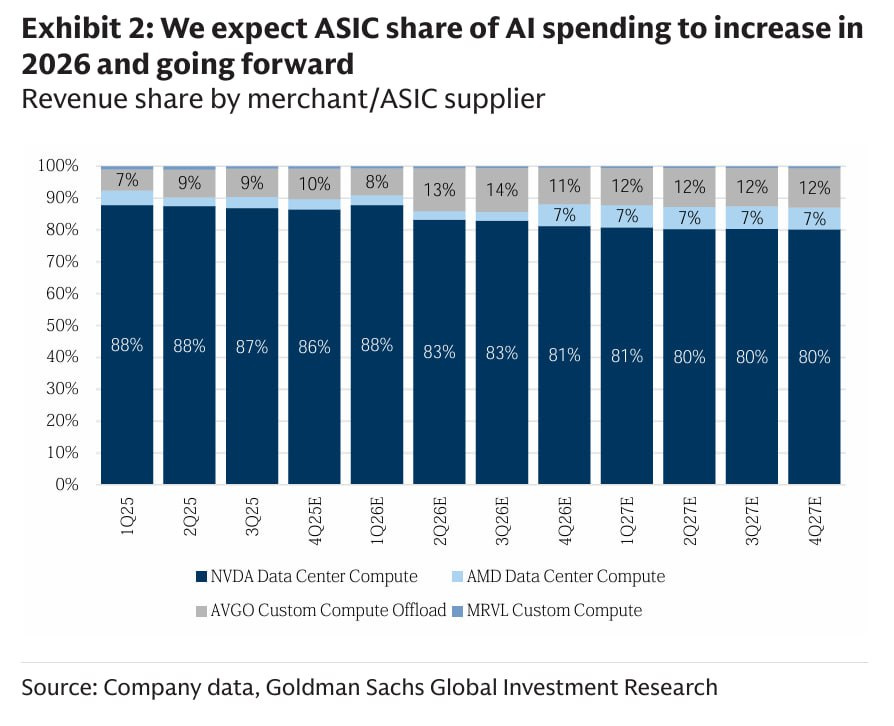

ASICs (Application-Specific Integrated Circuits) are custom chips designed for a fixed function. Bitcoin is mined on ASICs, hardware built & optimized to solve complex mathematical problems rapidly. The same is done for specific workloads, where ASICs can outperform as long as they are optimized for such tasks. Google’s TPUs are ASICs and outperform Nvidia’s GPUs in specific contexts.

GPUs (Graphics Processing Units), which Nvidia made its core business, are designed for parallel computation but are general-purpose, not custom-built for one task, which explains the massive demand early in the AI revolution.

GPUs and ASICs can co-exist. It’s not an issue to have GPUs for some loads & ASICs for others. In a perfect world, we would have ASICs everywhere. The problem is that communication between them is slowed down by their specificities, so companies usually accept slightly lower performance on some tasks for better overall performance. The key advantage Nvidia has over ASICs is CUDA, which creates versatility and allows GPUs to handle almost any AI workload efficiently, unlike single-purpose ASICs. But some use cases can require both to run together.

Broadcom’s business is to help companies design and manufacture ASICs, based on clear specifications. They work on the entire hardware to deliver a final version ready to compute, meaning the switches and other optimizing hardware.

This is the difference between GPUs and ASICs, and that’s what Broadcom does. They manufacture many companies’ ASICs, including Google’s TPUs, OpenAI’s custom chips, Meta’s, Apple’s as announced this week, and many others. The reason those companies work with Broadcom and others to build their own chips is twofold.

Unlock more efficient compute for very specific workloads.

Avoid paying the Nvidia premium for all their compute. With Nvidia at 55% net margins thanks to a quasi-monopoly on high-end GPUs, the long-term investment in in-house chips will rapidly be amortized.

Back to Broadcom’s quarter, it was simple: everything was a beat. Beat on revenues, on profits, guidance, etc...

Broadcom is a great representation of the actual demand for AI in general.

While Oracle is seen as a risk due to massive CapEx & execution risk, semi companies are taking in cash with much less risk - outside of the systemic one of commitments being cancelled or not paid. The world needs chips; they simply manufacture and sell them, and take the cash in directly.

And Broadcom confirmed that demand is through the roof because companies need compute, as we’ve already talked about.

Looking beyond what we’re just reporting this quarter, with robust demand from AI, bookings were extremely strong, and our current consolidated backlog for the company hit a record of $110B.

Demand for custom AI accelerators from our three customers continued to grow, as each of them journeys at their own pace towards compute self-sufficiency. Progressively, we continue to gain share with these customers.

A $110B backlog is a 50% revenue increase from FY25. But there was an even more important message here, directly relevant to AsteraLabs.

The network is the computer, and our customers are facing challenges as they scale to clusters beyond 100,000 compute nodes... We know the biggest challenge to deploying larger clusters of compute for generative AI will be in networking.

They confirmed that the next big challenge is to scale-up. It is the next bottleneck companies will need to address to push compute further: connecting those GPUs or ASICs together with the lowest latency possible, transmitting the maximum data possible at the fastest speed possible.

Guess whose business is to do just that? Broadcom, yes! But there is another one who is building a product just like this, compatible with Nvidia’s GPUs.

AI is Real & AsteraLabs Will Power It

We haven’t been the most bullish so far as I am not here to confirm your bias, but to paint honest pictures of the situations we are in.

There are real risks in the AI sector today.

But there are also many reasons to be optimistic, and many reasons to invest in a few names that are positioned to capitalize on a cash-generating opportunity, far from the CapEx risks and companies who have already delivered their potential.

A Transformative Technology.

I have said it many times throughout this write-up: demand for AI compute is through the roof, and that is because AI is real. It is a real product with real use cases and a real potential for monetization through different forms: efficiency, automation, final products, et cetera...

Many companies already put AI to work. We can think about Meta’s improvement in the advertising world - through targeting, conversion and creativity. We can also think about their smart glasses, which are sold out almost constantly and bring tons of value to their customers. Those are tangible, cash-generating improvements made thanks to AI.

We can also think about real-world advances, notably with Google’s & Tesla’s self-driving systems. If this isn’t a real case of AI impacting the world...

And of course, there are the less visible ways AI impacts the world: the thousands of hours saved by optimization and automation tools - like UIPath software or simple LLM usage, or one of the most impressive of all: Palantir. If you believe AI isn’t real & has no use case in 2025, I’m not sure what I can say to change your opinion by now... The facts are here for those who want to see.

And demand for it has been accelerating because companies see what is possible and want to be part of it, by creating new products or leveraging the technology to improve already existing ones - Duolingo comes to mind here.

This is why we have so much demand: because every company on Earth is concerned by AI, as it is applicable to so many verticals, as long as tech is involved - and tech is involved everywhere nowadays.

The proof is once again everywhere to be seen for those who want to see. From Nvidia’s quarterly results and backlog to Nebius refusing contracts because they do not have the capacity, not to mention the many providers who accept the risk of overbuilding because it is better for them to waste money than to miss the train.

As Mark Zuckerberg put it:

If we end up misspending a couple of hundred billion dollars, I think that that is going to be very unfortunate, obviously. But what I’d say is I actually think the risk is higher on the other side.

Today, data largely shows that demand outstrips supply, and that this situation will persist for a long time, as the backlogs of AI compute manufacturing companies are full for almost all of 2026 already and the entire world is turning to AI.

Expansion Bottleneck

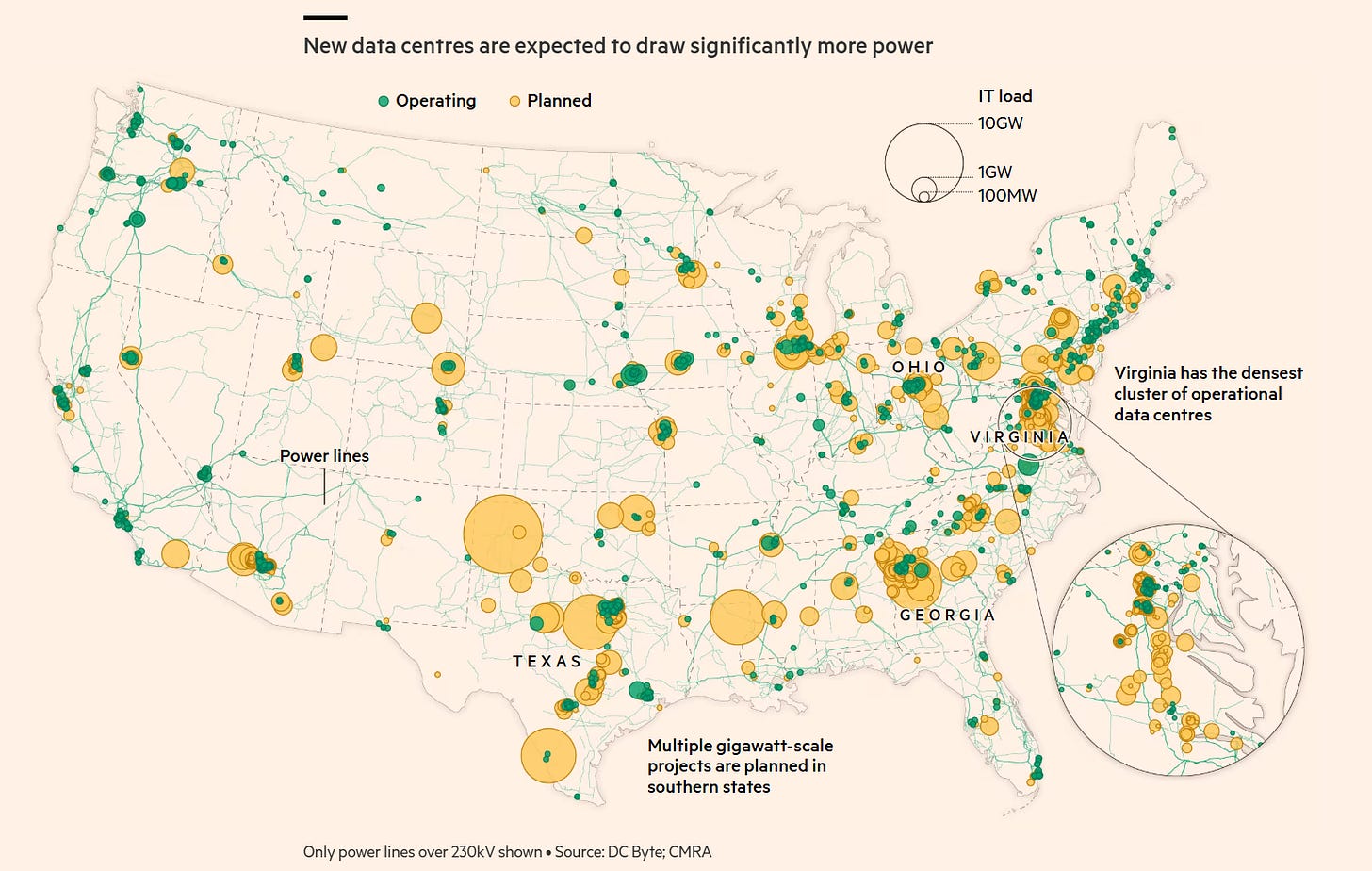

Yes, we don’t have enough compute, but the real bottleneck isn’t “compute” itself; it is the lack of infrastructure and energy sources. Compute is not a resource in itself: it is created by plugging hardware into energy sources within data centers & running data through it to achieve results. This is what “compute” means, and not having enough means lacking many of the components needed to generate it (data centers, energy sources, electrical infrastructure, hardware, human labor, etc.). Creating “compute” requires many steps which take a long time to set up - months to years.

We have two ways to fix this lack of compute.

Constantly build more infrastructure, find more energy sources and plug more hardware into the newly built datacenters. Long and costly, but unavoidable.

Develop a better compute stack, generating more compute per unit of space and energy, hence allowing companies to offer more compute using the same infrastructure.

As you guessed & saw with Oracle and my pitch on Huang’s law earlier: we need both at the same time to answer the current massive and growing demand. But the second solution is more important to achieve than the first: humans can be as innovative as they want, they cannot change the laws of physics & energy doesn’t grow on trees. Our sources all rely on nature at one point or another & building them takes much longer than building hangars.

Nature has limits to what it can provide: this is the limit total compute is facing, and this is why there is only one way forward: more efficient compute.

This is where the next opportunity of the AI ecosystem lies, not in the volume - which has been the focus of the market for the last three years, but in the specifics. There is a handful of companies capable of providing the next step of optimization, and those are the ones who will power the next AI efficiency surge.

And as Broadcom’s management shared on their call, the next step for optimization is scale-up: interconnecting compute hardware efficiently to share information as fast as possible - GPUs, CPUs, memory & more - delivering more compute per unit of energy & space.

AsteraLabs

I’ve already talked about the company, its business & products, so if you’re unaware of the potential - the why and how AsteraLabs helps AI companies scale up and the flywheel effect of its products, you should click here and check it out.

The company sells semiconductors focused on resolving bottlenecks & is positioned in the “safe” part of the AI ecosystem. It still faces the OpenAI and CapEx slowdown systemic risks, but is still quite small and doesn’t need hundreds of billions in revenue to deliver strong growth. Given that its products will power the next round of AI optimization, lots of liquidity should flow its way even with reduced CapEx.

Once again: AI is here to stay. The signs point to more compute demand in the future, and since infrastructure and energy sources don’t grow on trees, improving efficiency is the absolute priority for many quarters and years to come.

From a market point of view, it would be an exaggeration to call AsteraLabs cheap - it isn’t. It’s a great stock, properly priced for stable execution. The stock trades at 33.5x sales at the time of writing, but revenue is expected to grow over 50% next year, which puts the forward P/S at roughly 22x at 50% growth, and below 20x only if growth exceeds about 67%. It is important to say that its key scale-up hardware is expected to ramp up in early 2026, which makes future revenue hard to estimate, as it could also accelerate thanks to those products and the flywheel.
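The forward multiple can be checked with quick arithmetic. The sketch below assumes a flat share price and share count, so that forward P/S is simply the trailing multiple divided by one plus revenue growth; the function names and the growth scenarios beyond 50% are hypothetical, chosen only for illustration.

```python
def forward_ps(trailing_ps: float, revenue_growth: float) -> float:
    """Forward price-to-sales, assuming an unchanged market cap."""
    return trailing_ps / (1.0 + revenue_growth)


def growth_for_target(trailing_ps: float, target_ps: float) -> float:
    """Revenue growth needed for the forward multiple to reach target_ps."""
    return trailing_ps / target_ps - 1.0


TRAILING_PS = 33.5  # multiple quoted in the text

# Illustrative growth scenarios (only the 50% figure comes from the text).
for growth in (0.50, 0.60, 0.70):
    print(f"growth {growth:.0%} -> forward P/S {forward_ps(TRAILING_PS, growth):.1f}x")

needed = growth_for_target(TRAILING_PS, target_ps=20.0)
print(f"growth needed for 20x forward P/S: {needed:.1%}")
```

Under these assumptions, 50% growth gives a forward multiple near 22x, and the 20x mark is only crossed at around 67.5% growth, which is why the pace of the scale-up ramp matters so much for the valuation.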

AsteraLabs isn’t cheap. But it isn’t priced for accelerating growth which could be the real catalyst. It is priced for healthy continuation, not acceleration.

There are risks at today’s price, even if prospects look more positive than they did a few months ago. The potential needs to be confirmed by orders and volume in early 2026, which seems to be the case as management is engaged with most compute providers to meet their demand. But we’re still waiting to see how that materializes in revenue growth.

We also have proof of global demand from peer results: Broadcom - as seen earlier - Marvell, and last week Credo, which beat all expectations with its quarterly results on December 1st. All these companies, while not selling the exact same products, focus on providing optimized compute. AI cannot go further without them.

AsteraLabs specifically focuses on plug-and-play solutions for Nvidia’s GPUs, which hold about 80% of the market. Even if that market share shrinks slightly, the total pie is growing rapidly, meaning they process more volume.

Nvidia talked about a $500B backlog for its GPUs in the U.S. alone up to FY26. All of those can be optimized with AsteraLabs hardware.

The company’s products are needed, its market is massive, and it has already realized very strong growth before its key scale-up products have fully ramped. It isn’t overly optimistic to expect growth to stabilize and accelerate once those products ramp up, assuming demand doesn’t crash due to systemic risks.

In terms of price action, the market has been violent lately, selling off many smaller-cap stocks out of fear that large commitments will disappear. However, AsteraLabs is holding above its weekly 50 and is ranging near its earlier ATH. Hopefully, the ATH will continue to act as support, because breaking lower could open the door to lots of pain, for longer. And that’s a tough spot to be in when holding a stock.

I still consider today’s price a great one and a great buy, but the stock needs to hold and the market needs to find some optimism again. I will probably not hold AsteraLabs if we fall below the weekly 50. The risk of another 30% drop based on pure negativity is too high, and the stock is about 15% of my portfolio today. Closing a falling position doesn’t make one less of an investor; preserving capital remains Rule #1. Personal convictions are only opinions and do not make money unless the market agrees with them, so I try to leave my ego aside when investing.

But as of today, I don’t see much problem with holding or buying/accumulating. We’ll adapt if the price moves lower, but we’re not there yet.

Finally, a quick word on optics. This was a big narrative before Oracle’s quarter & the debacle that followed, with many green candles printed on companies working on optical connectivity. For now, this is a fantasy: we do not have optical connectors within the hardware - they are only between racks. Copper is king and will be for some time, as it will take years of R&D to get optical connectivity into such small environments. The gain in data transfer speed will be massive when the technology is ready, but we are still far from it.

While the market focused on small, pre-revenue optics companies, AsteraLabs was completely ignored while they acquired an optics-focused company last quarter to help on this next optimization. They aren’t late. They were early in resolving the actual AI bottlenecks - scale-up, and will be early again in proposing optics when it becomes viable. This ensures their next source of growth post-scale-up, post-copper.

Conclusion

After quarters or even years of overperformance and optimism, the market is finally pricing in execution and financial risks. This is a logical repricing that had to happen, even if the risks don’t materialize in AI demand, which continues to be global, strong and growing rapidly - evidenced by all earnings reports & the many companies now turning to AI services.

But the current aggressive expansion and leverage from a few companies, coupled with the global fear around OpenAI, create financial tensions and a potential systemic risk that markets logically dislike.

(Rapid parenthesis: This is why I chose to invest in Nebius. Its financial situation is one of the healthiest on the market, relying on convertible notes only, no private debt, and a large equity fund to support growth, while management has proven very reasonable in its expansion strategy).

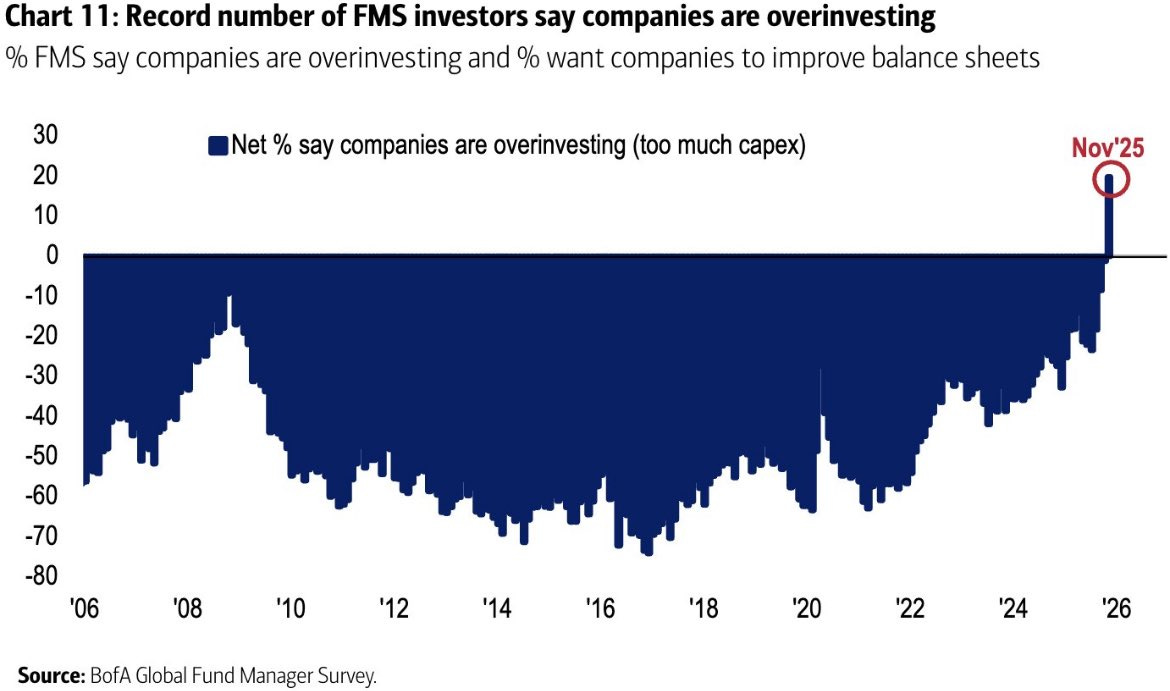

Today, for the first time ever, the majority of analysts believe the spending is going too far, which explains the global pessimism.

But once again: AI demand is through the roof.

The necessary next step is optimized, more efficient compute, to help providers deliver more with less. This grows revenues and margins by reducing costs & will reassure the markets about the financial soundness of their expansion.

This next step requires scaling up AI infrastructure. The companies providing this hardware - like ALAB, are not impacted by the CapEx risks that Oracle and others suffer from. Their business is simply manufacturing hardware, selling it at high margins and generating cash right away.

Maximizing compute per unit of space & energy is core for any provider’s profitability. Demand for the hardware capable of doing so will grow because providers have no choice but to buy it. They are in a race against their own leverage & need efficiency to generate cash as fast as possible.

Yet, despite the strong adoption, massive backlogs, and the non-negotiable need for efficiency to fix the infrastructure/energy bottleneck, the market remains pessimistic and the path back to optimism isn’t easy from here.

Execution is key. The market wants to see stable margins and cash generation - financial proof that commitments will be met and that the current leverage isn’t as dangerous as the market thinks. This means strong quarterly results and therefore, no rapid turnaround.

A positivity boost could come from Nvidia being authorized to sell in China again. The region was estimated to be a $50B market this year, growing rapidly, which would ease many concerns around Nvidia’s growth, and optimism in the leader could spread.

Favorable rulings on tariffs could also boost global market sentiment, just like possible stimulus or tax cuts, but that wouldn’t be AI sector specific.

When it comes to the AI trade, the only way to move back to optimism is execution. And that will require time, while risk remains real.

I hope this write-up gave you some clarity on the situation, the challenges, and the potential left in many names in the AI trade, despite the current pessimism.

I believe we have more to see in the coming months and remain invested/buying the names I believe will be impacted by the next leg, but this leg could take time to materialize. And yes, it comes with real financial risks, which have already materialized.

There are reasons to be cautious, but also many to be optimistic while the market is giving good prices to those who believe the AI trade will continue short & medium term - the long-term potential is not in question. When it comes to hardware, this means AsteraLabs and Nebius for me, while the software stack also has many interesting names - UIPath coming to mind.

I continue to follow my investing strategy shared a few weeks ago.

Everything is about risk tolerance from here. I personally am in and buying. I will be cautious if price action continues to deteriorate, as no matter how optimistic I am, capital preservation remains Rule #1.

Until then, efficient compute is key and only a handful of companies can deliver it. And I want to bet on them.
