Nvidia | Investment Thesis

The one who computes.

Intel’s CEO made a lot of noise over the last few years calling Nvidia & Jensen, its CEO, “lucky”. His narrative is that they just did their job & were there with the right product at the right time, nothing else. Although, to do him justice, he also specified that Nvidia created its own luck, so he did not attribute their entire success to chance.

I am not a big believer in luck myself & I don’t believe it even matters. What matters is what Nvidia became & is: by far the leading provider of what is now the most critical hardware for the tech industry. Hardware capable of seeing the future, according to its CEO.

And that’s what we’ll talk about today.

Nvidia.

Let’s start with a quick history of what Nvidia is: the 3D graphics-focused company that invented the Graphics Processing Unit - the GPU.

CPUs & GPUs.

We’ll need to get technical right away since, after all, we are talking about hardware & one of the most advanced tech companies in the world. Can’t avoid it.

What are they?

Central Processing Units & Graphics Processing Units are both chips, hardware, pieces of tech integrated in our daily equipment that work together to give us the best experience possible.

Here is what they look like - at least a version of them. GPU on the right, CPU on the left.

They don’t look so different, right? Yet they are, even though they both answer the same fundamental need: take input, execute instructions & produce output.

As I type on my keyboard right now, my CPU understands that the letters I press need to be written on my webpage & displayed on my screen. Every piece of software we use is processed by our CPU, continuously, including the operating system & other base tasks. Take input, execute & produce output. Repeat.

Both CPUs & GPUs are composed of what we call “cores”, which, simply put, are the processing units that execute the tasks assigned to them. A CPU or GPU is, in essence, a collection of cores. This is where the difference between them is made.

CPUs were the first processing units built & were optimized for latency (speed per task), not volume, with a few powerful cores. They are good at handling complex instructions sequentially but not thousands of them simultaneously - they can do it but will struggle. This is why new hardware was developed to handle volume, not complexity, with many more, simpler cores.
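To make the latency-versus-volume trade-off concrete, here is a rough back-of-the-envelope sketch in Python. The core counts & per-task durations are made-up numbers chosen only for illustration, not real chip specifications, & `time_to_finish` is a hypothetical helper which assumes every task is independent & can run on any core.

```python
import math

def time_to_finish(num_tasks, num_cores, time_per_task):
    """Time units needed when tasks run in waves of `num_cores` at a time."""
    waves = math.ceil(num_tasks / num_cores)
    return waves * time_per_task

# Hypothetical chips: a few fast cores vs. many slower cores.
CPU = {"num_cores": 8, "time_per_task": 1}
GPU = {"num_cores": 4096, "time_per_task": 4}

# One single task: the faster core wins (latency).
one_task_cpu = time_to_finish(1, **CPU)
one_task_gpu = time_to_finish(1, **GPU)

# A hundred thousand small tasks: the many cores win (volume).
many_tasks_cpu = time_to_finish(100_000, **CPU)
many_tasks_gpu = time_to_finish(100_000, **GPU)

print(one_task_cpu, one_task_gpu, many_tasks_cpu, many_tasks_gpu)
```

With these assumed numbers, the CPU finishes a single task sooner, while the GPU finishes the large batch far sooner. Real chips are much more nuanced, but the direction of the trade-off is the point.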

Here’s a very visual example which showcases the difference between the CPU’s sequential computing & the GPU’s parallel computing.

CPUs were enough in the early days of computers, as most software was complex & required sequential computing without much need for parallel computing, since there was no focus on 3D or graphics at large. Computers were slow & meant to process information, nothing else. CPUs were enough.

But as you know, things changed.

The World’s First GPU.

Enter Nvidia, a company founded in 1993 by Jensen Huang, Chris Malachowsky & Curtis Priem, three engineers with hardware backgrounds at different companies.

Back then, they saw the slow shift from simple software, displaying raw information & only meant to compute, towards more beautiful applications with graphical interfaces & more uses - e.g. gaming. They rapidly understood that CPUs, the only processing chips available at the time, wouldn’t be enough to sustain this revolution from text-based/practical to interactive/3D.

So they worked to develop another piece of hardware capable of handling a multitude of smaller tasks simultaneously, hardware built for volume, freeing the CPU’s computing power to focus on the complex tasks. And that is how, in 1999, Nvidia introduced the GeForce 256, which they called the "world’s first GPU."

They kept going from here.

Early Usage of GPUs.

The company focused on the video game industry at first. Their GPUs were the only piece of hardware capable of computing tons of simple information rapidly, making them the go-to hardware for this already major industry, which kept growing to become massive thanks to the new possibilities GPUs gave to game studios.

They allowed computers to finally have enough computing power to display complex graphics, 3D movements etc... GPUs impacted many more sectors through the years, most of them being linked to the 3D sector - imaging, animation etc…

We couldn’t have today’s movies with only CPUs - or, to be correct, we could, but we would need massive infrastructures, far too expensive to build or run. GPUs made everything cheaper, more accessible & faster. And this kept on going for years as GPUs got less expensive, smaller & more powerful.

Video games, graphics in general & animation are where they are today thanks to GPUs - mostly. And I love it, ‘coz we now get beauties like this one.

But rapidly, the world understood that GPUs could do much more than just graphics. They’ve been focused on this, but after all, what they do is parallel computing and this can be applied to many sectors.

One of them was cryptocurrency mining. I explain everything you need to know about it in my Bitcoin Investment Case. In short, mining is a competition to find the right answer to a hard mathematical problem. What matters is to be the first, & if your computer can test more combinations per second than others… you get a bigger chance of finding the right one.
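For the curious, the "test more combinations per second" idea can be sketched in a few lines of Python. This is a heavily simplified toy, not the real Bitcoin protocol: `mine` is a hypothetical helper, the `"000"` prefix is an arbitrary difficulty I picked, & the block content is made up. It only uses `hashlib.sha256` from the standard library.

```python
import hashlib

def mine(block_data, prefix="000"):
    """Try nonces one by one until the hash starts with `prefix`."""
    nonce = 0
    while True:
        candidate = f"{block_data}{nonce}".encode()
        digest = hashlib.sha256(candidate).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1

# Made-up block content, purely for illustration.
nonce, digest = mine("hello block")
print(f"found nonce {nonce} -> {digest}")
```

Every attempt is independent from the others, which is exactly why this kind of work suits hardware that can run thousands of attempts side by side.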

Cryptocurrencies weren’t the start of it all, but it was around 2010 or so that the world woke up to what GPUs could do, not only for animation & video games, but for everything which needed compute.

And this came with CUDA.

Compute Unified Device Architecture.

This is why I don’t believe in luck, nor would I call Jensen lucky. They knew what they were working toward & had the conviction that parallel processing would become something major. They simply didn’t know what for, so they gave the world the opportunity to show them.

CUDA is a platform, released in early 2007, that Nvidia gives developers to program its GPUs. At a time when most GPUs were used for graphics, rendering & other 3D functions by default, CUDA gave the world the opportunity to develop new use cases by programming the hardware directly.

Things changed but CUDA’s principle remained the same: an accessible platform to optimize GPU usage according to your needs. It can be compared to owning your car instead of renting one. You can do whatever you want with your own: change the paint, the engine or even transform it into a flying car. But you can’t do anything more than drive a rental car.

This is exactly what CUDA allows developers to do: configure their own hardware, down to how each core should behave, thanks to tons of parameters.

This changed everything for Nvidia. They had different hardware, optimized for a different way of computing. They had their niche for years but decided one day to give the keys to their car to millions of brains around the world, and those brains found what GPUs were really good for.

Deep Learning & Artificial Intelligence.

A few years after CUDA was widely released, the tech world was still investigating machine learning, neural networks & data, with the constant goal of teaching machines, so that they don’t only do what we tell them through software but start acting because they understand what we need & how to do it.

I’m sure you understand the difference between asking your kid to do the dishes while explaining every step - walking toward the sink, taking a plate, a sponge, putting soap on it, you get the gist - & not saying a word as he goes straight to the sink at the end of dinner because he knows what he has to do & how to do it.

Everyone, still today, wants machines to behave that way, but no one knew how to do it.

Until the AlexNet neural network crushed the competition at the 2012 ImageNet Large Scale Visual Recognition Challenge. The challenge is easy - at least to understand. Images are given to the model & it has to tell what each image is about.

And as I said, the AlexNet neural network crushed the competition. I’m sure you can guess why but I’ll still write it: for the first time, a winning model was trained on Nvidia’s GPUs - two NVIDIA GTX 580s to be precise - & optimized with CUDA. This combination allowed the team to feed many more images to the model, faster & cheaper, resulting in much better training than the competition, hence better results.

From there, the details on how the model was built were published, other researchers jumped on board & GPUs started to be democratized. Slowly, the community migrated towards this hardware, much cheaper, faster & adapted to parallel computation.

The world finally found what GPUs were the best at & democratized it, all thanks to CUDA which gave the opportunity to the world to do its thing. From there, Nvidia knew what they had to focus on: Continue to develop CUDA to provide better optimization possibilities but also optimize the hardware itself.

And that is what they did.

The Start of Everything.

They built an entire ecosystem with optimized hardware for parallel computing with CUDA on top so any company could optimize their GPU usage even further. With time, they developed hundreds more libraries for CUDA - more than 900 today - always giving more possibilities to developers.

The wheel started from there and the world woke up to it, with each & every tech company turning to them for their compute needs. Data analysis became machine learning & is now called artificial intelligence.

Nvidia’s data center branch was generating around 8% of their total revenues in 2012, before jumping to 18% in 2017, 30% in 2018, 45% in 2020 & finally 80% in 2024, with all the growth driven by constant improvement in their hardware, boosted in FY23 by the commercialization of their best in class: Hopper.

We are now talking about Blackwell & the future generations as Nvidia is everywhere, in every data center & sector as Artificial Intelligence isn’t limited in its scope and can be applied to business, math, research, medicine, robotics… Every sector will eventually need it, which means the world will run on GPUs.

On Nvidia’s GPUs.

Artificial Intelligence.

We went through Nvidia’s story but we now have to dig deeper into the main subject of this write-up & what makes Nvidia the most important player in this sector. The concept of artificial intelligence is pretty easy to grasp: a machine should be able to learn like we do & help us in a human interaction fashion, not only in an “if this, do that” fashion - which is how software works today.

This brings me back to my dishes example.

We’ll try to go over the important concepts here, keeping in mind that we won’t dive too deep into the subject; we’ll only cover the main concepts - models, tokens, training etc… - to understand how it works & why Nvidia is the best at it.

Models, Tokens, Training & Inference.

Those are probably the most important concepts to understand AI in general.

Model & Training.

We usually talk about software for any tool which allows us to do something on our computer or any tech device. Models are to Artificial Intelligence what software is to computers or apps are to smartphones.

Software is developed by engineers using different programming languages with a pretty clear structure meant to explain to the computer what it should do in which case. Models are trained systems which will be able to adapt to the situations they were trained on. No software will tell them what to do & when; a model should be able to know what it should do based on the situation & its training.

Software & models are fundamentally different & this is important to grasp.

Before we have a model, it needs to be trained, just like we need to learn how to swim before beating Michael Phelps. No one will scream at us what we have to do when we are in the water; we know it because we did it thousands, millions of times.

The methodology is no different for models, except for how much faster they can learn - in theory. It goes like this: gather data relevant to what you want your model to do, prepare your training set-up, feed the model with the data & test it afterwards. Review the mistakes, correct them. Don’t rinse. Repeat.
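Here is what that loop looks like in its most stripped-down form, as a toy Python sketch. It is nowhere near how real models are trained: the "model" is a single number (`weight`), the data is invented, & `train`, `learning_rate` & `epochs` are names & values I chose for illustration. Real models repeat the same idea over billions of parameters.

```python
def train(samples, learning_rate=0.01, epochs=200):
    """Fit prediction = weight * x by repeatedly correcting mistakes."""
    weight = 0.0  # the model starts out knowing nothing
    for _ in range(epochs):                 # repeat
        for x, target in samples:           # feed the data
            prediction = weight * x         # test the model
            error = prediction - target     # review the mistake
            # Correct it: gradient step on the squared error 0.5 * error**2.
            weight -= learning_rate * error * x
    return weight

# Made-up training data following the hidden rule target = 3 * x.
samples = [(x, 3.0 * x) for x in range(1, 6)]
weight = train(samples)
print(weight)
```

The loop never gets told that the rule is "multiply by 3". It ends up there only because every mistake nudges the parameter in the right direction.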

Any format of data can be used depending on what you want your model to do. If you train it to be a customer service agent, you have to feed it everything it has to know about the potential issues it can face, any knowledge about the system it is serving but also everything about customer relations like being polite, helpful, understandable etc…

This is where Nvidia’s GPUs & CUDA matter: this is the infrastructure that will deliver the necessary computing power in parallel to accelerate the model’s training. And there is a huge need for it, as with any data fed to the model, billions of parameters will be modified to optimize it. This will be repeated multiple times until the results are good enough - which can take very long. The more compute available, the faster the model will be trained & the more accurate & complex it can be.

If you struggle to understand it, imagine this. You want to learn Chinese but it takes too long, so you plug a computer to your brain - Nvidia’s GPUs. This computer has access to millions of videos of Chinese interactions plus a translation system - the data. For the next hours, the computer will pass multiple videos in parallel to your brain while translating the conversations for you to understand what is happening - the training. It won’t feed you the videos one by one but hundreds by hundreds or thousands by thousands as it has the computing power to do so and doing so will accelerate your training. Then, it will simulate situations where you have to answer in Chinese and correct you every time you fail so you won’t make the same mistake - parameters modification. At the end, you’ll know how to speak Chinese.

This is how models emerge & we are starting to see many of them, with different purposes. We can make a distinction between strong & weak models.

Weak & Strong Models.

Weak models are focused on specific tasks: LLMs, for instance, are meant to answer us & provide the information we need. They don’t do much more than this - which doesn’t mean they aren’t powerful, on the contrary. They’re just specialized.

We can talk about Palantir here. Its AIP models are weak models but some of the most important & powerful tools in the business world, trained on a company’s internal data & meant to help management or anyone optimize it thanks to their deep knowledge of… everything.

“Weak models” is only a name. They are powerful & transformative.

Strong models will be much wider models which should be able to perform different tasks, like humanoid robots taking care of a house. We don’t have many of those yet - more about this on the AI potential later.

Tokens.

Those are the units a model is built from during training, but also the units it consumes & produces when we use it. If we imagine a model as a finished building made of knowledge, then tokens are the hundreds of stones or blocks used to get there & to use the model.

Tokens can be anything: letters, words, phrases, images, audio snippets, pixels… Any kind of data segment.

Billions of them will be used to train the models until they are performant enough. And billions more will be generated when we use the models afterwards between inputs & outputs. Input will be what the model receives & has to interpret while output will be what it gives back.

Any AI works that way: it takes inputs from any kind of data - video, images, sounds, text - & interprets them based on its knowledge, acquired after being fed tons of tokens during training. The model will then behave as it was taught and send back tokens in the required form, be it text, image, videos or movements & actions.
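To give a feel for what "turning data into tokens" means, here is a deliberately naive Python sketch for text. It treats each word as one token, which is an assumption made for simplicity: production models typically split text into sub-word pieces instead. `build_vocab`, `encode` & `decode` are hypothetical helper names.

```python
def build_vocab(corpus):
    """Give every distinct word its own integer id, in order of appearance."""
    vocab = {}
    for word in corpus.split():
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab

def encode(text, vocab):
    """Text in, token ids out: this is what the model actually receives."""
    return [vocab[word] for word in text.split()]

def decode(token_ids, vocab):
    """Token ids in, text out: this is how the model's output is read back."""
    words_by_id = {token_id: word for word, token_id in vocab.items()}
    return " ".join(words_by_id[token_id] for token_id in token_ids)

vocab = build_vocab("the robot does the dishes")
token_ids = encode("the robot does the dishes", vocab)
print(token_ids)                 # [0, 1, 2, 0, 3]
print(decode(token_ids, vocab))  # the robot does the dishes
```

The same principle applies to images, audio or movements: cut the data into small pieces, number the pieces, & let the model work on the numbers.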

Training & Inference.

We’ve seen how models were trained: being fed tons of data in the form of tokens, simultaneously, through GPUs. This is what we call training & it requires tons of computing power to create powerful models, rapidly.

But this is only the beginning as once your model is trained, you need to use it; this is called inference & is also compute intensive. The model still needs to understand you & give an output. Even if smart, it will need compute if only to formulate an answer or action through token generation. More compute will be required depending on how complex the task is.

There is a broad consensus that inference requires less compute than training, but I - and many others - tend to disagree with this. Most, I believe, forget to factor in the massive usage of AI tools in the future & their different applications - we’ll talk about those just after. One person using an LLM isn’t compute intensive, but hundreds of millions of users will need thousands of GPUs to handle inference, and this need will grow with the complexity of the model.
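A quick back-of-the-envelope calculation in Python shows how user volume changes the picture. Every number here is an assumption I made up for illustration (queries per user, tokens per query, tokens per second per GPU), & `gpus_needed` is a hypothetical helper which spreads the load evenly over the day & ignores peaks, so treat the result as an order of magnitude at best.

```python
import math

SECONDS_PER_DAY = 86_400

def gpus_needed(users, queries_per_day, tokens_per_query, tokens_per_sec_per_gpu):
    """GPUs required to serve the average token flow, ignoring peak hours."""
    tokens_per_day = users * queries_per_day * tokens_per_query
    tokens_per_second = tokens_per_day / SECONDS_PER_DAY
    return max(1, math.ceil(tokens_per_second / tokens_per_sec_per_gpu))

# Assumed usage profile: 10 queries a day, 500 tokens each, 1,000 tokens/s per GPU.
single_user = gpus_needed(1, 10, 500, 1_000)
mass_adoption = gpus_needed(100_000_000, 10, 500, 1_000)

print(single_user, mass_adoption)
```

With these assumptions, one user barely registers while a hundred million users need several thousand GPUs running around the clock, before accounting for peak hours or heavier models.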

Different Kinds of AIs.

This is where things start to get interesting as we’ll finally grasp where we are, what’s next & the immense potential of AI, keeping in mind that everything is related to Nvidia as AI = GPUs + CUDA, for both training & inference.

Those are the defined stages of AI; they do not necessarily follow each other - more like developed simultaneously. They are all kind of self-explanatory, but I’ll talk a bit about each.

Perception AI. This stage is about understanding us, our languages, words, vision… We come back to AlexNet & its model capable of classifying images. This has existed for a long time & was called neural networks - it still is, although we usually include everything in “AI” nowadays.

But this went further in the last years as now some AIs are capable of understanding what is happening around them in real time but also to understand any kind of format & not only pictures. We can talk about the Meta Smart Glasses, capable of giving you information about monuments they see through their cameras for example.

Generative AI. This is the next step. Our technology has been capable of identifying things for years, but it hasn’t been able to generate much; this is still kinda new. As the name suggests, those AIs will be able to understand your words & context & create what you need in different formats - text, image, video, movements...

You can ask a lot of ChatGPT or Grok, for example, or you can turn to Adobe’s Firefly or MidJourney for better images as they offer weaker models - as in more specialized - while in some weeks or months we will have widely available video generators.

We can go from words to image or video & back, & in time, not far away, we will be able to go from words to actions as your robot will do your dishes - it already exists but still needs to be perfected.

We are talking about models capable of giving any kind of output depending on your need, while understanding you. From one type of token to another.

Agentic AI. This is the next step, really new in its advanced form & we honestly don’t have many yet. Those will be able to understand & generate output but will also be capable of reasoning & decision-making, based on data & their training. The ultimate stage of agentic AI will be when those are capable of decision-making without any human supervision.

The best example so far would again be Palantir AIP, which is trained on a company’s internal data, capable of reasoning based on this data & any other external data to propose a solution to any kind of problem raised by a user.

The decision still goes back to a human but we’re close enough to what Agentic AI aims to be - AIP can also make decisions by itself if required. Those need to be capable of perception & generation as they need to acknowledge external data & produce output; the difference lies in the complex reasoning & decision-making.

Agentic AI should autonomously pursue goals, make decisions & act in a dynamic environment.

Physical AI. The next step is obviously to bring AI to our physical world, not only in data & computers but in our roads & houses.

It isn’t enough to have robots programmed to do some repetitive tasks, as we have had for decades in factories. We’re talking here about physical robots, or whatever you want to call hardware capable of perception, generation, reasoning & action. Capable of understanding the world & its physics.

As a Tesla bull, I will use here the example of FSD, an AI system capable of driving your car (almost) by itself.

We’re not 100% there yet, but we’re damn close, and this is only one application for physical AI. The training & inference for this kind of product is nuts so the need for GPUs is too - which is exactly why we have reasons to be very bullish on Nvidia & GPU consumption.

This was a good overview of AI, what it is, how it works & the different applications we have & are working on. Now we can focus a bit more on Nvidia’s bull case.

The Bull Case.

During the 1850s, gold mines were discovered in California & this triggered a massive migration of Americans who wanted to make a fortune. The first ones probably did as they had it all for themselves, but as many more came with the same aspiration, fewer & fewer were able to find enough gold, and most ended up without much. During all that time, those who got filthy rich were not those who found the gold; they were those who sold the shovels.

This comparison was often used to talk about Nvidia over the last years, and it does make sense because this is what the company has been doing: selling shovels.

Cross Sector Industry.

This is the first thing to talk about although you probably have already understood it. Artificial Intelligence is for everyone, everywhere, and has no limits in its usage.

AI is about computation, & each & every industry needs at least to process its own data to optimize itself - Hello Palantir, again. I could go on forever and talk about all the sectors & how AI or computation power can be applied to them, with some highlights in healthcare, for example to find correlations between patients' lifestyles & diseases, or between genomes, molecular research & more.

We can talk about Hims here with its MedMatch system made exactly for this - not the research part, the correlations part. If interested.

AI will bring massive transformations in healthcare, but in many other sectors as well, and I don’t think we should underestimate its potential, need & capacity to transform our societies to their core. To optimize them. And again, the common need for everyone in AI is computing power, hence GPUs, hence Nvidia.

Besides the sectors, I’d like to point out that in terms of geographies, only the U.S. has been strong at it so far. China is waking up but is very late in terms of infrastructures - also slowed down by restrictions we’ll talk about; Europe is focused on regulating something which doesn’t exist, while the rest of the world is not really involved.

There are no limits to where AI can go & what it can do. At least, not yet.

Hardware & Software.

As I’ve said, Nvidia has focused its business on optimizing both its GPUs & CUDA, constantly delivering better value for tech companies, more computing power for smaller, cheaper & more optimized infrastructures.

The first real leap forward in terms of hardware came with Hopper, a GPU chip with a built-around infrastructure optimized for heavy data processing in general, which includes AI training & inference. Better than what we had, but far from perfect.

Yet, it is what is installed in most AI datacenters nowadays & was, until not long ago, the best anyone could hope for. The first leap forward.

The second & real leap forward comes now with Blackwell, their new chip supposed to be 2x to 5x more performant when it comes to training & 15x to 30x more performant when it comes to inference. This is the next must-have hardware infrastructure for any AI datacenter.

Better, Blackwell chips are customizable as Nvidia works to deliver tailored solutions to its clients. The hardware is different depending on how it’ll be used. Hardware can be optimized to get exactly what you need. CUDA will then be able to customize their usage through multiple libraries for any sector, application, industry, company...

The perfect customization.

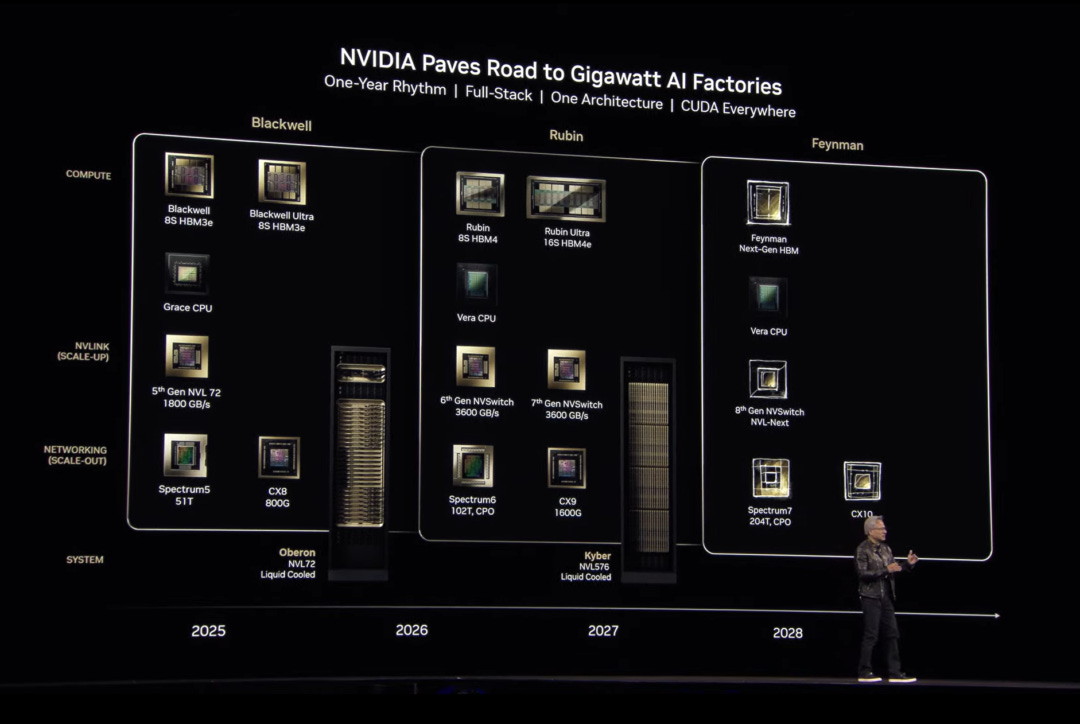

And the company is already working on what comes next, with a new version of Blackwell later this year, the Vera Rubin infrastructure next year & the Rubin Ultra around FY27, while the generations after that will be named after Feynman.

Better GPUs, more computing power, optimized energy consumption, more customization. Every year.

I did focus this write-up on the datacenter part of Nvidia, but you should know that the company kept working on other verticals. They continue to upgrade & sell GPUs for graphics & gaming, sell workstations or enterprise-optimized computing units - they announced their new DGX last week - and other hardware for many other uses. Most revenues come from datacenters, but it isn’t all they do.

They work to give everyone access to computing power at the best value, from multi-billion dollar infrastructures down to hardware costing a few hundred bucks. For massive corporations & individuals.

Computing power for all.

Synthetic Data.

I’ve said it already, many times: data is one of the most important resources of the world at the moment. And we don’t have enough of it.

It’s not that we can’t have it; it’s just that we’d need too long to capture the amount we need to train models. Even very simple actions from a human perspective require a huge amount of data to train a model. This data can exist for some tasks, but it doesn’t for all of them, not in the required amount or not free to use.

Nvidia chose to take a different approach & developed two platforms, Omniverse & Cosmos, whose objective is to generate data from real data. Let me explain.

Omniverse is used to build 3D worlds based on the real world, to create digital twins - a virtual model of a real place like a factory, for example. Cosmos will use those 3D worlds to generate realistic use cases & generate content based on this digital twin.

You probably get the gist. Nvidia is using a piece of real-world data to generate more data through those two services. They create the data they need & do not have in an instant instead of spending days or weeks to record it.

In brief, we get to train robots with data coming from simulations. Creating the necessary data, which would otherwise take days, can be done in a few minutes using both Omniverse & Cosmos.

Nvidia uses AI to create the training content physical AI needs. This obviously requires tons of compute, be it to generate the content or to train the models.

The loop is kinda closed for a company that wanted to revolutionize the 3D world. They did so & can now use their 3D models to create their next success story: the training of physical AI.

The Vision.

So where are we at? GPUs were developed for parallel computing, CUDA made a big difference, hardware improved, & we can now train models & have them use tokens as input & output to accomplish what we need them to do, all thanks to GPUs. Why do we need more & what do we need it for?

Jensen spent some time talking about this during his keynote last week & said this.

“The computation requirement, the scaling law of AI, is more resilient and in fact hyper-accelerated. The amount of computation we need at this point as a result of agentic AI, as a result of reasoning, is easily a hundred times more than we thought we needed this time last year.”

The reason for this is that we have had generative AI tools for some years now, but they are still incomplete & regularly inaccurate. We used so much compute for imperfect tools. They sure are getting better since their engineering was reviewed so that they verify their own answers through different methods before sharing them, and we can see it nowadays with Grok for example. As a result, many times more tokens are used compared to a year ago to limit hallucinations - wrong answers. But it’s not enough; we still need more just to perfect what we already have.

The more data used during training, the better the models. The more repetition of any task, the better the output. More of this doesn’t necessarily mean a need for more computing power, but not increasing computing power means we’ll need longer to train our model or to get our outputs during inference.

More tokens mean slower training or inference as the pace is limited by GPU capacity. Generating X times more tokens to increase the accuracy of current models in training or inference while keeping the answers as fast as they currently are will require dozens of times the computing power currently deployed.
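The relationship is simple enough to write down. Below is a small Python sketch with invented numbers: a 500-token answer, a 10x increase in tokens for a model that double-checks itself, & a throughput of 100 tokens per second are all assumptions for illustration, & both helper functions are hypothetical.

```python
def response_time_s(tokens, tokens_per_second):
    """Seconds to generate an answer at a given throughput."""
    return tokens / tokens_per_second

def throughput_needed(tokens, target_seconds):
    """Tokens per second required to answer within `target_seconds`."""
    return tokens / target_seconds

baseline_tokens = 500        # assumed size of a plain answer
reasoning_tokens = 5_000     # assumed 10x more tokens to verify the answer
throughput = 100             # assumed tokens per second available today

baseline_time = response_time_s(baseline_tokens, throughput)
slower_time = response_time_s(reasoning_tokens, throughput)
required = throughput_needed(reasoning_tokens, baseline_time)

print(baseline_time, slower_time, required / throughput)
```

Under these assumptions, the same hardware answers ten times slower, & keeping the original response time takes ten times the throughput. This sketch only counts tokens; it does not capture the extra compute each token costs on a bigger model.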

No one likes to wait, and it is starting to show. Demand for Nvidia’s hardware continues to accelerate. In the less than a year since Blackwell became available, they have sold almost 3 times more Blackwell GPUs than Hopper GPUs in Hopper’s peak year, counting only the top 4 Cloud Service Providers & excluding every other client.

And we have to add to this many other AI verticals as this is only about what already exists, but what about the next steps? What about the thousands or millions of apps which should migrate from software to models? What about the democratization as more & more users access those models, and what about the physical AIs which will increase in the next decades, robots & autonomous vehicles, trained & working solely on computing power?

Over the last ten years, we’ve seen advances in research; we knew things & made them better. The next ten years will be about application. We’ve grown AI to be usable, we now get to use it, and it is very hard, almost impossible, to foresee where the next decade will take us.

“What can you learn from data?” is the question left to answer.

It’s also impossible to quantify the future need for computing power. It won’t stabilize nor decline, and as Nvidia develops better & better hardware, the world will certainly have to rely on it to push the limits. To bring AI to the next step, both in training & inference.

Risks & Questions.

Competition.

As in most domains, you have competition when it comes to Nvidia & their GPUs. They were the first, and they remain the biggest provider today with a market share north of 80%, and there is a reason why: CUDA.

The biggest competition to Nvidia at the moment is AMD, and even if they can compete in terms of hardware capacities, they cannot compete with the entire infrastructure Nvidia is capable of selling.

As harsh as it is, this entire business is about optimization & companies want to work with the best because if they don’t, they will fall behind as their systems will end up being less performant.

Even with equal hardware, CUDA makes such a difference that it wouldn’t even matter if AMD’s hardware were better; it would still fall behind once Nvidia’s GPUs are optimized with CUDA & its 900 libraries.

This is why Nvidia has so much market share & this is why they’ll continue to do so in the future, because developing CUDA took more than 15 years & its network effect is now too strong to be taken over by a new player. Nvidia’s installed base continues to grow, which reinforces its network effect. Some can bet that AMD or anyone else will dethrone Nvidia, but I personally don’t believe it in the near future & most network effect stories tend to go my way.

Far be it from me to say that AMD’s GPUs are useless; they are not. They can be used as cheaper alternatives to Nvidia for different kinds of compute - and are - but when it comes to the biggest chunk of the market, it is all about Nvidia & will continue to be as long as there are no alternatives to CUDA. And even when an alternative rises, it will take time to push CUDA away.

Besides AMD, many other companies are trying to work on in-house GPUs, mainly the Mag7, with Amazon, Google & Microsoft taking part in the game. Like AMD, they can develop powerful GPUs, but they’ll continue to rely on Nvidia & CUDA for most of their workload, because they don’t have an alternative & not relying on the best would put them behind.

And like no one wants to wait, no one wants to be behind.

Will Investments Continue?

There are many conversations about a potential bubble in AI infrastructure and about whether companies need to keep up their current Capital Expenditure. Do they really need more of it, and can they monetize it properly?

At the end of the day, not every company will buy GPUs; they’ll rent them just like they rent cloud services. That’s what Nebius proposes, for example.

They buy GPUs, install them in their own infrastructure & rent their usage to clients, for both training & inference - for whatever they want them for. They usually bill per token generation & we already explained that the more advanced AIs are, the more tokens they use for both training & inference as they second-guess themselves to be as accurate as possible. This is step one: tons of tokens for accuracy.

When we browse the internet, we won’t wait 30 seconds for an answer. As I personally use LLMs more & more, I get annoyed when they take more than 1 minute sometimes for an answer to a question I judge simple. As technology advances, our expectations rise. And this is only in the case of LLMs, but it will be true for physical AIs as well - you certainly do not want your car to turn or stop one second too late because it didn’t have enough compute to make it in time. Certainly not. And all of this will obviously require a good amount of compute to get there. This is step two: tons of compute for speed.

In brief, you want to generate as many tokens as fast as possible. The street believes that training is the most GPU-intense service. And this might be true for now, but as I shared earlier, models are not really great yet. The better they get, the more compute they use & when you add those two steps together… You realize that inference isn’t cheap in compute, far from it - the example in this video confirms it.

The better the model, the more compute is needed. We’re talking 20x tokens & 150x compute in this example. To this, we need to add the volume of users.
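To make the multiplication explicit, here is a minimal Python sketch. The 20x tokens & 150x compute figures come from the example above; reading the 150x as total compute per query is my assumption, and the 10x user multiple is purely hypothetical, picked only to show how the factors stack.

```python
def inference_compute_multiple(compute_per_query_multiple: float,
                               user_multiple: float,
                               queries_per_user_multiple: float = 1.0) -> float:
    """Total inference compute relative to today, as a simple product.

    Assumption: the factors are independent, so they multiply.
    """
    return compute_per_query_multiple * user_multiple * queries_per_user_multiple


TOKENS_MULTIPLE = 20.0    # from the example: 20x more tokens per answer
COMPUTE_MULTIPLE = 150.0  # from the example: 150x more compute per answer
USER_MULTIPLE = 10.0      # hypothetical: 10x more users than today

# Implied extra compute per token if both figures describe the same query.
compute_per_token_multiple = COMPUTE_MULTIPLE / TOKENS_MULTIPLE

total = inference_compute_multiple(COMPUTE_MULTIPLE, USER_MULTIPLE)
print(f"compute per token: {compute_per_token_multiple}x, total: {total}x")
```

Under these assumptions the total lands at 1,500x today's inference compute, which is why the user count matters as much as the model itself.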

My take is that no one knows how much compute will be needed for inference in the next years. We have an idea of what training requires, but we do not know how much compute great models will consume, nor how many models will be created & used, nor how many people will use those models simultaneously.

What we know is that today’s installed compute isn’t enough, and this is confirmed by providers like Google, Amazon, or OpenAI, which cannot satisfy the current demand for both training & inference even though few models are deployed & few real-world use cases are widely available. What happens when we have thousands, millions of apps & billions of users on the most advanced models?

My take is that it’ll need multiple times what is already installed in terms of computing power. As for whether those buyers will be able to earn a return on those investments? It really isn’t today’s subject as we’re talking about Nvidia here, not Google or Meta. But as I shared throughout this write-up, I personally believe some will - but not all.

Innovation Means Less Compute.

In his last keynote, Jensen called himself “the revenue destroyer” and said that his sales team would hate him as his company kept selling better & better hardware, capable of more compute for less. This is about the hardware.

But it also happened recently with DeepSeek’s model, an LLM much more performant than ours, which consumed much less computing power for its training - or so they claimed. I don’t care whether the story is perfectly accurate or not; the fact remains that Chinese engineers found ways to train their model more efficiently. The street interpreted this as “we can do more with less”, hence no need for so much infrastructure. I said at the time that it wasn’t the right interpretation & I continue to believe it.

We can briefly talk about Jevons Paradox. Here’s Grok’s brief take on it:

“Proposed in his 1865 book The Coal Question, the Jevons Paradox states that when technological progress increases the efficiency of using a resource (making it cheaper or more effective), the total consumption of that resource often increases rather than decreases. This happens because lower costs boost demand and usage, offsetting the efficiency gains.

Core Idea: Efficiency → lower cost per use → wider adoption → higher overall consumption.”

As cars got cheaper, they ended up being bought more often & the entire industry did grow, not decline. As computers got cheaper, they ended up being bought more often & the entire industry did grow, not decline. Both got cheaper but also more powerful, and as it happened, we ended up using them for more than originally because our world continued to innovate.

This is the concept behind Jevons Paradox, and it shouldn’t be different for AI.
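A quick way to see when the paradox holds is a toy model in Python. It assumes constant-elasticity demand, which is my simplification and not something Jevons or the quote above specifies: when efficiency doubles, the cost per unit of work halves, and the question is whether demand grows by more than 2x.

```python
def resource_use_multiple(efficiency_gain: float, elasticity: float) -> float:
    """Relative total resource use after an efficiency gain.

    Assumption (illustrative only): demand for useful work follows
    cost ** (-elasticity), i.e. constant price elasticity.
    """
    cost_multiple = 1.0 / efficiency_gain           # each unit of work gets cheaper
    work_multiple = cost_multiple ** (-elasticity)  # so more work is demanded
    return work_multiple / efficiency_gain          # each unit needs less resource


# Hypothetical elasticities, with a 2x efficiency gain.
for elasticity in (0.5, 1.0, 1.5):
    print(elasticity, round(resource_use_multiple(2.0, elasticity), 3))
```

In this sketch, total consumption rises only when elasticity is above 1, meaning demand reacts more than proportionally to lower costs. The bet on AI compute is that we are in that regime.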

Some could argue that it is different as not everyone needs or wants a GPU at home the way they need a car or a computer. I’d answer that this is true, physically, but it isn’t true in terms of usage. The fact that you don’t need it at home doesn’t mean you won’t use it; you will simply use it through third parties, exactly like you are using ChatGPT on its web app.

Add to this my entire case about training better models & inference, plus the amount of simultaneous usage we’ll have for those models.

Jevons Paradox was true for cloud services. Why not for AI?

Regulations.

This is another risk, but it isn’t directly tied to Nvidia’s business; it is about the global geopolitical environment & governments. As you understood through this write-up, the company is selling one of the most important pieces of hardware for our generation’s future innovations.

This is why there is so much demand for it, but while companies focus on revenues, governments have entirely different concerns. The United States understood how important Nvidia’s GPUs are and forbade the company from selling its most advanced ones to rival countries like China.

There are different rules based on computing power, licensing, geography, etc. The rules are a bit complicated & I don’t know them all, but the bottom line is that Nvidia cannot have a normal business with a few key partners, which obviously slows down its potential growth.

Once more, this is external to the company & they have no control over it, besides engineering chips that can be sold while respecting the restrictions. It’s still important to mention as it certainly won’t change for the better.

Financials.

This will be a rapid & boring part because when you sell shovels to everyone during a gold rush, well… You make tons of money. And even better, when you’re the only one selling shovels, well… You sell them at very high margins.

And that is honestly all there is to say.

We’re talking about a company growing at a 67% CAGR over the last five years. Impressive, but this won’t continue at this rate for the next five. As we’ve seen, the AI frenzy started in the early 2020s - it took time for Nvidia to develop its chips & for companies to adapt & find ways to train models after AlexNet in 2012. Datacenter revenues started to grow slowly; the explosion came once it was crystal clear that they sold the shovels everyone needed.
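To put that growth rate in perspective, here is a small Python sketch of the compounding arithmetic. Only the 67% rate and the five-year window come from the text; the function names are mine and no actual revenue figures are used.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Cumulative growth multiple implied by a compound annual growth rate."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by a start value and an end value."""
    return (end / start) ** (1.0 / years) - 1.0


multiple = growth_multiple(0.67, 5)
print(f"67% CAGR over 5 years is a {multiple:.1f}x increase")
```

A 67% CAGR sustained for five years compounds to roughly 13x, which is why repeating it over the next five years would be a very different proposition.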

From there, some would say Nvidia took advantage of its position. The truth is they had so much demand for their hardware that they had to filter it somehow, and the best way to do so is with pricing. So they did, which allowed them to reach margins above 50%, which is simply insane.

This generated insane amounts of cash, which the company reinvested in part & kept warm for the rest, with some buybacks but nothing outrageous, growing a cash pile now worth $33B net of debt.

What more should I say? This is what happened; as for what should come, I presented here why I believe growth is far from done, and as for the margins, I see no reason for them to decline as long as they have a monopoly on high-end GPUs. That means continuous cash generation, a growing cash pile & probably growing returns to shareholders, hence growing EPS & FCF per share.

That’s about it.

Conclusion.

This was a pretty long write-up, but you should now know everything you need to know about Nvidia, Artificial Intelligence & why the company is probably the best positioned to capitalize on it.

They developed the famous GPUs & the world found the best usage for them, which management understood & worked on to deliver the best hardware there is and, most importantly, the best optimization methods for their clients to get the best out of their hardware - that is CUDA.

This stack of infrastructure, including Nvidia’s GPUs & CUDA, is the best any company can have at the moment for both training & inference, and I personally don’t see this changing in the short/medium term. In any case, no company would gamble its lead or innovations on another product, as being late is as good as being dead.

The question about the real need for computing power remains, and no one can answer it. But if it can be any indication, current infrastructure is not enough for the current workload and I personally do not see why this workload wouldn’t increase over the next years as we develop better models which require more training, more token generation for inference and are more & more widely used.

Data is everything. Data was & will be the source of innovation. We now have tools to use more of it, and we don’t know yet the limits of its usage nor what it could bring to our world in so many sectors.

As for where or when this will stop? No one knows. No one can anticipate how much compute we will need, in terms of training & inference, for our world to become World 2.0 & to reach what Jensen expects, which can’t be avoided.

“Everything that moves will be robotic, and it’ll be soon.”

And it’ll all be powered by Nvidia’s GPUs & CUDA.
