Astera Labs | Investment Thesis

Optimizing AI Data Flow.

This write-up requires basic knowledge of semiconductors and compute: where chips come from and how they are made. Astera is a fabless company whose semis are focused on resolving AI computing bottlenecks.

If those words aren’t familiar to you, or if they are but you aren’t sure exactly what they mean… You should start here.

I’ll assume that those concepts are understood for this write-up and won’t come back to them.

Optimization is Key.

We already talked about this with both Arista and Nebius, and with some other stocks over the last few quarters. AI requires the most optimized hardware for training and inference in order to have the best performance at the best price.

This starts with using optimized infrastructure first, with efficient energy sources and the best hardware in terms of semiconductors, networking, and AI compute. That would mean using ASML lithography equipment, chips manufactured by TSMC, Arista’s switches and Nvidia’s GPUs. And yet, this would not be the most optimized setup, as there are more innovations which can optimize compute even further.

And those smaller pieces of hardware are why we’ll talk about Astera Labs and its PCIe/CXL/Ethernet solutions, which are now being adopted within the most advanced AI datacenters in the world.

The company is focused on resolving compute bottlenecks, which are what you would think: interconnections where data transfer slows down. As the smallest compute efficiency gain can have large consequences on training or inference, you can imagine that demand will follow, especially as their hardware is giving great results.

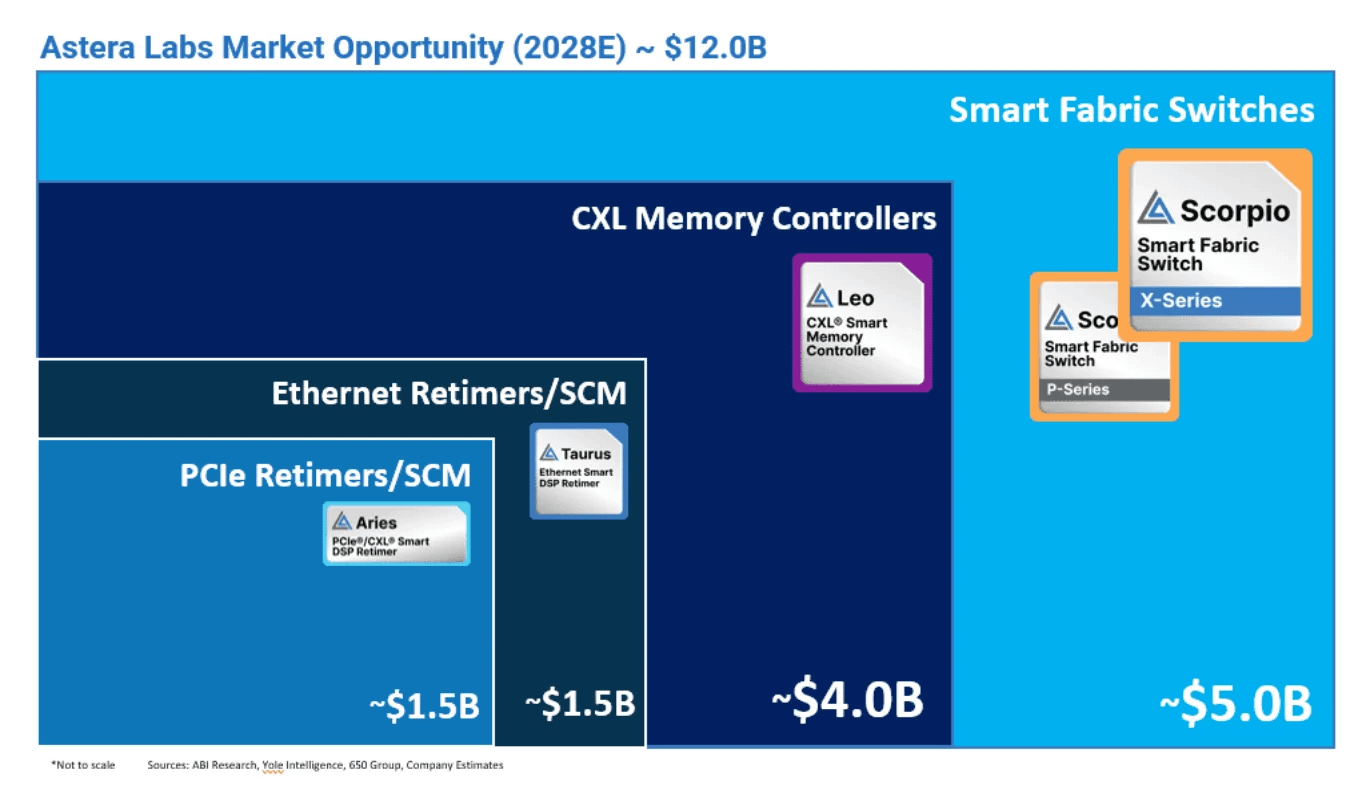

And the market is really large, as we are talking about AI datacenter demand and, more precisely, a sub-market within datacenters: the connectivity market.

We’ll dive into the different products and why they all matter.

Products & Functions.

We’ll get technical right away, as we need to understand what Astera does product by product. I’ll first recap a bit what datacenter hardware is, although we saw that in Arista’s thesis.

I’ll use an Arista switch to illustrate, for simplicity. Here’s what it looks like from within: an assembly of different components with different functions - system cards, coolers, CPUs, etc… each composed of thousands of semiconductors, interconnected by even more of them.

Everything packed into a metallic box.

The interconnection of semiconductors forms hardware.

The interconnection of hardware forms racks.

The interconnection of racks forms datacenters.

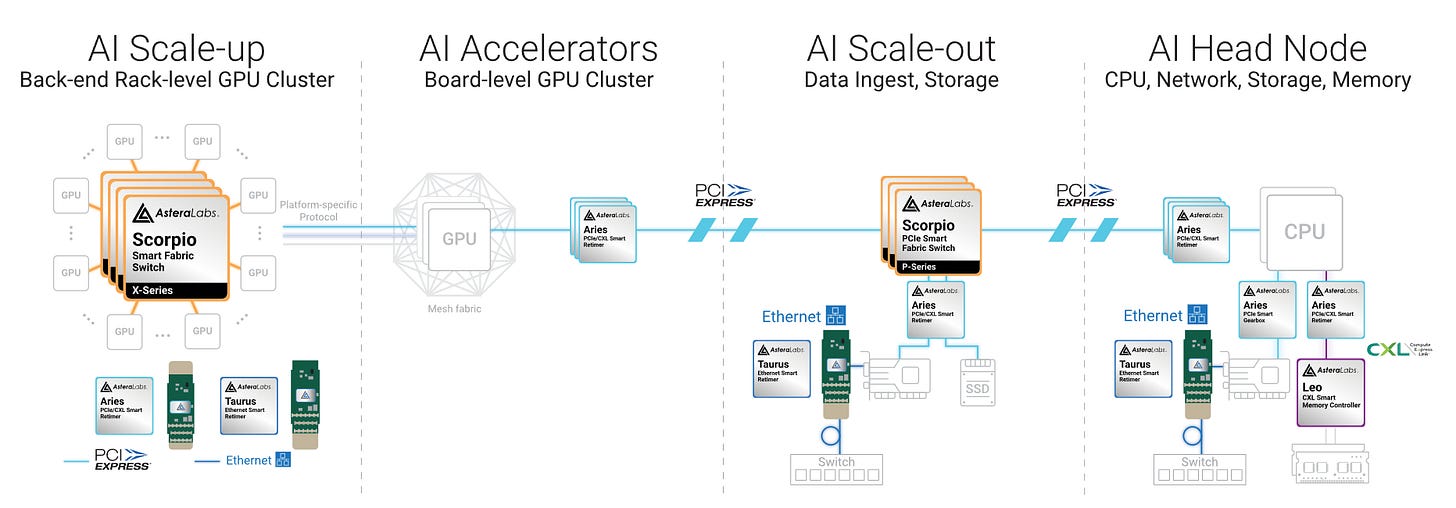

Astera Labs is a fabless semiconductor company with four major products meant to accelerate and optimize data flow within hardware. They are all built on open standards, which means they are plug-and-play compatible with any hardware - NVIDIA, AMD, Intel, Micron… Within hardware, equipment is plugged into PCIe connectors, which are comparable to electrical outlets at home. The difference is that instead of only transmitting power, PCIe transmits data, power and clock signals.

Astera also protects its hardware with hundreds of patents, creating an edge over the long term.

Let’s go over each product.

Scorpio Fabric Switches.

You’ve already heard the word switch in my Arista investment thesis, as this is what that company focuses on: datacenter switches to boost AI efficiency. Astera Labs’ switches are different to some extent.

Astera’s switches are included within hardware, to optimize data flow between GPUs within a rack, while Arista’s switches are meant to optimize data flow between racks. Both act as switches, with the same objective, but at different levels of the hardware chain, and are not interchangeable, despite both being called “switches”.

If I were to reuse my post office analogy from my Arista thesis, and assume that a rack is one post office and a datacenter is the world, Arista’s switches optimize data flow between post offices - racks, while Astera’s switches optimize data flow within the post offices.

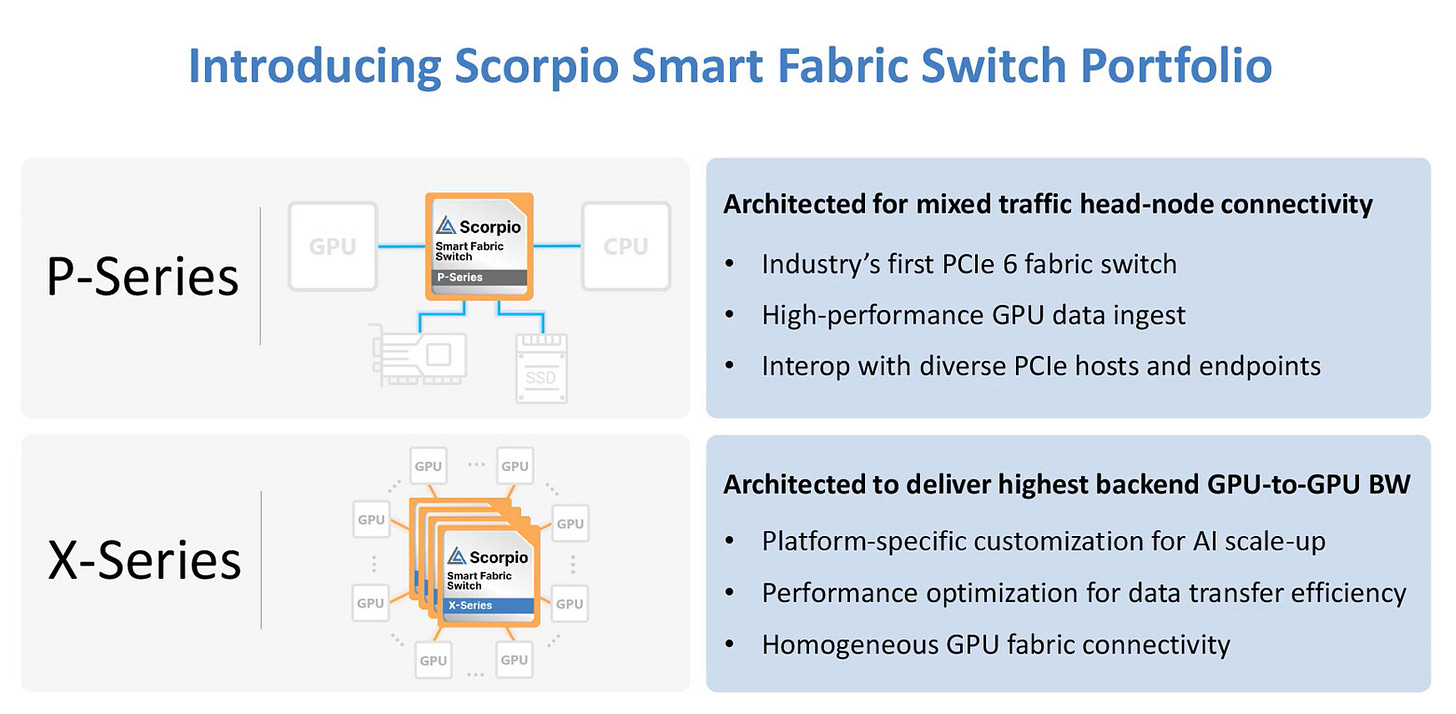

Back to the Scorpio switches. This semiconductor interconnects other semiconductors within hardware. It comes in two versions: the P-Series, meant to optimize data flow between different components - CPUs to GPUs or GPUs to SSDs, etc… And the X-Series, meant to optimize data flow between GPUs only - focused on AI training and inference.

To use the proper language, connecting GPUs to each other so they work as one larger accelerator is called scale-up, while connecting them outward to other components and, ultimately, other servers and racks is called scale-out. Scorpio’s two versions cover both, and both went through real-world tests with above-average performance due to their specialization. Both were engineered for AI workloads and optimized for high bandwidth, low latency and low energy consumption, resulting in much faster data output with 100 GB/s of throughput and around 30% higher GPU usage, which is honestly massive.
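To put that 30% figure in perspective, here is a minimal Python sketch of what higher GPU usage means in practice. The cluster size and the 50% starting utilization are hypothetical numbers picked for illustration, not Astera data, and I read the 30% as a relative improvement, which is an assumption on my side.

```python
def effective_gpus(total_gpus: int, utilization: float) -> float:
    """GPUs' worth of useful work delivered at a given utilization (0..1)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return total_gpus * utilization


TOTAL_GPUS = 1_000            # hypothetical cluster size
BASELINE_UTILIZATION = 0.50   # hypothetical starting point, not a measured value
RELATIVE_GAIN = 0.30          # "around 30% higher GPU usage", read as a relative gain

# Utilization cannot exceed 100%, so cap the improved figure.
improved_utilization = min(1.0, BASELINE_UTILIZATION * (1 + RELATIVE_GAIN))

before = effective_gpus(TOTAL_GPUS, BASELINE_UTILIZATION)
after = effective_gpus(TOTAL_GPUS, improved_utilization)
extra = after - before

print(f"Busy-GPU equivalent before: {before:.0f}")
print(f"Busy-GPU equivalent after:  {after:.0f}")
print(f"Extra compute without buying a single GPU: {extra:.0f} GPUs' worth")
```

Under those assumptions, the same 1,000 GPUs deliver the work of roughly 650 fully busy GPUs instead of 500. The hardware bill stays the same, which is why a connectivity gain matters so much at datacenter scale.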

Second advantage over the competition: those switches are compatible with PCIe 6, the latest hardware connectivity standard emerging in GPU racks, giving Astera a first-mover advantage for next-gen hardware.

The P-Series version is already in volume production, while the X-Series is planned for high-volume production in late 2025. And as the P-Series demonstrated high performance and high demand - the reason for Astera’s growth last quarter, there are large expectations for the X-Series.

Aries Retimers & SCMs.

Aries retimers are complementary to the Scorpio P-Series and enhance connectivity between the latter and PCIe 6.0 connectors. They come in two formats: integrated into boards, by themselves or alongside the Scorpio Series and the PCIe connectors, or built into cables as long as 7 m that run between racks - the Smart Cable Modules, or SCMs. This inter-rack design makes it possible to maximize GPU usage across a datacenter and not only within each piece of hardware individually.

Without going into the details, retimers keep signal timing clean - recovering data, compensating for losses, and retransmitting a clear signal, as lots of factors can degrade signals within a datacenter. They ensure optimized data flow for both scale-up and scale-out. You can find Aries on many interconnections within or between racks.

Astera Labs showcased the usage of both products together at FMS 2025, with three Scorpio P-Series switches, Aries 6 retimers, and four Micron 9650 PCIe 6 SSDs achieving over 100 GB/s of throughput with PCIe 6.0.
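A quick sanity check on that 100 GB/s number, as a rough Python sketch. The 64 GT/s per-lane rate is the PCIe 6.0 signalling speed. The x4 link per SSD is my assumption (it is the usual NVMe width, but the demo details are not given here), and the result is a raw ceiling that ignores encoding and protocol overhead.

```python
PCIE6_GT_PER_S_PER_LANE = 64  # PCIe 6.0 signalling rate per lane, per direction


def raw_gbytes_per_s(lanes: int, gt_per_s: float = PCIE6_GT_PER_S_PER_LANE) -> float:
    """Raw one-direction bandwidth in GB/s, before FLIT/FEC and protocol overhead."""
    # One bit per transfer per lane, eight bits per byte.
    return lanes * gt_per_s / 8


SSD_COUNT = 4        # from the FMS 2025 demo described above
LANES_PER_SSD = 4    # assumption: typical x4 NVMe link, not stated in the text
DEMO_GBYTES_PER_S = 100  # "over 100 GB/s" reported for the demo

per_ssd = raw_gbytes_per_s(LANES_PER_SSD)
aggregate = SSD_COUNT * per_ssd
demo_fraction = DEMO_GBYTES_PER_S / aggregate

print(f"Raw ceiling per SSD link: {per_ssd:.0f} GB/s")
print(f"Raw ceiling for {SSD_COUNT} SSDs: {aggregate:.0f} GB/s")
print(f"Demo result as a share of that ceiling: at least {demo_fraction:.0%}")
```

If the x4 assumption holds, four drives top out at 128 GB/s raw, so a measured result above 100 GB/s means the switches and retimers are leaving very little bandwidth on the table.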

This architecture allows massive GPU clusters (200,000+) for model training, with inter-rack optimization on par with intra-rack configurations thanks to the focus on latency - as said above, up to 30% higher GPU usage vs. legacy setups.

More compute, efficient energy usage.

Leo CXL Controllers.

Another semiconductor designed by Astera Labs in order to optimize compute, this time for memory pooling and expansion. Scary words but easier concepts.

As we established many times, AI compute requires data - stored in memory, and compute to train models, which learn by iteration thanks to huge numbers of GPUs repeating tasks on those data sets.

Leo controllers have above-average organizing skills and enable coherent access to those data sets - creating data pools if different hardware require the same data or reorganizing who gets what.

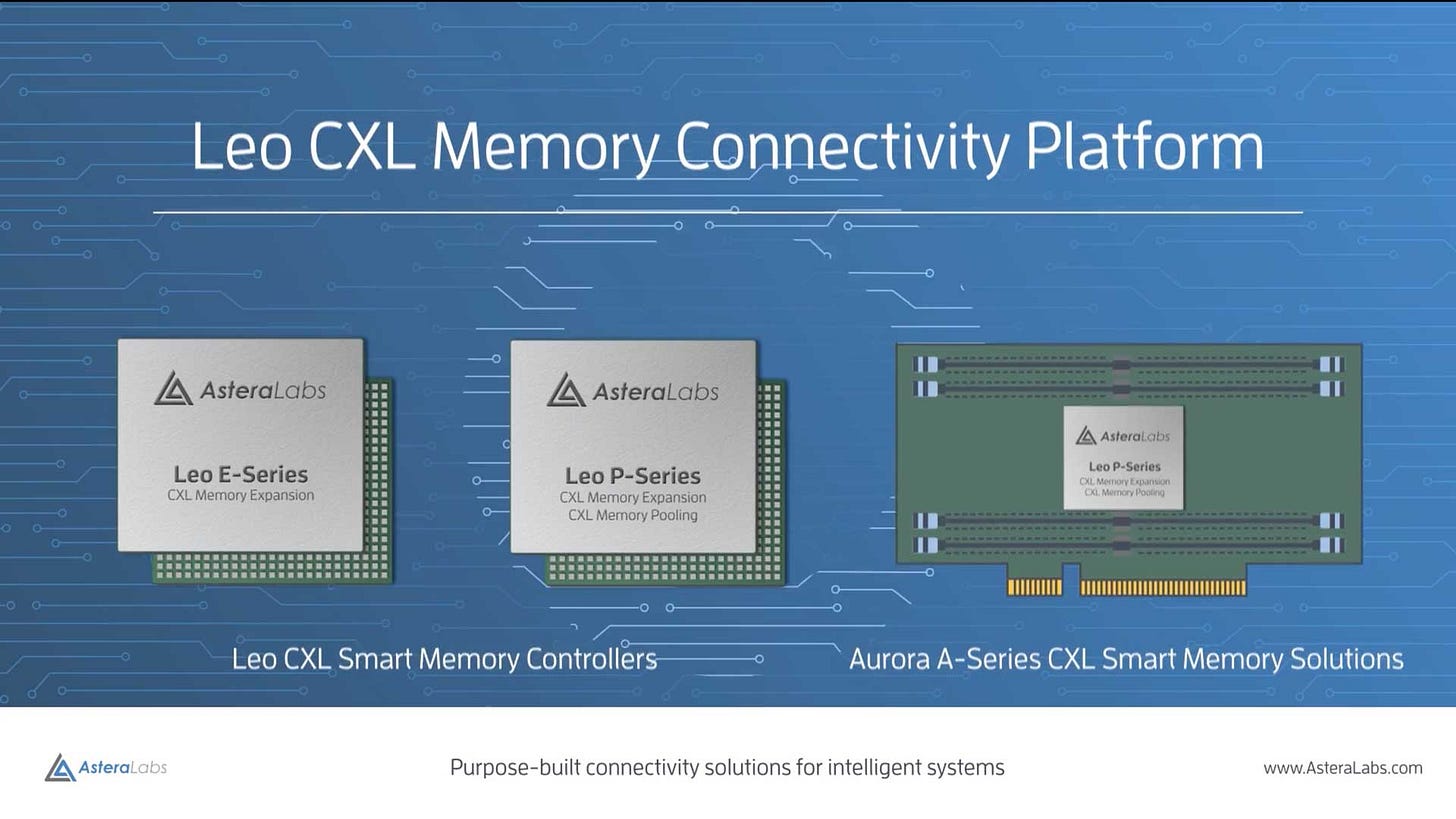
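Here is a toy Python model of why pooling matters, compared with simply adding memory to each host. Every host name and capacity below is hypothetical, and this is only a sketch of the general idea of memory pooling, not a description of how Leo controllers work internally.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    local_gb: int    # memory physically attached to this host
    demand_gb: int   # memory its workload wants right now


def unmet_demand(hosts, extra_per_host_gb=0, shared_pool_gb=0):
    """Total GB of demand left unserved.

    extra_per_host_gb models fixed expansion: each host gets its own extra memory.
    shared_pool_gb models pooling: any host can borrow from one common pool.
    """
    shortfalls = [
        max(0, h.demand_gb - h.local_gb - extra_per_host_gb) for h in hosts
    ]
    return max(0, sum(shortfalls) - shared_pool_gb)


# Hypothetical hosts: one is starved while the others have memory to spare.
hosts = [
    Host("host-a", local_gb=256, demand_gb=400),
    Host("host-b", local_gb=256, demand_gb=100),
    Host("host-c", local_gb=256, demand_gb=200),
]

EXTRA_GB = 192  # same total amount of added memory in both scenarios

baseline = unmet_demand(hosts)
fixed = unmet_demand(hosts, extra_per_host_gb=EXTRA_GB // len(hosts))
pooled = unmet_demand(hosts, shared_pool_gb=EXTRA_GB)

print(f"Unmet demand, no extra memory:  {baseline} GB")
print(f"Unmet demand, 64 GB per host:   {fixed} GB")
print(f"Unmet demand, 192 GB shared:    {pooled} GB")
```

With the same 192 GB added, splitting it evenly still leaves the busy host short, while a shared pool covers it entirely. The memory goes where the demand is instead of sitting idle on hosts that don't need it.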

Leo controllers come in three versions, with different capabilities.

E-Series. Memory expansion for direct host-to-memory connections.

P-Series. Memory pooling and sharing via CXL switches for multi-host access.

A-Series (Aurora). Add-in card (AIC) form factor for plug-and-play deployment.

Here’s what they look like; it’s always good to have an idea of what we’re talking about.

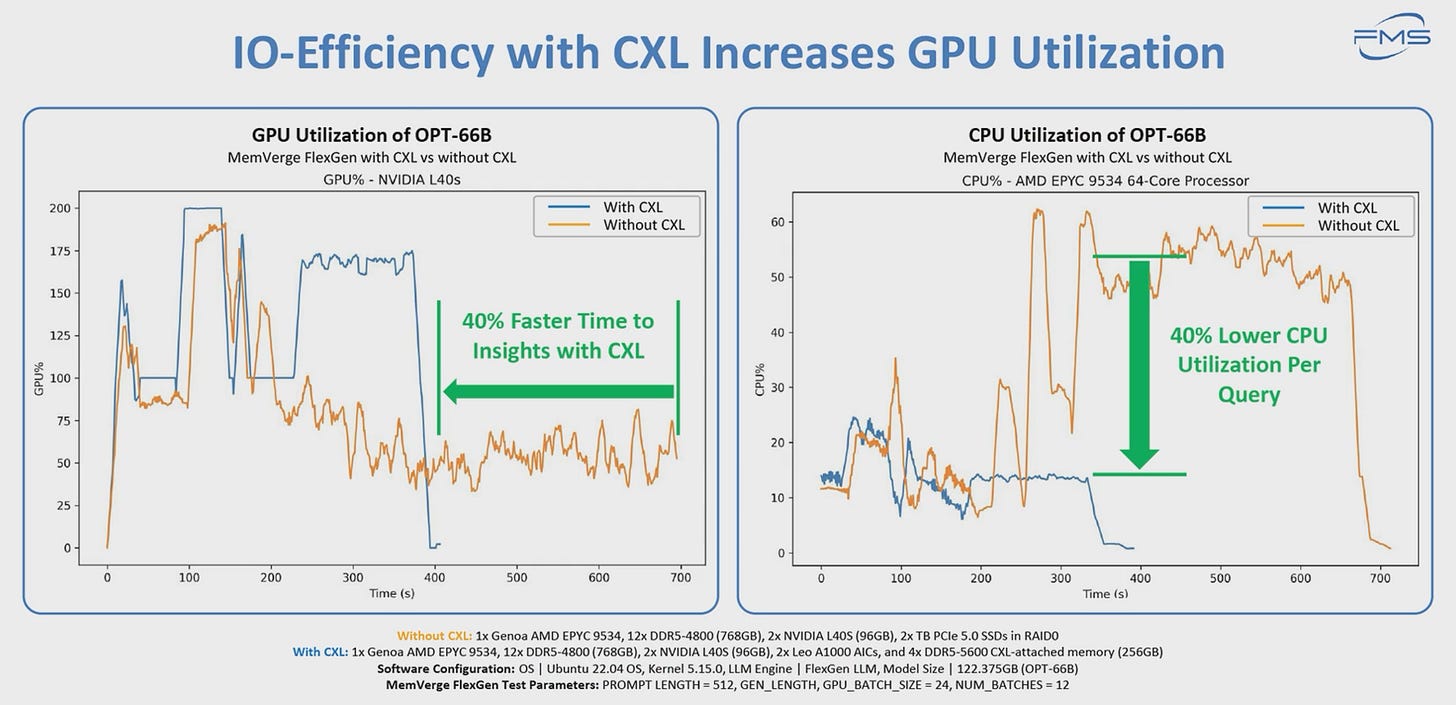

Those semiconductors are compute hardware focused on coherent access to data for GPUs, CPUs, or any computing hardware with the right connections, resulting in much faster data processing - 40% faster inference results compared to classic architectures, according to management.

Here are the results of a test showcased last year, where you can clearly see how CXL - the Leo cards - shortens the time needed to process an inference request, compared to an architecture without it.

This is due to the way Leo cards organize data access. There are many more examples & real-world tests on Astera Labs’ website if you want to go further, for both CPU and GPU usage.

https://www.asteralabs.com/products/leo-cxl-smart-memory-controllers

Taurus Ethernet Modules.

We are back to datacenter products with cables between networking hardware. Taurus handles the connections needed to scale out, between racks within a datacenter.

They are classic Ethernet cables with built-in modules that optimize the link, with, as usual, high bandwidth, low latency, and real-time monitoring.

Astera Ecosystem & Cosmos.

We’ve seen all their products one by one to have a clear view of each, but we should think of them as an ecosystem, with products complementing one another with the objective of optimizing both scale-up and scale-out compute.

Scorpio semiconductors can be paired with Aries to boost data flow between GPUs, or between different hardware in the same or different racks depending on the Aries retimers and SCMs used, while Leo enhances data access for model training and Taurus modules accelerate scale-out towards other network points of the datacenter for compute, storage, or anything else.

Even more important, each piece of hardware comes with customization through their proprietary COSMOS software.

All products integrate with the COSMOS software suite, which offers advanced telemetry, diagnostics, fleet management, and predictive analytics to optimize performance, reduce downtime, and enhance security in cloud-scale deployments.

Software brings more possibilities and deeper customization, which is the standard model nowadays and the reason behind most hardware dominance.

Business Model & Partnerships.

As shared before, Astera Labs is a fabless semiconductor company, which means they engineer their semiconductors and place orders with foundries like TSMC, before selling their hardware to clients, the source of most of their revenues.

Astera was founded in 2017 and went public in early 2024. It is a very young company which focused directly on resolving bottlenecks and optimizing AI computing. But its engineering is not the only reason for its recent success, which came from one specific partnership with Nvidia’s MGX and NVLink platform.

The bottom line is that Nvidia opened its system to modularity & allowed clients to personalize their compute racks with external providers, Astera being one of them. With the new P-Series’ performance, the X-Series ramping up later this year, and Astera’s entire ecosystem being proven efficient, showcasing large compute gains for AI use cases, an easier integration within Nvidia’s compute racks was the last validation stamp and demand ramp Astera needed.

The company’s last quarterly results proved this, as they came in much better than expected thanks to easier access to a really wide market in need of its hardware. Astera now powers the three biggest hyperscalers - Google, Microsoft, and Amazon, and sells more hardware integrated within compute racks through Nvidia, AMD, and Intel, as demand comes in different forms - for finished racks or simple hardware pieces.

Demand is already really large, but their first-mover advantage with PCIe 6.0 is also contributing to it, combined with its numerous patents protecting its technologies.

Risks & Competition.

We’ve seen the company, its products, and business model, but despite strong demand and product specialization, Astera has competition and the common risks of AI datacenters - potential ceiling, slowing spending, lack of return on investments, plus the ones that come with being a small capitalization - stock tied to growth rate, potential competition from giants, dilution...

In terms of competition, the sector is really busy with corporations like Broadcom or Marvell, who both offer retimers, CXL controllers, and other equivalent semiconductors - Arista relies on Broadcom for its switches’ ASICs for example.

The advantage Astera has over both of them is its size and its specialization. Being a small company allows you to move faster - as we’ve seen with rapid engineering for PCIe 6.0 compatibility for example, while its competition is usually focused on the larger market with large demand, not on specialized ones.

As of today, it seems that Astera is moving & innovating faster than Broadcom, which relies more on an already really large demand for its non-specialized semis. But the giants will eventually need to specialize to answer optimization demand, as it will be the differentiating factor in the future, something we’ve seen with Nebius.

Astera is a step ahead of its competition, but that doesn’t mean competition is left behind. Broadcom and Marvell have the capacity to innovate, innovation happens fast lately in the AI domain, and Astera’s advantage could fade in a year or two.

Another risk is customer concentration, as its top three clients are responsible for more than 50% of its revenues, but those clients are either hyperscalers with deep pockets or assemblers like Nvidia, who resell built compute racks. Concentration is normal in high-end tech hardware. So we’d be back to the potential ceiling in AI spending as a risk, more than an issue about revenue concentration.

Lastly, Astera is a semiconductor play, which means it is exposed to potential restrictions, tariffs, and everything related to political decisions - which have been swinging wide lately…

Financials.

As usual for growth companies, financials are not the main story. But here…

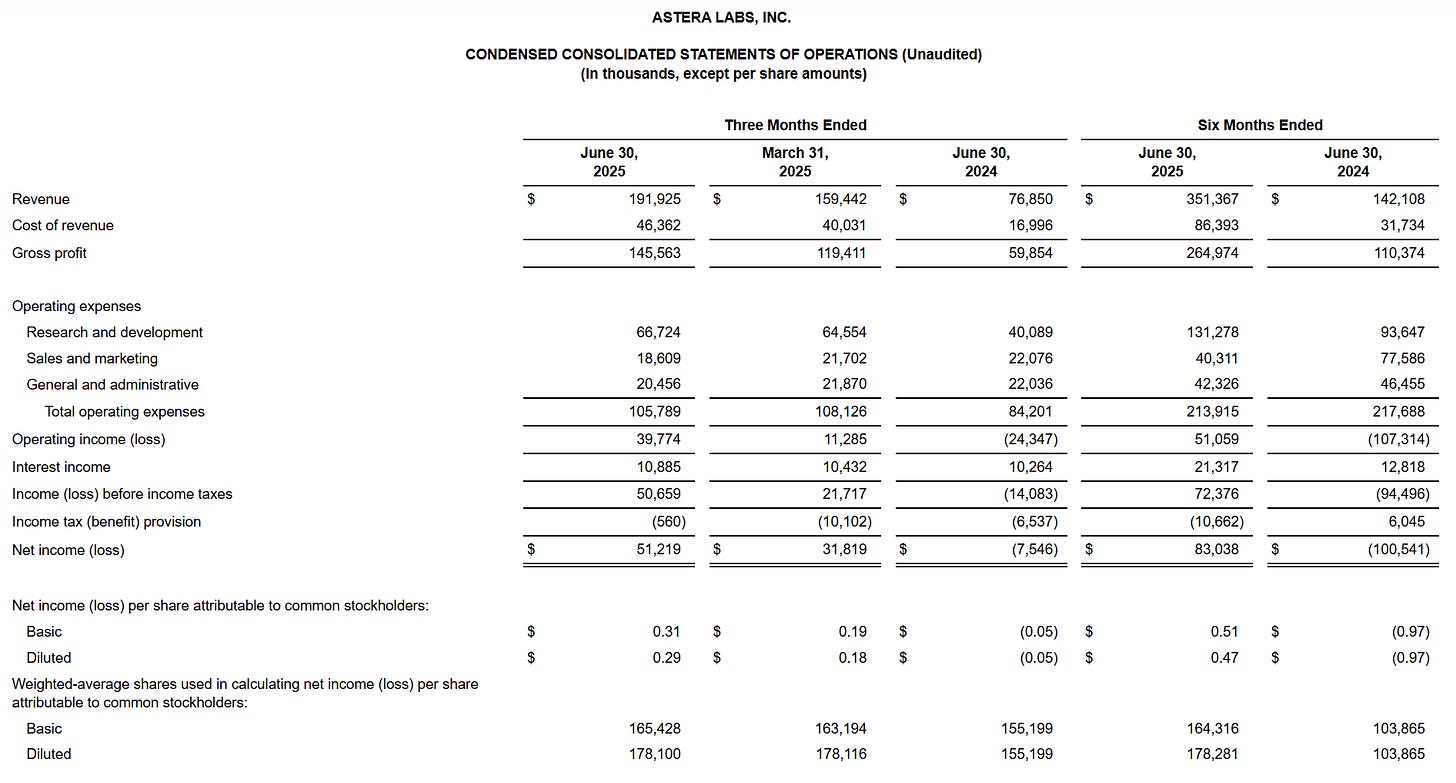

The market went nuts over its 143% YoY revenue growth last quarter, and with reason, as demand is boosted by its new Scorpio P-Series, with the X-Series to be produced at scale during the year, hence many reasons to be positive.

The rest should be classic growth, except for… Profitability. Pretty impressive, as the business itself is profitable, organically. We’re talking about 75% gross margins, as we could expect from a fabless semiconductor company, large R&D expenses, which is what we want to see as we do not want Astera to lose its lead to competition, and reasonable sales and G&A expenses, which shows the company remains focused on spending for tech.

All of this leaves us with a 21% operating margin and a 23% net margin, the latter boosted by interest income from their cash position, which sits at around $1B net of debt thanks to market funding, as shares outstanding rose 73% YoY. Shareholders can’t complain as growth companies need to fund themselves; as long as growth is generated… It’s all good - in my opinion.
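To see what that dilution means for shareholders, here is a small Python sketch that puts the two growth rates side by side. It only uses the figures quoted above (143% revenue growth, 73% more shares, 21% and 23% margins) and assumes both growth rates cover the same period, which is a simplification.

```python
REVENUE_GROWTH = 1.43      # +143% YoY revenue, from the quarter discussed above
SHARE_COUNT_GROWTH = 0.73  # +73% YoY shares outstanding
OPERATING_MARGIN = 0.21
NET_MARGIN = 0.23


def per_share_growth(total_growth: float, share_growth: float) -> float:
    """Growth of a per-share metric when both the total and the share count grow."""
    return (1 + total_growth) / (1 + share_growth) - 1


revenue_per_share_growth = per_share_growth(REVENUE_GROWTH, SHARE_COUNT_GROWTH)

# Net margin above operating margin means non-operating items (here mostly
# interest income, per the text) add more than taxes take away.
margin_gap = NET_MARGIN - OPERATING_MARGIN

print(f"Revenue per share growth: {revenue_per_share_growth:.1%}")
print(f"Net margin minus operating margin: {margin_gap:.0%} of revenue")
```

Even after absorbing 73% more shares, revenue per share still grows by roughly 40%, which is the point: dilution is acceptable as long as growth outruns it.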

A pretty impressive financial position considering how recent the company is, which makes it even more interesting.

Conclusion.

Astera Labs is not a new player in the semiconductor field but is relatively new on the main stage, as their success came with their latest product. The positive is that as this product gets traction, their entire ecosystem will too. When you specialize in compute optimization, if your clients use one piece of your hardware, they have an interest in using your entire ecosystem from A to Z, as it will multiply efficiency compared to pairing one of your products with another manufacturer’s.

So as the Scorpio P-Series gets traction, the Scorpio X-Series attracts interest, and if both continue to showcase strong results, it will create a virtuous cycle for Aries, Taurus, and Leo, and boost growth exponentially.

This should be true as long as demand for AI compute optimization remains strong, and as we’ve seen countless times by now, it should at least for the medium term, barring black swans.

There are still inherent risks to the sector, risks tied to the company’s capitalization & expectations, valuation at time of writing, and competition, as the giants Astera is going against have the means to catch up on its technology. Will they spend to do so, is it in their business plan and interest? That is another question, but it is possible, and if we choose to invest in Astera, we will need to keep a close eye on those giants’ new hardware but also on smaller companies, as innovation goes fast.

The name remains really compelling to me, and I would start a position if I were given an opportunity in the short term. And I strongly believe Astera could be a great long-term hold, at least for the AI datacenter build-up period, which should continue for some years for the reasons described in my Nebius investment thesis.

Which is why I continue to look at AI hardware companies. This new technology is just getting started.
