Arista Networks | Investment Thesis

The AI Datacenter King.

As we continue our dive into the AI hardware world, we’ll go a bit deeper into how datacenters work and how AI has transformed them, creating new opportunities because legacy hardware is not optimized for these new workloads.

As we’ve seen in the ASML & TSM theses, semiconductors are everywhere, and datacenter hardware is nothing but the interconnection of thousands or millions of them with other components, carefully engineered to deliver the required workflows.

Those two giants are the first steps in manufacturing chips; Arista is another step in making AI real, using chips to deliver the best datacenter connectivity possible.

Datacenters Composition.

We’ll have to start with what a datacenter is, as it is a bit of a black box for many. I have a bit of an edge on the subject as a network engineer, but I'll aim to keep it digestible. We’ll start even higher and define what the internet is.

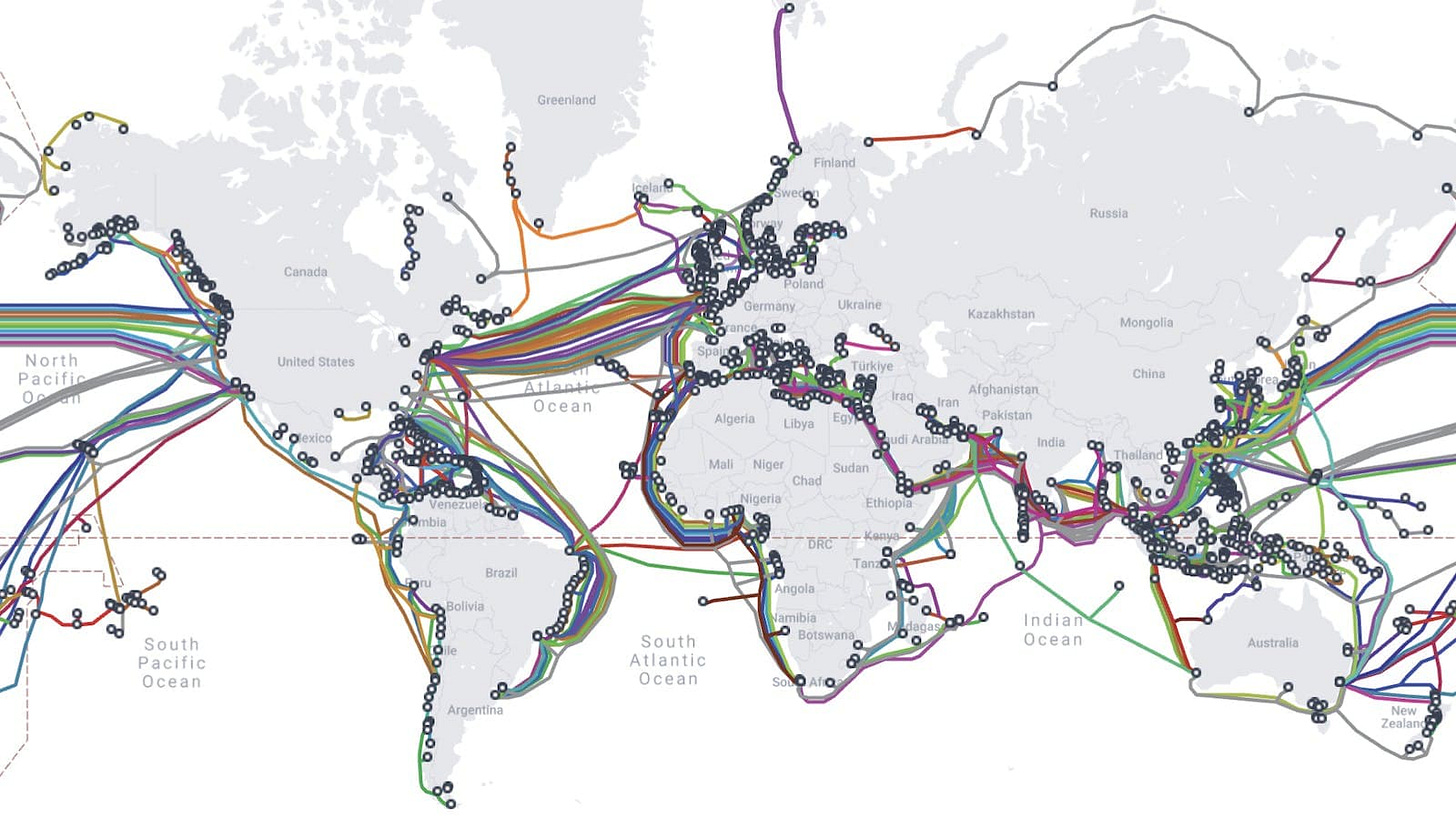

In a few words: the internet is an optimized interconnection of datacenters through physical and wireless links. Most communications travel through physical cables, many of them laid underwater between continents, and many more on land.

Most people are unaware of this.

When you open a browser on your computer or an app on your phone, your requests bounce between datacenters, which ask each other, “Do you have the answer to this, or know who has it?” It works exactly like asking a friend for a quiche recipe: she asks someone else, who asks someone else, until the request reaches the person who has it, and the recipe travels back through the same chain.

The internet works like this. You wouldn’t believe how many kilometers your data travels, or how much hardware it passes through, before your app updates. Everything is optimized nowadays: data does not bounce around randomly, and dedicated systems dispatch requests with great accuracy, accelerated by the technological improvements of recent decades. But I believe we all remember a time when things were much, much slower.
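To make the quiche-recipe chain concrete, here is a toy Python model of it: each node either knows the answer or knows whom to ask next, and the answer travels back through the same chain. The node names, the recipe and the `resolve` function are hypothetical illustrations; real systems such as DNS follow a similar chain of referrals but add caching and many other optimizations.

```python
from typing import Optional

# Hypothetical network: what each node knows directly...
KNOWS = {"datacenter-C": {"quiche-recipe": "eggs, cream, bacon, pastry"}}
# ...and whom each node asks when it does not know the answer itself.
ASKS = {"you": "datacenter-A", "datacenter-A": "datacenter-B", "datacenter-B": "datacenter-C"}


def resolve(node: str, question: str, hops: list[str]) -> Optional[str]:
    """Ask `node`; if it doesn't know, it forwards the question along the chain."""
    hops.append(node)  # record the path the request takes
    answer = KNOWS.get(node, {}).get(question)
    if answer is not None:
        return answer
    next_node = ASKS.get(node)
    if next_node is None:
        return None  # nobody further down the chain to ask
    # The answer comes back through the same chain as the recursive calls return.
    return resolve(next_node, question, hops)


hops: list[str] = []
print(resolve("you", "quiche-recipe", hops))  # eggs, cream, bacon, pastry
print(" -> ".join(hops))  # you -> datacenter-A -> datacenter-B -> datacenter-C
```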

You can compare datacenters to post offices. Letters are sent to one, transit through a few more and pass through hundreds of hands before reaching their destination, all of it invisible to both sender and receiver.

That’s a very rough overview of how the internet works: it interconnects thousands, or millions, of datacenters that query each other nonstop, in an optimized fashion.

And datacenters are composed of different hardware, with different roles, exactly like a post office would have workers with different responsibilities. Some are here to filter, others to organize, others to deliver, etc.

Switches.

They physically connect hardware within a datacenter. They know who is who, what is where, what can go where, what can’t, and make the data flow in the most efficient way, depending on their configuration.

Here’s what a switch looks like.

Each port connects, through a cable, to another piece of hardware that needs to be interconnected with the rest of the network.

You can view networks as neighborhoods within a datacenter or between datacenters, like different teams within a post office: workers interact a lot with their own team and less, or not at all, with others. The same is true for datacenter networks, as some equipment needs to interact with certain devices far more than with others.
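A toy Python sketch of how a switch learns “who is where” and keeps neighborhoods apart. It assumes a simple layer-2 learning switch, with neighborhoods modeled as VLANs; the `LearningSwitch` class, port numbers, VLAN ids and MAC strings are hypothetical, and real switches (Arista’s or Cisco’s) do this in dedicated hardware with far more features.

```python
from dataclasses import dataclass, field


@dataclass
class LearningSwitch:
    """Toy layer-2 switch: learns which port each device (MAC address) sits behind."""
    # Hypothetical mapping: port number -> VLAN id (the "neighborhood" of that port)
    port_vlan: dict[int, int]
    # (vlan, mac) -> port, filled in as frames arrive
    mac_table: dict[tuple[int, str], int] = field(default_factory=dict)

    def receive(self, in_port: int, src_mac: str, dst_mac: str) -> list[int]:
        """Return the ports a frame is sent out of."""
        vlan = self.port_vlan[in_port]
        # Learn: the sender is reachable through the port the frame came in on.
        self.mac_table[(vlan, src_mac)] = in_port
        known_port = self.mac_table.get((vlan, dst_mac))
        if known_port is not None:
            # Known destination: send out of that single port (nothing if it is the ingress port).
            return [] if known_port == in_port else [known_port]
        # Unknown destination: flood to every other port of the same VLAN only.
        return sorted(p for p, v in self.port_vlan.items() if v == vlan and p != in_port)


sw = LearningSwitch(port_vlan={1: 10, 2: 10, 3: 10, 4: 20})
print(sw.receive(1, "aa:aa", "bb:bb"))  # [2, 3]: unknown destination, flood within VLAN 10
print(sw.receive(2, "bb:bb", "aa:aa"))  # [1]: aa:aa was learned on port 1
print(sw.receive(1, "aa:aa", "bb:bb"))  # [2]: bb:bb is now known too
```

Port 4 belongs to another neighborhood (VLAN 20), so it never sees traffic flooded inside VLAN 10.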

Switches are aggregators.

Cisco is the best-known and most widely used manufacturer; you will find their hardware in every large company’s datacenters.

Firewalls.

They are the network’s gatekeepers. They allow or block data flowing within the network, acting like a club’s bouncers. You’re too young? Go back to bed.

They come as hardware appliances, as software installed on a server within the datacenter, or even as SaaS managed by a third party.
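A minimal Python sketch of the bouncer logic, assuming the common first-match convention with a default “deny” when no rule matches. The `Rule` class, the `evaluate` function and the example subnets are hypothetical; real firewalls match on many more fields (protocol, application, user) and keep track of connection state.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    """One hypothetical firewall rule."""
    action: str              # "allow" or "deny"
    src_network: str         # source addresses, in CIDR notation
    dst_port: Optional[int]  # destination port; None matches any port


# Hypothetical policy: quarantine one subnet, allow HTTPS from the internal network.
RULES = [
    Rule("deny", "10.0.99.0/24", None),
    Rule("allow", "10.0.0.0/8", 443),
]


def evaluate(rules: list[Rule], src_ip: str, dst_port: int, default: str = "deny") -> str:
    """Return the action of the first matching rule, or the default if none match."""
    ip = ipaddress.ip_address(src_ip)
    for rule in rules:
        in_network = ip in ipaddress.ip_network(rule.src_network)
        port_matches = rule.dst_port is None or rule.dst_port == dst_port
        if in_network and port_matches:
            return rule.action
    return default


print(evaluate(RULES, "10.1.2.3", 443))     # allow: internal host reaching HTTPS
print(evaluate(RULES, "10.0.99.5", 443))    # deny: quarantined subnet matches first
print(evaluate(RULES, "203.0.113.7", 443))  # deny: no rule matches, default applies
```

Rule order matters here: because the quarantine rule comes first, a quarantined host is turned away even though it also sits inside 10.0.0.0/8.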

Many vendors have led the market over the years: Check Point, Cisco, Fortinet, Juniper… Nowadays, the best solution seems to come from Palo Alto, and we’ll talk about it in detail in my investment thesis on the company in a few weeks.

Routers.

Routers are the brain of a network. They can be compared to Google Maps: they know where each piece of equipment is and how to reach it efficiently.

Dedicated routers are less and less common, as routing logic is now integrated into switches and firewalls, but dedicated routing hardware is still necessary in specific cases.
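The Google Maps comparison is fairly literal: link-state routing protocols such as OSPF compute routes by running Dijkstra’s shortest-path algorithm over a map of the network. A minimal Python sketch, assuming a hypothetical four-node topology with made-up link costs; the `shortest_paths` function is an illustration, not any vendor’s implementation.

```python
import heapq

# Hypothetical topology: node -> {neighbor: link cost}
TOPOLOGY = {
    "A": {"B": 1, "C": 4},
    "B": {"A": 1, "C": 2, "D": 5},
    "C": {"A": 4, "B": 2, "D": 1},
    "D": {"B": 5, "C": 1},
}


def shortest_paths(graph: dict[str, dict[str, int]], source: str) -> dict[str, tuple[int, list[str]]]:
    """Dijkstra: cheapest cost and path from `source` to every reachable node."""
    dist = {source: 0}
    prev: dict[str, str] = {}
    heap = [(0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry, a cheaper route was already settled
        visited.add(node)
        for neighbor, cost in graph.get(node, {}).items():
            candidate = d + cost
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                prev[neighbor] = node
                heapq.heappush(heap, (candidate, neighbor))
    # Rebuild each path by walking the predecessor links back to the source.
    result = {}
    for node, d in dist.items():
        path = [node]
        while path[-1] != source:
            path.append(prev[path[-1]])
        result[node] = (d, path[::-1])
    return result


for node, (cost, path) in sorted(shortest_paths(TOPOLOGY, "A").items()):
    print(node, cost, " -> ".join(path))  # e.g. D 4 A -> B -> C -> D
```

Note how the route to D avoids the direct but expensive B-D link: like a GPS, the router picks the cheapest path, not the one with the fewest hops.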

Other.

There are many more pieces of hardware (servers, cables, load balancers, etc.), but these three are enough for the rest of this write-up and for an overall understanding of a datacenter and how it works.

Switches physically interconnect hardware within a datacenter, including connections with other datacenters; routers will map the networks and know who is where & how to reach any resource efficiently, and firewalls control data flow to secure the network, from within and outside.

Artificial Intelligence Datacenters.

AI datacenters require the same hardware: switches, firewalls, routers, servers & co. What changes are their requirements and optimizations, as AI datacenters’ needs are not equivalent to traditional ones.

Classic datacenters focus on general compute, but AI datacenters shuffle terabytes of data between nodes, where microseconds of delay add up to hours of lost productivity and higher costs.

The most important factor for AI datacenters is data flow: speed & quantity. They need more data at a faster pace, leading to faster-trained models & faster inference answers, both being the ultimate goal for players in the field. And they need it with efficient energy usage, to offer this service at the best prices.

These new requirements led to a complete reshaping of datacenters, which started a few years ago with Nvidia’s GPU clusters as their new sun, requiring more efficient hardware in between so that those GPU clusters can communicate at the fastest possible speed.

Higher bandwidth. Lower latency. More efficient energy consumption.

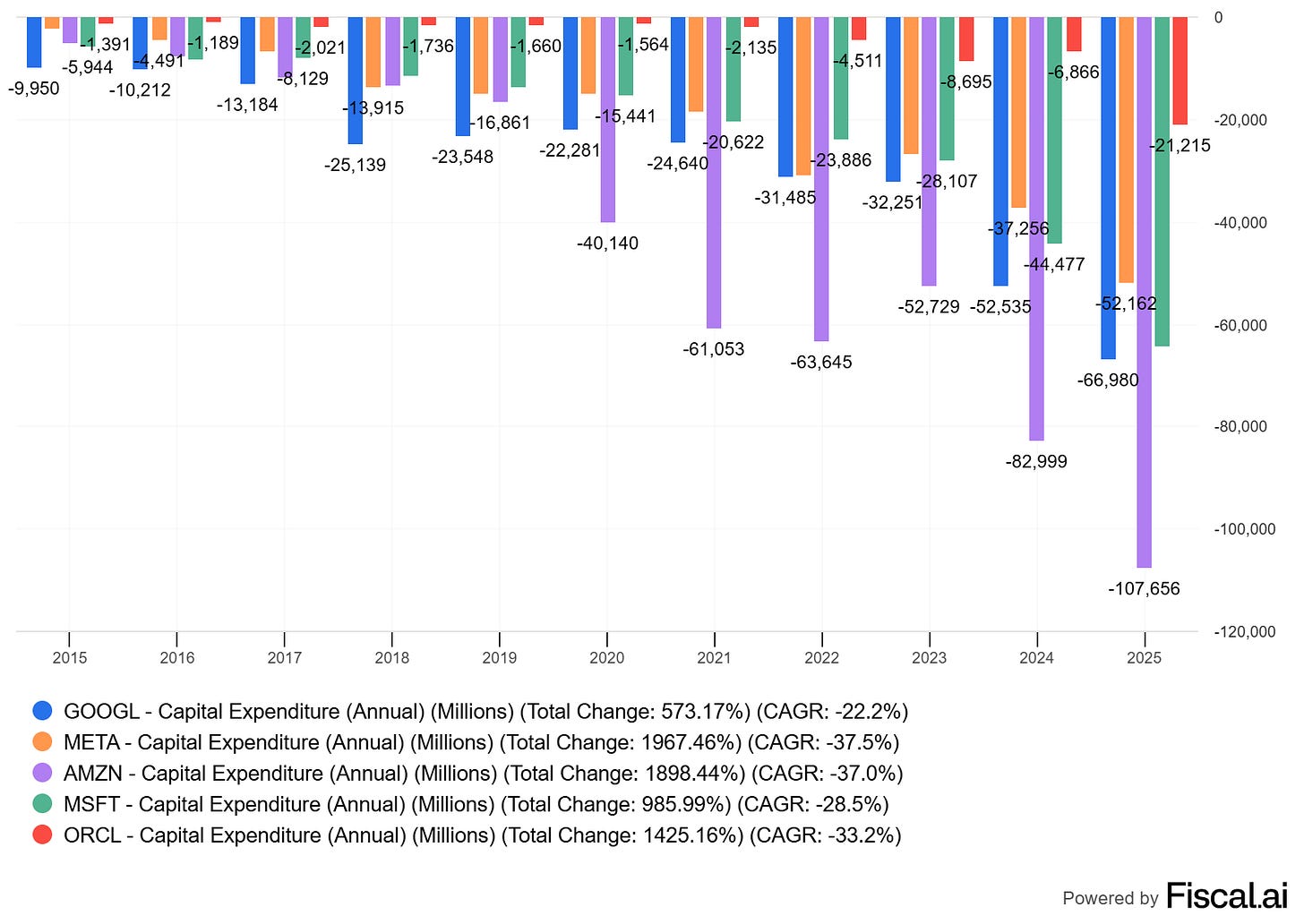

Both neoclouds and hyperscalers are building those datacenters to meet these new needs, and it is expensive, as shown by their already large and growing CapEx.

It is hard to know how far along we are in building those datacenters and how much more compute is needed to bring AI services to everyone. What is certain is that they do not have enough yet. It doesn’t mean that CapEx will continue to grow at this pace indefinitely, but over the long term, we need either more datacenters or better-optimized ones.

Both hyperscalers and neoclouds are planning to increase CapEx next year, mostly on datacenter hardware, as most of them continue to say that they do not have enough compute to meet demand. And others will follow in order to remain competitive in the most important revolution of the decade.

Some analysts estimate Arista’s market could grow from $10B-$15B in FY24 to more than $50B by FY30. I don’t trust analysts, but the trend is clear. Cyclicality is to be expected, but demand for optimized hardware will continue to grow.
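A quick way to read that estimate is the annual growth rate it implies. A minimal Python sketch, assuming six years between FY24 and FY30 and using only the figures quoted above; `implied_cagr` is a hypothetical helper for the standard compound annual growth rate formula.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to go from `start` to `end` in `years`."""
    return (end / start) ** (1 / years) - 1


# Market size in $B: low and high ends of the FY24 estimate, growing to $50B by FY30.
for start in (10, 15):
    print(f"${start}B -> $50B over 6 years: {implied_cagr(start, 50, 6):.1%} per year")
# $10B -> $50B over 6 years: 30.8% per year
# $15B -> $50B over 6 years: 22.2% per year
```

In other words, the estimate implies the market growing roughly 22% to 31% per year, and faster if it indeed ends up above $50B.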

Arista Networks.

Arista is a switch manufacturer whose technology targets a niche use case, built on specialized chips (ASICs) optimized for AI and cloud workloads. They also sell routers and other services focused on datacenter networking.

If you don’t know what ASICs are, you’ll find answers here.

Arista offers what every AI-focused datacenter is looking for: high bandwidth, low latency and efficient energy consumption, without proprietary lock-in. That last point matters, as the early stages of AI require experimenting with different partners & technologies.

Hardware.

I will not go through all the hardware Arista offers, as it isn’t relevant from our perspective. It would be at an earlier investing stage, but the market has confirmed that they are the best at what they do and that their clients are satisfied, so we don’t really need to dig deep and understand it all.

Arista offers many different switches, routers and platforms for both AI and classic datacenters. Companies will find what they need, as Arista’s solutions focus on latency, throughput, bandwidth, etc., covering a wide range of use cases.

Software.

Like Nvidia’s CUDA, Arista’s EOS is part of what sets its offering above the competition.

“Through its programmability, EOS enables a set of software applications that deliver workflow automation, high availability, unprecedented network visibility and analytics and rapid integration with a wide range of third-party applications for virtualization, management, automation and orchestration services.”

Once again, I won’t go deep into the details as what matters is the adoption trend and customers’ feedback, which both show that Arista is the better system.

Customer Base and Partnerships.

The market has confirmed Arista’s hardware dominance, with growing market share in its sector and a customer list containing the most prestigious companies, AI-focused and otherwise.

I won’t list them all or take screenshots; you will find the list at the link below if interested, but know that the biggest hyperscalers are there, alongside the biggest tech/security names and many others…

https://www.arista.com/en/partner/technology-partners

A first proof of Arista’s dominance.

Financials.

I continue to believe we do not need the deepest technical understanding in the market; data is often enough to tell us what we need. What matters to me is to have an overall understanding of how important a product is and let the market tell me the rest.

What does it tell us about Arista?

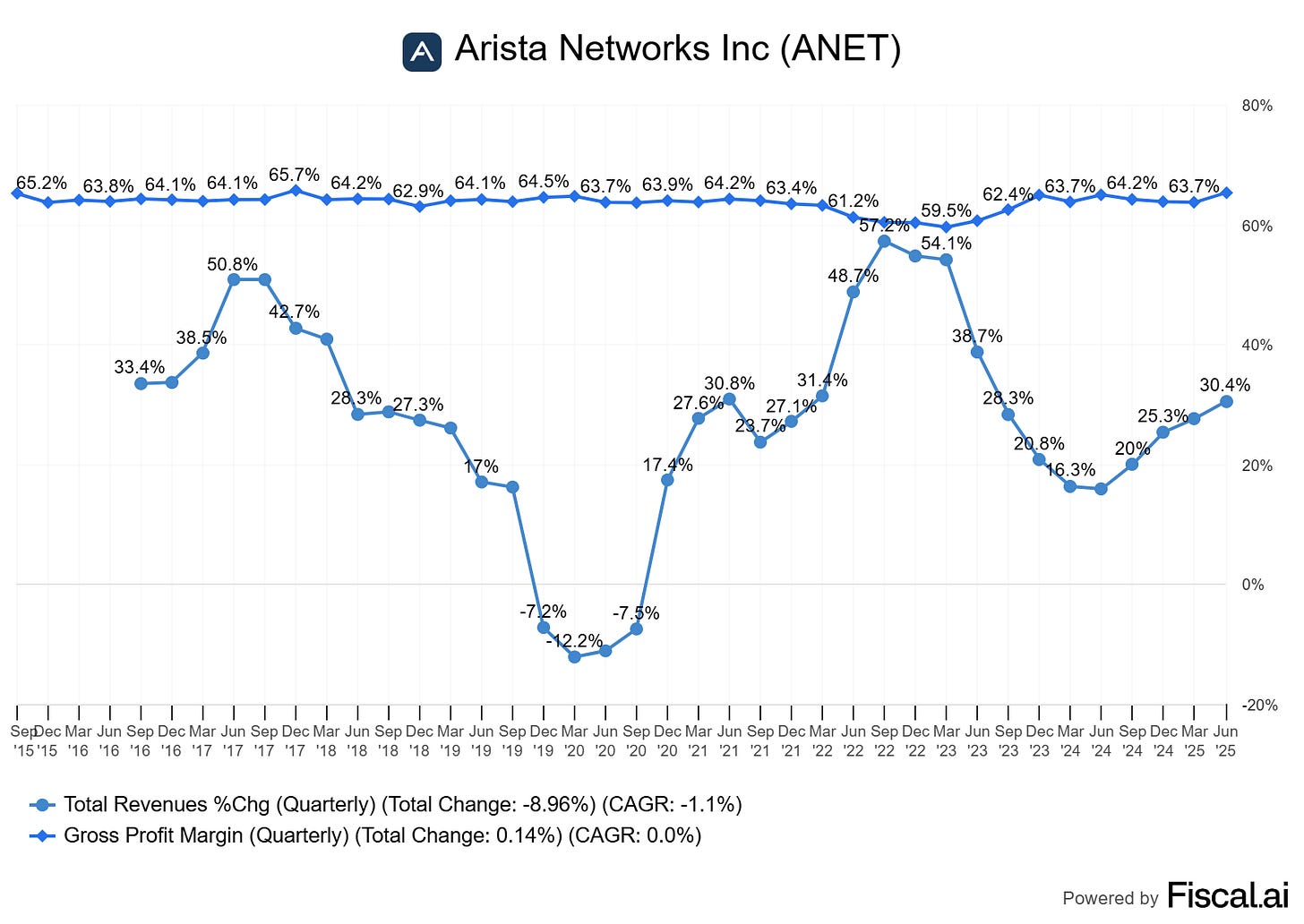

That everyone wants their products, which results in cyclical growth, as for any tech hardware company. And that clients are ready to buy them at pretty high margins, again like any dominant tech manufacturer. Strong demand creates pricing power.

This alone is proof of dominance. It gets better when compared with the industry leader, Cisco, which has maintained its margins above 60% but hasn’t seen consistent double-digit growth in decades. That shows a clear shift in demand, as Cisco apparently doesn’t meet AI-focused datacenters’ needs.

You don’t need to understand in detail how the hardware works to reach a bullish conclusion.

To go further on the financials before talking about the product’s stickiness: revenue is growing very well and margins are very strong, at 45%+ net margins, so cash generation is strong too, resulting in $8.8B of net cash.

Management is focused on growing the business rather than returning much to shareholders for now, with an emphasis on M&A: the acquisition of VeloCloud, whose price hasn’t been disclosed yet, follows others in recent years.

Switching Costs.

We’ve seen what the financials say about the business: faster growth than the industry leader & large margins, meaning strong demand & pricing power. The second question is about stickiness and the customer base.

Can customers migrate to new hardware easily?

If so, demand can easily shift towards whichever vendor is best at a given point in time, and it would be risky to invest in Arista without a clear understanding of what makes it better than its competition. If the product is sticky and we can understand why, the combination of strong financials and stickiness is enough, to me at least.

And guess what? It is.

Again, switches are datacenter aggregators, which means every piece of data passes through one or many of them at any point in time. They are expensive hardware & need to be installed in volume. Their configurations are intertwined and rely on an overall configuration for the entire infrastructure. They include proprietary software with specific functions that work better, or at least reach their full potential, when an entire datacenter relies on the same vendor. And they require specific skills that take time to train people on.

At two different companies, I have worked on migrating network systems from one vendor to another. We’re talking about really large companies, hence large datacenters. Both projects took a few years and cost many millions in hardware alone, not including training, engineering hours, planning, etc.

Large companies do not change core networking systems unless they are forced to, or unless the new system is worth the time & costs: a clear upgrade in terms of efficiency or security.

Networking & security hardware/software is massively sticky. So if you’re the best at it and do not become complacent, as Cisco did, you should have wonderful years ahead. Even in Cisco’s case, it took decades and an AI revolution for its industry leader status to be challenged.

Conclusion.

We’ve seen the reasons why I am bullish on Arista: strong recognition of its quality in an exploding sector, growing market share, a top-tier partner list, and strong demand for its products resulting in pricing power and high margins. Even without understanding the technicalities behind it, we can conclude that it is an amazing company with an amazing service.

Without understanding the technicalities, we need to watch for any sign of a new player taking market share. Arista’s products are sticky and it shouldn’t happen in the next six months, but eventually they will be challenged. It could come from Cisco, from Nvidia, which is also developing data flow optimization, or from a new player, as the AI datacenter space is still new and many innovations are taking place; we’ll talk about one very soon.

Secondly, as with any AI hardware play, we have no idea of the spending ceiling for hyperscalers and others, of datacenters’ real needs, or of how those expenses will convert into revenue. I am convinced AI will create monetization opportunities, but it will take time to be confirmed, and spending could slow until then. AI hardware plays come with cyclicality. Over the next decade, Arista will certainly have slower years as companies figure out whether they need more compute, before deciding they definitely do, as has always happened.

Arista is an excellent company, the go-to for AI networking hardware: a sticky product with high demand. As a long-term play, we’ll need to take advantage of cyclicality and always keep an eye on potential competition and on data points showing a loss of market share or pricing power.

As long as this is done seriously, I personally have very high conviction in this name.
