Nebius | Investment Thesis

The most optimized AI compute.

I have changed my view of Nebius over the past few months. What I initially believed to be a good narrative trade has become a long-term position, as management and their execution have convinced me that they could be here for the long haul.

It’s time to review my write-up and dive deeper into the subject.

My Previous Doubts.

Let’s start with my initial bear case and why I didn’t believe Nebius was a good long-term hold. I shared this back in March but will detail it again.

It centered on commoditization. I believed Nebius was renting GPUs, like many other companies, including hyperscalers. Over time, this kind of market is usually overtaken by larger players once they secure enough supply. This is what happened in the cloud market, and I saw no reason why it wouldn’t happen in the AI compute market.

In the short term, Nebius has a partnership with Nvidia granting early access to its hardware, which gave it an advantage. Once compute demand reaches a ceiling or Nvidia's hardware becomes widely available to other providers, I expected demand to shift back toward hyperscalers, as they are more practical, with a wider range of services.

I assumed Nebius would ride the early training & inference wave thanks to this access to new hardware and to demand far exceeding global supply, but would fade once the market stabilized.

My analysis was partly on point: even Roman Chernin, Nebius' Co-Founder and Chief Business Officer, acknowledged in an interview that it's their job to differentiate in an industry that sells a commodity:

Long term it will depend fully on us, how successful we'll be in penetrating a more enterprise customers who actually cares about much more than just getting access to GPUs, and if we fail with that it's our problem, we'll just be commoditized anyway, because if you just provide GPUs, it's a commodity.

This quote is from an interview conducted by M. V. Cunha at the end of August 2025. It’s one reason I changed my view on the company: they are aware of the challenges and what needs to be done. After following them for months, it’s clear they are focused on executing their stated goals.

The second reason for my shift is that, a few months ago, I didn't fully grasp the importance of optimization & personalization for AI model training and inference. I knew some level of optimization was necessary, but I didn't appreciate its magnitude. One extra second of compute per task or slightly higher energy consumption can make the difference between an economically viable service and a failure.

So it’s time to revisit the company. This time, I’ll explain why Nebius could stand, for the long term, in a commoditized industry where optimization is everything.

Before We Start.

There are a few conditions to accept before reading this write-up. They could sit in a dedicated "risk" section, but I'd rather start with them, as they are core beliefs that must be held for the investment to make sense.

AI is real. If you’re reading this, I assume you’re already convinced, as lots of my content focuses on AI. But it goes beyond just being “real.” You must believe AI will transform our technologies and has the potential to be monetized, creating an entire economy based on its services.

The market will be fragmented. It is right now, with many different models used by various actors and more innovations emerging. For Nebius to succeed, this fragmentation must persist, as a standardized industry wouldn’t require our third core belief.

Optimization will be the differentiating factor. A multitude of AI models will be used for different purposes. There may be only one or two models per purpose, but thousands of purposes would lead to thousands of models, each requiring specific expertise to be optimized for their specific use case.

As shared above, I didn't fully embrace the third belief a few months ago. I do now. If you don't share these beliefs, which can't be proven with today's data, Nebius may not be for you, as you'll likely conclude its services will be commoditized.

Nebius’ investment thesis relies on these three core beliefs. I’ll now detail why.

Nebius History.

I don't usually cover historical context, but it matters here, as Nebius' legacy gives the company an unfair advantage in its market.

The story starts with Yandex, a Russian company often compared to Google as it operates Russia's largest search engine, and its Dutch holding company, Yandex N.V., which had to restructure after the war in Ukraine began in 2022, as sanctions prevented its Western business from operating properly.

Yandex N.V. was ultimately forced to sell its Russian assets to Russian investors, and what remained became a new company called Nebius, headquartered in Amsterdam, with no remaining ties to Russia after detailed inspections by many government bodies. This has to be clear, as it remains a bear case for some: the scrutiny and thorough process the company went through should be enough to certify that all ties to Russia were cut. If that isn't enough, the trust placed in Nebius by multibillion-dollar American companies should be, as they wouldn't use Nebius if the slightest doubt persisted.

This restructuring, completed in late 2024, gave Nebius significant tailwinds.

Competent Teams.

Probably the most important one. Yandex was a tech company with talented teams based in two major hubs: Amsterdam and Israel. These teams, including a thousand engineers or so with expertise ranging from datacenter hardware to software and AI research, became part of Nebius post-restructuring. Once again, Yandex was called the Russian Google, hence highly talented teams.

While many startups struggle to attract talent, often losing out to hyperscalers with deeper pockets (as Meta's recent hiring spree confirmed), Nebius inherited highly competent engineers. Many had Russian ties, which, as a personal assumption, likely limited their opportunities in the geopolitical climate of the time. Most chose to stay with Nebius to build something new, made possible by the second tailwind.

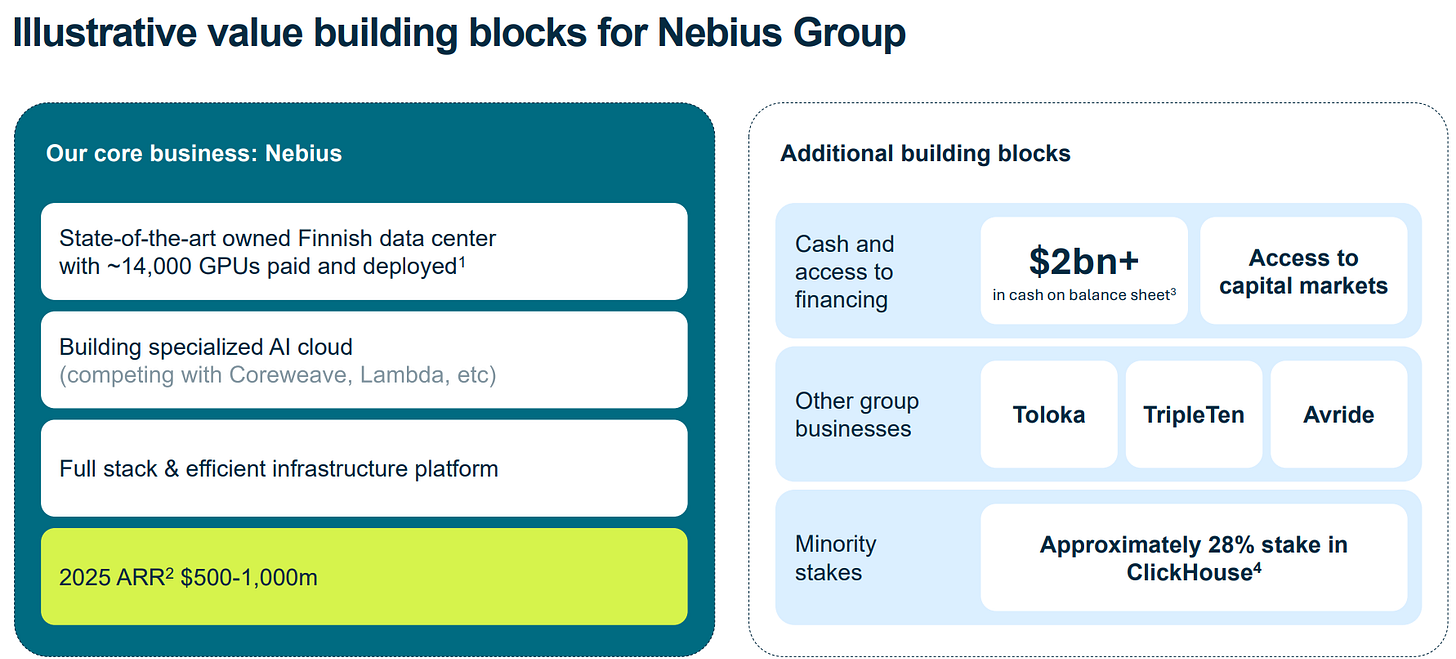

Cash & Investments.

As part of the asset sale, Yandex N.V., now Nebius, received approximately $2.8 billion in cash, an unusual position for a startup. It also retained a Finnish datacenter optimized for energy efficiency (more on this later), home to the 16th most powerful supercomputer in the world.

Last but not least, it kept Yandex N.V.'s investments, ClickHouse and Toloka, and two international subsidiaries, TripleTen and Avride, which I'll discuss later.

In Brief.

Nebius started with tailwinds typically reserved for hyperscalers.

These assets allowed the company to get started easily, with a datacenter ready to use, cash to expand, and equity stakes and subsidiaries that make it easier to fund itself, either through the market, which it has already done, or through conventional debt, which it hasn't yet.

Most startups would need to take on debt to start such a business, CoreWeave being the perfect example. Nebius didn't have to.

Neoclouds & Hyperscalers.

Two terms that sound complex but refer to similar businesses: renting compute to those who need it. The difference lies in scale and specialization.

Hyperscalers focus on the largest portion of the market, high-volume training and inference demand without much specialization. They dominate the commodity market, and their services are often interchangeable.

We’re talking about Google, Microsoft, Amazon, Oracle & co.

Neoclouds are specialized hyperscalers. They don’t compete on volume, as they can’t match hyperscalers’ supply. Instead, they focus on specific needs unmet by their competition.

Nebius falls into this category, alongside CoreWeave, its biggest competitor.

That said, hyperscalers have the capacity to specialize and offer neocloud-like services, while neoclouds will likely never achieve hyperscaler scale. This brings us back to my bear case: depending on how the AI market evolves, specialized compute demand could eventually be met solely by hyperscalers.

Compute Demand & Supply.

Today, demand far outpaces supply, giving room for both hyperscalers and neoclouds. The question of market dominance will come later, and this write-up will detail why I believe Nebius could emerge as a winner regardless.

Let’s first focus on demand and supply to show why this supply constraint will persist, giving Nebius time to scale and prove its worth.

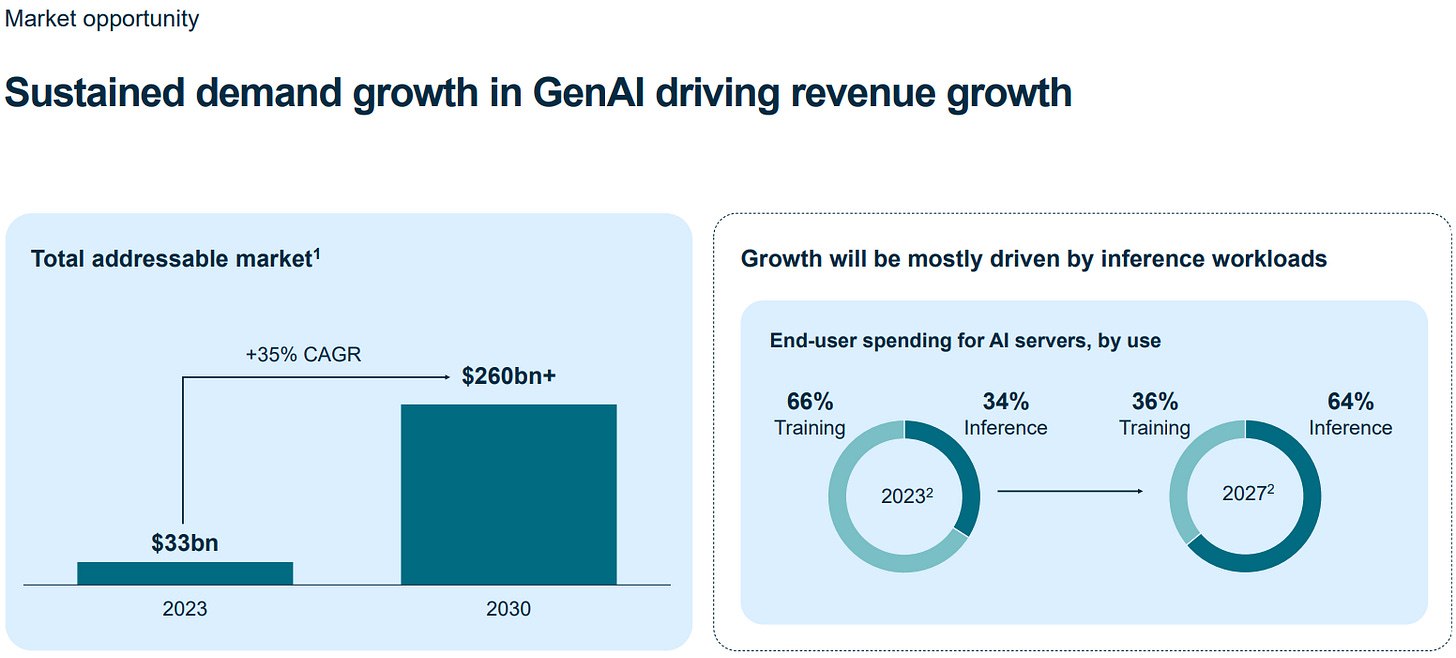

On the demand side, AI exploded after models proved their efficiency, confirming opportunities across various sectors. This triggered massive and rapidly growing demand for model training, first.

FLOP (floating point operations) counts computations, while FLOPS (FLOP per second) measures compute performance. This graph shows the total FLOP used to train each model, with Gemini 1.0 Ultra the most compute-intensive, requiring on the order of 10^26 FLOP for its training. Beyond the immense compute required, the number of models trained annually & their size have multiplied over the past decade, especially post-2020. This graph includes only a few of them.

Grok estimates it took around 57,000 TPU v4s over a full quarter to train Gemini 1.0 Ultra. Google has roughly 600,000 TPUs, all versions combined. These numbers aren't precise, but they illustrate that training one model tied up roughly 10% of Google's TPUs for a quarter, nonstop. This was their most advanced model, but it is still only one model.
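The back-of-envelope estimate above can be reproduced in a few lines. The 57,000 and 600,000 chip counts are the Grok estimates quoted in the text. The 90-day quarter, the 275 TFLOP/s bf16 peak per TPU v4 chip and the 40% utilization are my own assumptions, added only to show how a chip count turns into total training FLOP.

```python
# Back-of-envelope check of the Gemini 1.0 Ultra training estimate.
# Chip counts are the Grok estimates quoted in the text; the rest are assumptions.

TRAINING_CHIPS = 57_000        # TPU v4 chips used for training (Grok estimate)
GOOGLE_FLEET = 600_000         # Google's total TPUs, all versions (Grok estimate)
DAYS = 90                      # assumption: one quarter of nonstop training
PEAK_FLOPS_PER_CHIP = 275e12   # assumption: TPU v4 bf16 peak, FLOP per second
UTILIZATION = 0.4              # assumption: share of peak actually achieved


def fleet_share(used: int, total: int) -> float:
    """Fraction of the chip fleet tied up by one training run."""
    return used / total


def training_flop(chips: int, days: float, peak: float, utilization: float) -> float:
    """Total floating point operations (a count, not a rate) delivered over the run."""
    seconds = days * 24 * 3600
    return chips * peak * utilization * seconds


share = fleet_share(TRAINING_CHIPS, GOOGLE_FLEET)
total = training_flop(TRAINING_CHIPS, DAYS, PEAK_FLOPS_PER_CHIP, UTILIZATION)
print(f"Share of fleet: {share:.1%}")  # 9.5%
print(f"Total training compute: {total:.1e} FLOP")
```

With these assumptions the run lands at about 4.9 × 10^25 FLOP. At 100% utilization it would reach about 1.2 × 10^26, which is the same order of magnitude as the figure in the graph.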

This shows that compute demand for training has grown exponentially, as has the number of models being trained, with more companies seeking to develop their own models for specific purposes.

The second key point is that this graph covers only training, not inference. Training a model is like a human learning; inference is like a human acting. Training is useless without actions, and inference requires compute to convert training into actions in any format: text, image, video, sound, physical action...

Inference is expected to grow much faster and become a larger market than training over the next few years, as models are deployed for real-world use cases serving hundreds of millions, probably billions, of users.

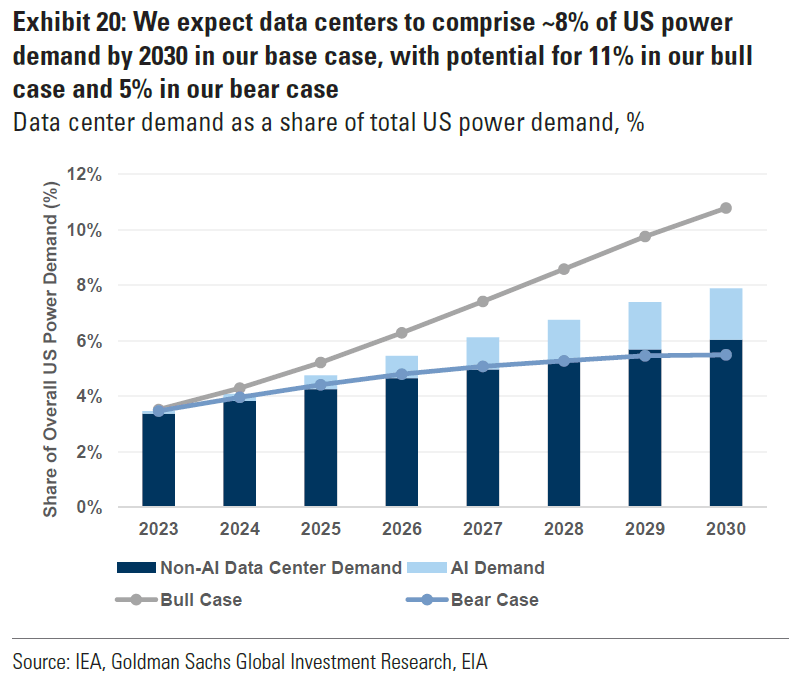

Meanwhile, supply remains constrained. Providing compute isn't just about plugging GPUs into power sources. It requires infrastructure (datacenters & power sources), hardware (GPUs and everything needed for efficient networking), which takes time to manufacture, and engineering expertise to build, configure and optimize datacenters for clients' needs. This entire chain takes months, assuming the land is ready, and can take a year or more depending on energy sources and infrastructure.

The supply chain involves securing land, energy and hardware, and building datacenters. We do not have enough physical datacenters and energy sources ready to be plugged in today, so this constraint will persist.

I have no idea how fast demand will grow, but recent years suggest it will grow fast. Hence the need for new energy sources and infrastructure: other industries won't cut their consumption so that datacenters can use it. We need net growth in energy production, and building new infrastructure takes time.

Companies must tackle this issue before adding more compute. I’ll discuss this further in another investment thesis about nuclear energy.

There are many more arguments to illustrate compute’s growing demand, and more arguments to show that it’ll take time to get there, but we will focus on Nebius now. I hope I’ve clarified the current situation & why I believe supply constraints will persist for at least a few more quarters, likely years.

Business Model.

Having established the demand for Nebius’ services - for hyperscalers & neoclouds at large, let’s explore how they make money. It’s simple, which I appreciate: they rent compute.

While strategies vary, Nebius’ management works closely with clients with a business model that benefits all parties. As Roman Chernin said:

We have very opened discussion with our customers about their business model… No one wins if we overprice GPU usage and they in turn overprice their service to a point no one buys it.

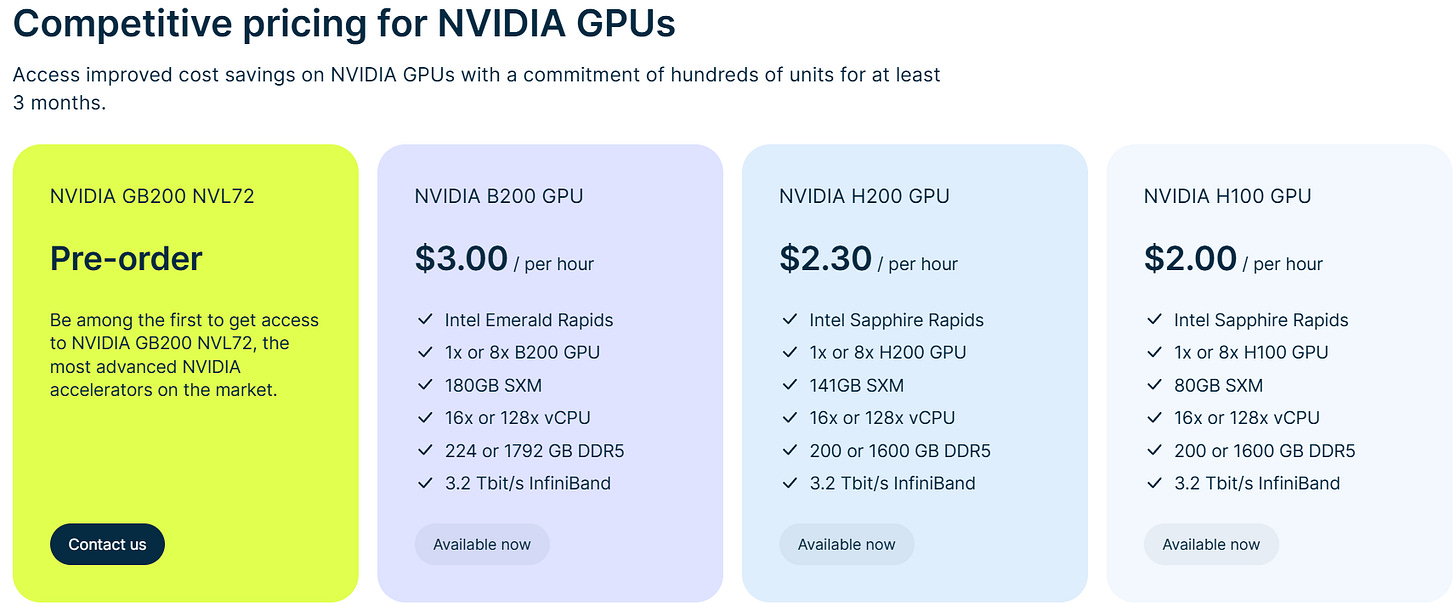

Focusing on Nebius, its business model is to rent GPUs based on clients' exact consumption rather than forcing fixed-duration contracts or minimum compute usage. This flexibility increases demand but also business risk, as fixed contracts provide more stability. Users can also plan GPU usage or rent for a set period, so it isn't only per-hour, first-come-first-served. Nebius offers a wide range of options but chose to build its offer around a very flexible model.

Users come, consume, pay per hour, and leave.

Nebius offers two platforms: AI Clouds and AI Studio.

AI Clouds rents GPU usage for training and inference. Nebius’ teams install, configure and maintain clusters tailored to clients’ needs, with pricing varying on customization. Clients can fine-tune GPU usage or prioritize workflows, etc. More customization leads to higher hourly rates. Everything is managed via APIs, allowing clients to rent GPUs without direct interaction with Nebius’ teams.

AI Studio works similarly, with API-based configuration and usage-based pricing depending on usage and customization, but for AI models (inference usage) rather than GPUs. It offers a broad catalog of open-source AI models, with different customizations based on performance and use case (e.g., text-to-text or text-to-image). As with its GPU rental service, Nebius provides a wide range of advanced models to serve diverse clients. You can find the list and prices here.

https://nebius.com/prices-ai-studio

In both cases, clients can reserve compute with specific parameters for a set period, even on a pay-per-hour basis.

Here's an example of AI Studio's interface, with fine-tuning commands on the left and the configuration panel in the center (this is the AI Studio playground). These two services form the largest portion of Nebius' business.

But they go a bit further for some clients.

Personalized configuration. As we will see just after, Nebius’ targets are enterprises, large clients with very specific needs - for both training and inference, requiring very specific configurations for long term partnerships, with reserved compute. This will be a more expensive service for more durable partnerships with enterprises running their commercialized service on Nebius’ platforms.

Customer Base.

Now that we know what Nebius does, let’s examine who they serve. Nebius targets three client types, with enterprises as their ultimate goal, aligning with our core belief that AI will create economic value and real-world services.

First, AI startups. The natural starting point: fast, risk-tolerant and eager to test new solutions. This gives Nebius real users testing its releases while building relationships with potential multibillion-dollar companies with massive compute needs. Even if those companies don't scale, they can vouch for Nebius' service quality. As Roman said:

Everyone knows everyone.

Second, Hyperscalers. As we’ve seen, even hyperscalers lack compute, particularly specialized compute, so they outsource some demand to neoclouds. This isn’t ideal for Nebius as it doesn’t build long-term relationships or showcase their capabilities, but it’s still business.

Third, enterprises. The goldmine of compute demand: long-term users with clear AI applications but no infrastructure to run models internally (unlike Meta, for example). Enterprises rely on Nebius' inference capabilities to commercialize their services, which makes them ideal long-term partners with clear and stable needs & consumption.

This is the game for the next five to ten years for us.

Nebius has already partnered with Shopify to run inference with Qwen's models for personalized recommendations and buyer-seller matching, which require AI to understand both customers and merchants. More details here, if you're interested:

https://shopify.engineering/machine-learning-at-shopify

Nebius isn’t Shopify’s only compute provider, but success & competitive pricing with such partners could strengthen the partnership or lead to recommendations. Once again.

Everyone knows everyone.

We’ve covered a lot, but you still don’t know why Nebius is the best neocloud. Let’s explore that now.

Why Nebius?

In a few words, with details to follow.

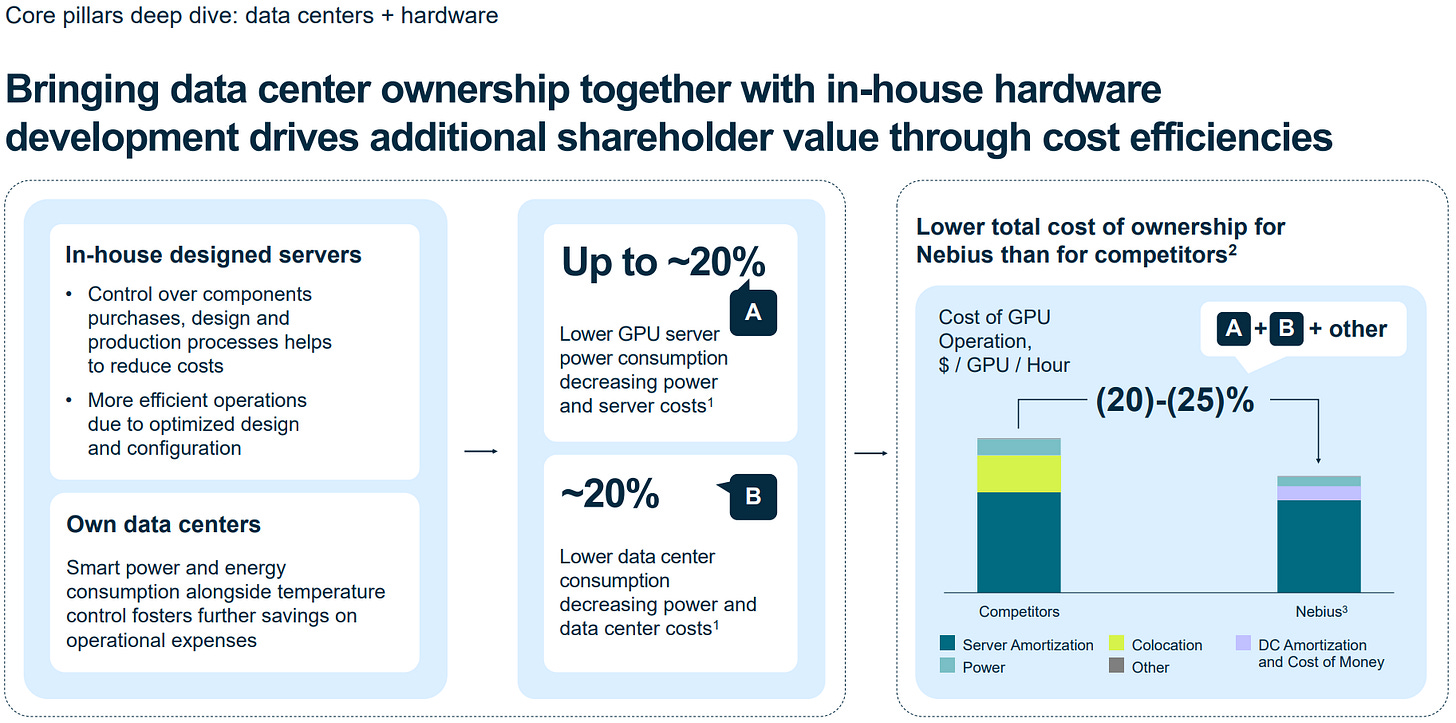

Nebius offers the best price-to-quality ratio thanks to its vertical integration of hardware and software and its focus on efficient energy sources. In an industry where a second of delay can cost millions, matching competitors' performance at equal or lower prices attracts demand.
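To give a sense of scale for "a second of delay can cost millions", here is a hypothetical calculation. Every input is an assumption I chose for illustration: 100M requests per day, one extra GPU-second per request, and $2.00 per GPU-hour. None of these are Nebius or customer data. The calculation also assumes each request holds a full GPU for that extra second with no batching, which overstates the cost.

```python
# Hypothetical illustration: what one extra GPU-second per request costs at scale.
# All inputs are assumptions, not Nebius or customer data.


def extra_cost_per_year(requests_per_day: float, extra_gpu_seconds: float,
                        gpu_hour_price: float) -> float:
    """Annual cost of the additional GPU time, assuming each request holds
    one full GPU for the extra time (no batching, which overstates the cost)."""
    price_per_gpu_second = gpu_hour_price / 3600
    daily = requests_per_day * extra_gpu_seconds * price_per_gpu_second
    return daily * 365


cost = extra_cost_per_year(requests_per_day=100_000_000,
                           extra_gpu_seconds=1.0,
                           gpu_hour_price=2.00)
print(f"${cost:,.0f} per year")  # $20,277,778 per year
```

Under these assumptions, one extra second per request costs about $20M a year.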

Some companies may stick with hyperscalers for convenience, even at slightly higher prices, and the current supply constraints give Nebius an opportunity to build relationships and satisfy customers. It's impossible to know how long this will last, but if Nebius continues to execute and provide above-average service, it'll become a serious long-term player in the compute market.

Now let’s see what makes Nebius better.

Datacenter, Energy & Hardware.

Let’s go back to Mäntsälä, Finland, to illustrate Nebius’ optimization.

This datacenter leverages its geography to optimize costs. It uses outside ambient air for cooling, which reduces cooling needs, and transfers the GPUs' waste heat to the city, which uses it to warm households. An optimized and virtuous cycle. This obviously can't be replicated in every geography, but it's the kind of engineering and partnership Nebius looks for in its datacenters.

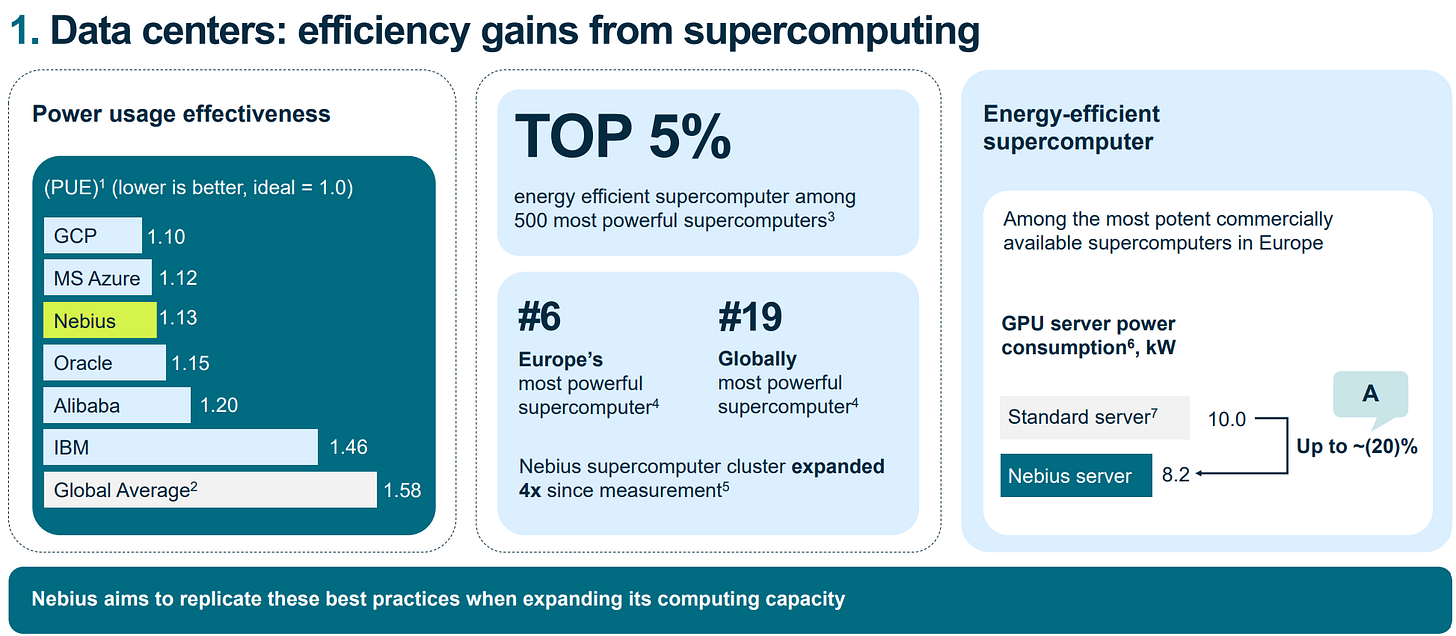

This isn't Nebius' only strength. Its legacy Yandex engineers excel at optimization, reducing hardware energy consumption & costs through datacenter design, tool-less hardware configurations and custom-built servers, which result in up to 20% better energy efficiency than off-the-shelf hardware.

Combined with optimized datacenters, this allows Nebius to lower costs without sacrificing service quality.
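A rough way to see how efficient hardware and an efficient site compound is to compare annual electricity bills for the same workload. This is a sketch, and every number in it is hypothetical: the 10 MW IT load, the $80/MWh price, and the PUE (power usage effectiveness) of 1.4 for a conventional site versus 1.1 for a free-air-cooled one. I read the "up to 20%" hardware gain as 20% less energy for the same work, which is my own interpretation.

```python
# Hypothetical annual electricity bill for the same workload, comparing
# off-the-shelf hardware in a conventionally cooled site with more efficient
# hardware in a free-air-cooled site. Every number is an assumption.

HOURS_PER_YEAR = 8760


def annual_energy_cost(it_load_mw: float, pue: float, price_per_mwh: float) -> float:
    """Total facility energy cost: IT load scaled by PUE (power usage effectiveness)."""
    return it_load_mw * pue * HOURS_PER_YEAR * price_per_mwh


PRICE = 80.0        # assumption: $ per MWh
BASE_IT_MW = 10.0   # assumption: IT load with off-the-shelf servers
HW_SAVING = 0.20    # "up to 20%" read as 20% less energy for the same work

baseline = annual_energy_cost(BASE_IT_MW, pue=1.4, price_per_mwh=PRICE)
optimized = annual_energy_cost(BASE_IT_MW * (1 - HW_SAVING), pue=1.1, price_per_mwh=PRICE)
print(f"baseline ${baseline:,.0f}, optimized ${optimized:,.0f}, "
      f"saving {1 - optimized / baseline:.0%}")
```

Under these assumptions, the bill falls from about $9.8M to about $6.2M, roughly 37% lower. The two effects multiply rather than add, which is the point of combining optimized hardware with optimized sites.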

As Nebius expands geographically, it won't be able to replicate these datacenter optimizations everywhere, but its hardware optimizations will travel with it, allowing Nebius to offer competitive service and pricing.

Currently, Nebius has 220 MW of connected or usable power and plans to reach 1 GW of contracted power by the end of 2026 across Europe, the U.S. and Israel, with potential expansion into Asia, a rapidly growing region in terms of tech, if demand justifies it.

We follow the customers, everything we build will be justified by demand.

A quick note on the difference between connected and contracted power. Contracted power means energy and land are secured, but the infrastructure (datacenters & GPUs) may not be fully built yet. Nebius will be ready to deploy if demand follows.

Securing energy sources is critical for the reasons shared above: there isn't enough built infrastructure at the moment, and it takes time to build. With contracts already in place, Nebius locks in those energy sources and will only invest in hardware if demand follows.

Not all its datacenters are owned outright: Paris, the U.K., Missouri and Iceland are colocation sites. All use Nebius' optimized hardware, and some also benefit from optimized energy sources, like Iceland's geothermal-powered datacenter or the New Jersey datacenter, co-owned with DataOne.

At the core of the facility is an innovative approach to power generation. We’ll leverage behind-the-meter electricity and advanced energy technology to maximize sustainability while simultaneously strengthening operational reliability — exactly what AI innovators need for their workloads.

Nvidia’s Partnership.

Nvidia joined Nebius in 2024 through a funding round, investing in the company and granting it priority access to its hardware. This was the most important part of my earlier bull case: Nebius would be among the first to offer Blackwell, attracting demand that other compute providers cannot meet until supply catches up, which is still not the case as of August 2025.

This partnership was the deciding factor for many investors and a massive tailwind for the company, which relies entirely on Nvidia (with no plans to include AMD's chips) and will continue to get early access to its newest hardware.

Software.

Great service today requires optimized hardware and software, as leading hardware companies have shown these last years. Nebius’ AI Clouds and AI Studio platforms offer intuitive, self-service interfaces with APIs for GPU and model customization.

Nebius Studio further simplifies application development for model users and app builders by letting developers experiment with different parameters to find the best setup for their models.

Nebius' team is focused on improving its services by upgrading models, adding fine-tuning options, onboarding new models, etc.

So… Why Nebius?

In brief, Nebius has optimized hardware in optimized datacenters and offers user-friendly, customizable software, with above-average benchmark results at competitive prices. It owns the full technology stack for training and inference as a service, which is pretty rare among neoclouds and hyperscalers, who often rely on off-the-shelf components for hardware, datacenters or software.

This vertical integration enables Nebius to offer the best price-to-quality ratio in the market and could set them apart for years as catching up will take time for competition.

What we don't want is to be no name commoditized data center provider. We prefer not to just provide capacity to them but bring them as a managed service on top of our platform.

Here's an example of pricing for Nvidia H100 hourly usage at the time of writing, without reservation or commitment, since prices usually drop with those options. I use these parameters to compare other providers with Nebius' flexible service.

Data from Grok.

The data is clear in terms of pricing. As for Nebius' compute quality, it usually matches or exceeds other neoclouds & hyperscalers across most metrics, often ranking highest in peak capacity without specific model requirements. You can explore and compare with other providers here.

https://artificialanalysis.ai/providers/nebius

We've now covered Nebius' core business, but there is more to say about Nebius as a company, as it has two subsidiaries, Avride and TripleTen, and owns stakes in Toloka and ClickHouse. We need to talk about those.

Subsidiaries & Equity.

Let’s start with the subsidiaries.

Avride.

Avride focuses on autonomous vehicles and their applications, already generating revenue at a small but rapidly growing scale.

Mobility. Avride develops autonomous driving systems equipped with 5 lidars, 4 radars, 11 cameras and a proprietary AI model, for urban mobility, similar to Google's Waymo.

Avride already has a partnership with Hyundai to integrate its system into Hyundai's cars and a multi-year partnership with Uber for distribution. Testing is ongoing in Austin as of August 2025, with services planned for Austin and Dallas in the coming months, without specific dates.

I continue to believe Uber is an overlooked company and that distribution matters. But that's another subject.

Delivery. Avride developed some cute autonomous robots to deliver food, the part of its business already generating revenue, with robots deployed in different geographies.

In neighborhoods in Austin, New Jersey and Dallas via Uber Eats.

In a few quarters in Tokyo via Rakuten.

Testing nationwide in South Korea.

Ohio State University.

For some numbers, those robots completed 80,000+ food deliveries at Ohio State University, with 112 robots deployed for 18 restaurants during the first semester of 2025.

In total, the fleet operates for 14 hours a day. Fridays tend to be the busiest. Our data shows a noticeable spike in orders at the end of the week. As a result, Fridays often set new delivery records - the latest being 1,389 orders in a single day.
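The campus figures quoted above translate into a few simple per-unit ratios: 80,000+ deliveries, 112 robots, 18 restaurants, a record day of 1,389 orders, and a 14-hour operating day. No new data is introduced here. Because 80,000 is a floor, the semester ratios are lower bounds.

```python
# Simple ratios from the campus delivery figures quoted in the text.
# 80,000 is a floor ("80,000+"), so the semester ratios are lower bounds.

deliveries = 80_000
robots = 112
restaurants = 18
record_day_orders = 1_389
hours_per_day = 14

per_robot = deliveries / robots                            # ≈ 714 deliveries per robot
per_restaurant = deliveries / restaurants                  # ≈ 4,444 deliveries per restaurant
record_per_robot = record_day_orders / robots              # ≈ 12.4 orders per robot that day
record_per_robot_hour = record_per_robot / hours_per_day   # ≈ 0.9 orders per robot-hour

print(f"{per_robot:.0f} per robot, {per_restaurant:.0f} per restaurant, "
      f"{record_per_robot:.1f} per robot on the record day "
      f"({record_per_robot_hour:.2f} per robot-hour)")
```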

This gives an idea of the business' potential, and Avride is already planning to expand to more geographies.

Here’s what the robots look like.

Pretty cool.

Yandex started working on autonomous driving technology in 2017, which explains how quickly Nebius ships products here. Another unfair advantage.

TripleTen.

TripleTen is an online education platform specializing in new technologies: Software Engineering, Data Science, QA Engineering, BI Analytics, AI, Cybersecurity... Courses are organized as part-time bootcamps (4 to 10 months), self-paced and beginner-friendly, aimed at those who want to change careers or learn new skills.

Courses include hands-on projects, tutoring and coaching, with an 85% employment rate within six months. Its business model relies on tuition fees.

Toloka.

Nebius controlled Toloka until a few weeks ago, when Bezos Expeditions led a $72M funding round, after which Nebius kept a majority economic stake but relinquished voting control.

Toloka provides data for AI development. AI models are trained on massive datasets that not every company can gather itself, so companies rely on third parties, with strict oversight of quality, as a model's competence depends on it.

If Avride trains its autonomous driving system on Mario Kart videos... you can expect some damage.

Toloka collects data from many sources and offers tools to process, refine and annotate it, and to create datasets for training, fine-tuning and evaluating any kind of model for any use case: LLMs, image generation, physical AI. It already has a solid client base, with Amazon, Microsoft, Anthropic, Shopify, etc.

Nebius won't record revenue from it but will benefit from its growing valuation, and management has already confirmed it could be used to fund Nebius' core business. Another potential source of funding, once again thanks to an unfair head start.

ClickHouse.

Nebius owns a 28% stake in ClickHouse. Like for Toloka, management confirmed they could use this equity to fund the core business.

ClickHouse's business is real-time analytics and data warehousing. It provides high-performance processing of massive datasets, allowing fast queries through a cloud-based service.

ClickHouse was built to offer real-time processing warehouse to enable interactive data visualizations and real-time analytics. To complement traditional warehouses' offline capabilities, ClickHouse optimizes around low latency and high interactivity across complex joins and large data aggregations.

In a world where artificial intelligence requires petabytes of data to be processed rapidly, any tool that gives engineering teams fast access to it can save thousands, even millions, of dollars per day by accelerating usually slow processes.

The company is valued at $6.35B at the time of writing, putting Nebius' 28% stake at about $1.78B.

Financials.

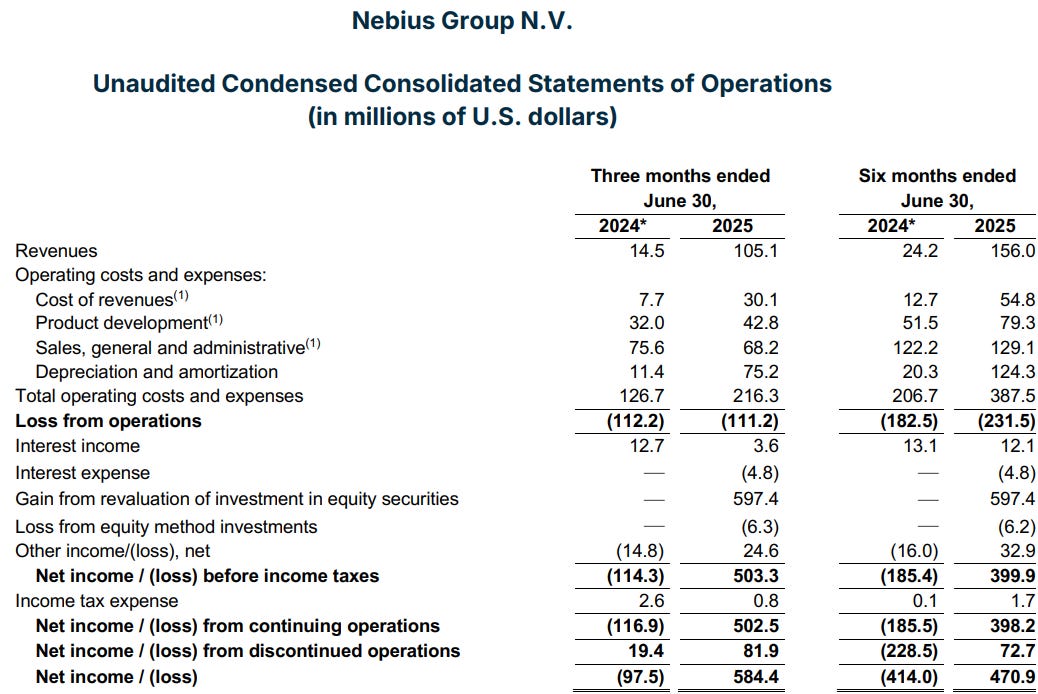

Nebius is a growth story, early in its journey after its separation from Yandex in 2024, so YoY comparisons are irrelevant, as they compare different business models. There isn't much to say in terms of financials.

The important data to focus on is sequential growth, with revenue growing from $51M in Q1-25 to $105.1M in Q2-25, an increase of roughly 106%, driven by selling 100% of its compute capacity during the quarter. Once again, a supply-constrained sector.
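The sequential growth figure can be checked directly. The same numbers also give a naive annualized run rate to set against the $1B ARR guidance discussed in the valuation section. Treating "run rate" as quarterly revenue × 4 is my own simplification, and Nebius' ARR definition may differ.

```python
# Sequential growth from the quarterly figures quoted in the text, plus a
# naive annualized run rate. "Run rate = quarterly revenue x 4" is an
# assumption; Nebius' own ARR definition may differ.

q1_revenue_m = 51.0      # Q1-25 revenue, $M (figure quoted in the text)
q2_revenue_m = 105.1     # Q2-25 revenue, $M
arr_target_m = 1_000.0   # end-of-2025 ARR guidance, $M

qoq_growth = q2_revenue_m / q1_revenue_m - 1   # quarter-over-quarter growth
run_rate_m = q2_revenue_m * 4                  # naive annualized run rate
gap_multiple = arr_target_m / run_rate_m       # scale-up still needed to hit guidance

print(f"QoQ growth: {qoq_growth:.0%}")                   # 106%
print(f"Q2 run rate: ${run_rate_m:,.0f}M")               # $420M
print(f"Needed to reach $1B ARR: {gap_multiple:.1f}x")   # 2.4x
```

On this crude measure, reaching the guidance would require the annualized run rate to grow another ~2.4x between Q2 and year-end. That is why new capacity coming online matters so much in the second half.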

Short term, only growth matters. The rest is typical of a young company: high sales and G&A expenses to build awareness, and losses once gains on equity investments are excluded, which they should be, since those gains are unrealized.

Nebius plans to be EBITDA positive by the end of the year (for the last quarter, not the full year), which would be a significant achievement considering the investments made in the business.

A few words on depreciation and amortization. Nebius depreciates its GPUs over four years, which increases the pressure on its income statement. Management said demand for older GPU generations could outlast that schedule, pointing to current demand for A100 GPUs, which are already around five years old.
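To show why the schedule length matters, here is a straight-line sketch on a hypothetical $100M GPU purchase. It compares the four-year policy with a longer six-year schedule, which is hypothetical and not Nebius policy. The book-value check also illustrates the A100 point: a GPU still rented out in year five carries no depreciation under a four-year policy.

```python
# Straight-line depreciation on a hypothetical $100M GPU purchase, comparing
# a four-year policy with a hypothetical six-year schedule.


def straight_line_schedule(cost: float, years: int, salvage: float = 0.0) -> list[float]:
    """Annual depreciation charges; the same amount is expensed every year."""
    annual = (cost - salvage) / years
    return [annual] * years


def book_value(cost: float, years: int, age: int, salvage: float = 0.0) -> float:
    """Remaining carrying value after `age` full years (never below salvage)."""
    charged = sum(straight_line_schedule(cost, years, salvage)[:age])
    return max(cost - charged, salvage)


COST = 100_000_000  # hypothetical cluster cost

four = straight_line_schedule(COST, 4)
six = straight_line_schedule(COST, 6)
print(f"4-year policy: ${four[0]:,.0f} per year")   # $25,000,000
print(f"6-year policy: ${six[0]:,.0f} per year")    # $16,666,667
print(f"Book value after 5 years: 4-year ${book_value(COST, 4, 5):,.0f}, "
      f"6-year ${book_value(COST, 6, 5):,.0f}")
```

A shorter schedule front-loads the expense and depresses near-term earnings. Any revenue earned after year four then comes with no depreciation charge attached.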

One last word on Nebius' latest financing round: in June, it raised $1B in notes meant to fund datacenter expansion. The notes come in two tranches, 2% coupons maturing in 2029 and 3% coupons maturing in 2031, with conversion prices of around $51 and $64 respectively. This signaled investors' confidence in the stock at the time, as it traded at $36.
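The conversion prices imply how far the stock had to rise before conversion made sense for noteholders. This sketch uses the approximate $51 and $64 conversion prices and the $36 share price from the text. The even $500M / $500M split between tranches is my assumption, used only to estimate annual cash interest.

```python
# Conversion premiums implied by the convertible notes, using the approximate
# conversion prices and the ~$36 share price quoted in the text.
# The even $500M / $500M split between tranches is an assumption.

share_price = 36.0
tranches = {
    # name: (principal $M, coupon, conversion price $)
    "2029 notes": (500.0, 0.02, 51.0),
    "2031 notes": (500.0, 0.03, 64.0),
}

premiums = {}
total_interest = 0.0
for name, (principal, coupon, conversion) in tranches.items():
    premium = conversion / share_price - 1   # rise needed before converting beats holding cash
    interest = principal * coupon            # annual cash coupon, $M
    premiums[name] = premium
    total_interest += interest
    print(f"{name}: {premium:.0%} conversion premium, ${interest:.0f}M interest per year")

print(f"Total cash interest: ${total_interest:.0f}M per year")
```

Conversion premiums of roughly 42% and 78% over the $36 share price are what make the issue read as a vote of confidence.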

Valuation.

I usually don't share valuations in my investment theses, as data changes fast and I do quarterly valuations in my earnings reviews. If you're reading this after Q3-25, check my latest earnings review for an updated valuation.

At the time of writing, Nebius has a market capitalization of $16.5B. It owns equity in Toloka & ClickHouse, and while Toloka's valuation is not disclosed, the two stakes combined should be worth north of $2B, probably a bit more, as both companies are growing fast in a high-demand sector.

Guidance for Nebius' core business is $1B of ARR by the end of 2025. FY26 revenue should be higher than that, as the ARR exiting 2025 will be added to by new capacity and growing demand during the year.

Subtracting those equity stakes leaves a valuation of about $14B. Without netting out cash, as most of it will be invested, that works out to roughly 14x FY26 sales, using $1B as a floor for FY26 revenue.

And that excludes growth beyond $1B of ARR, excludes Avride, which already generates revenue and will keep expanding and scaling around the world, and excludes further growth in the value of the Toloka and ClickHouse stakes. All this for a company with no debt other than its convertible notes, payable in 2029 at the earliest, and a path to profitability by then.

I do not believe 14x sales is expensive for a company growing at triple digits, and probably at high double digits after 2026, operating in a cutting-edge business where demand is growing rapidly and likely will for a long time, plus its equity stakes...
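The valuation steps above can be written out explicitly: market cap minus equity stakes, cash ignored, divided by FY26 sales. This is a sketch of that arithmetic only. $1B is used as a floor for FY26 sales. The stakes are shown as a $2.0B to $2.5B range because Toloka's valuation is undisclosed, and that range is my reading of "north of $2B".

```python
# Rough sales multiple for the core business, following the steps in the text:
# market cap minus equity stakes, cash ignored, divided by FY26 sales.
# $1B of FY26 sales is used as a floor; the stake range is an assumption
# because Toloka's valuation is undisclosed.

MARKET_CAP_B = 16.5
CLICKHOUSE_STAKE_B = 0.28 * 6.35   # 28% of a $6.35B valuation ≈ $1.78B
FY26_SALES_FLOOR_B = 1.0


def core_multiple(market_cap: float, stakes: float, sales: float) -> float:
    """Implied price-to-sales multiple of the core business after removing stakes."""
    return (market_cap - stakes) / sales


multiples = {}
for stakes in (2.0, 2.5):   # "north of $2B" for ClickHouse + Toloka combined
    multiples[stakes] = core_multiple(MARKET_CAP_B, stakes, FY26_SALES_FLOOR_B)
    print(f"stakes ${stakes:.1f}B -> {multiples[stakes]:.1f}x FY26 sales")
print(f"ClickHouse stake alone: ${CLICKHOUSE_STAKE_B:.2f}B")
```

This gives 14.0x to 14.5x on the $1B floor. Any FY26 revenue above $1B lowers the multiple proportionally.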

Conclusion.

This should give you the keys to understand Nebius from A to Z: a business suited to its time, as demand keeps ramping up and cannot be met by current supply, and a competitive service against both hyperscalers & neoclouds thanks to an unfair head start and vertical integration pushed to its limits, giving the company equal capacity & quality at comparable, often lower, prices.

In my opinion, Nebius has a lot going for it, and I consider it one of the best AI hardware plays on the market at the time of writing. On competition: this write-up is about how Nebius differentiates itself and how that differentiation could set it up for long-term success in the sector. We'll need to monitor global compute demand and competition, but as long as the team executes, competitors should not catch up.

The biggest threat to Nebius' business would be a ceiling in AI compute demand, or an industry-wide shutdown if no company finds a way to monetize AI. Or such an improvement in compute optimization that overall demand could be met by a few players, probably hyperscalers. As I shared at the beginning, you have to believe that AI is real and that optimization is the key to success in the sector.

And as I've shared throughout this write-up, this is what I believe, and I believe Nebius could be the differentiated neocloud. I am really bullish on the company and own both shares and call options at the time of writing.

Great write-up, thanks! However, I think one important piece of the puzzle is missing - unit economics. How much CAPEX NBIS has to deploy to construct 1 MW of GPU, what is its useful life and how it compares to avg revenue per MW? What is the utilization required to break-even assuming straight line depreciation?

The data is pretty tough to put together, as it depends on how you account for Yandex's investments. We don't know how much the company put in; we only know how much Nebius has invested since the separation, and we'll have clearer data over time.

For now, all we can do is make assumptions, as Nebius mostly runs its service on its Finnish datacenter, which Yandex built.
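The comment's question about break-even utilization has a simple shape even without reliable inputs. This sketch uses purely hypothetical placeholders, none of them Nebius data: $40,000 all-in capex per GPU, a four-year straight-line life with no salvage, $4,000 per GPU per year of operating cost, $2.00 per GPU-hour, and 700 GPUs per MW. It shows the calculation the comment asks for, not an answer to it.

```python
# Break-even utilization for one rented GPU under straight-line depreciation.
# Every input is a hypothetical placeholder, not Nebius data.

HOURS_PER_YEAR = 8760


def break_even_utilization(capex_per_gpu: float, useful_life_years: float,
                           opex_per_gpu_year: float, price_per_gpu_hour: float) -> float:
    """Share of hours a GPU must be billed to cover depreciation plus operating costs."""
    annual_depreciation = capex_per_gpu / useful_life_years   # straight line, no salvage
    annual_cost = annual_depreciation + opex_per_gpu_year
    max_revenue = price_per_gpu_hour * HOURS_PER_YEAR         # billed 100% of the time
    return annual_cost / max_revenue


def revenue_per_mw(gpus_per_mw: float, price_per_gpu_hour: float, utilization: float) -> float:
    """Annual revenue from one MW of capacity at a given utilization."""
    return gpus_per_mw * price_per_gpu_hour * HOURS_PER_YEAR * utilization


u = break_even_utilization(capex_per_gpu=40_000, useful_life_years=4,
                           opex_per_gpu_year=4_000, price_per_gpu_hour=2.00)
print(f"Break-even utilization: {u:.0%}")
print(f"Revenue per MW at 90% utilization: ${revenue_per_mw(700, 2.00, 0.9):,.0f}")
```

With these placeholders, break-even sits around 80% utilization. Stretching the useful life to six years drops it to about 61%, which is why the depreciation policy discussed above weighs so heavily on the economics.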