The Perfect AI Infrastructure Portfolio

Understanding the AI Chain and Its Next-Decade Winners

This is one of the write-ups I’m most excited about. As AI continues to shape our world, and will for decades to come, learning about it, the companies involved, their products, and how they connect is fascinating. Being part of this revolution, even just as an investor, is really exciting.

To be blunt: this write-up won’t reveal any secret investing strategy to turn you into a billionaire overnight. It’s not meant to surprise you. Its purpose is to map out the AI chain and explain why its current leaders are the ones we should bet on for the long term. Why?

Because winners keep winning, and that’s what you want to do too.

There’s no reason to overcomplicate things. Markets are designed to help winners win more. Our job is to take advantage of that & occasionally to find the next big winner, a much harder feat.

That being said, everyone can tell you Nvidia is a wonderful company that you should own. I’ll do that too, but I’ll also go further. I will give you the keys to understand why Nvidia is a winner in the simplest possible way, so you can build your own convictions. This is what I do with my investment thesis write-ups.

Today is about the entire AI infrastructure chain: walking through it & highlighting the key companies within it, the ones I consider tomorrow’s winners. It’s also about sharing my strategy to make money from them, because most investors lose money even when they invest in winners. Understanding a company’s fundamentals isn’t enough.

Investing is not about brains but about guts and planning. You don’t need to be the smartest kid in the room; you need a system, and you need to follow it in green times & red times. This is where things get hard.

That’s the focus of this Substack: above-average returns. You won’t see me simply write that Company X or Y is “great”; everyone knows that. First, I write about the why, without any regard for how to invest. Then, in a second step, I write about the how, with a focus on returns and how to maximize them. Because the why and the how are two completely different things.

Conviction comes from knowledge, and your system will turn those convictions into above-average returns. This write-up is meant to focus on both the why & the how.

The Perfect AI Infrastructure Portfolio.

I’ll start with the how. You’ll find everything you need for the why in the sections that follow.

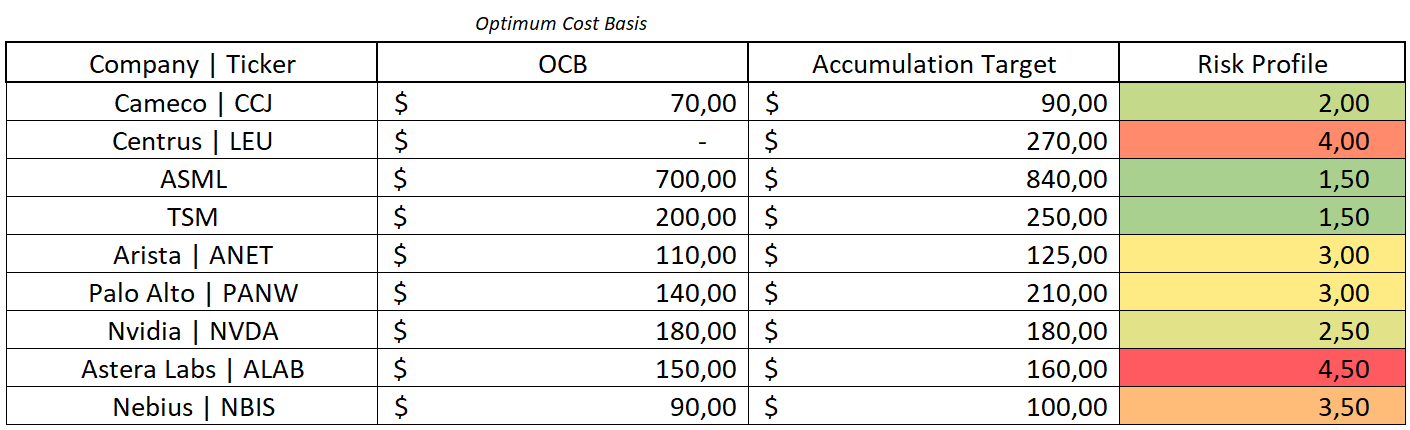

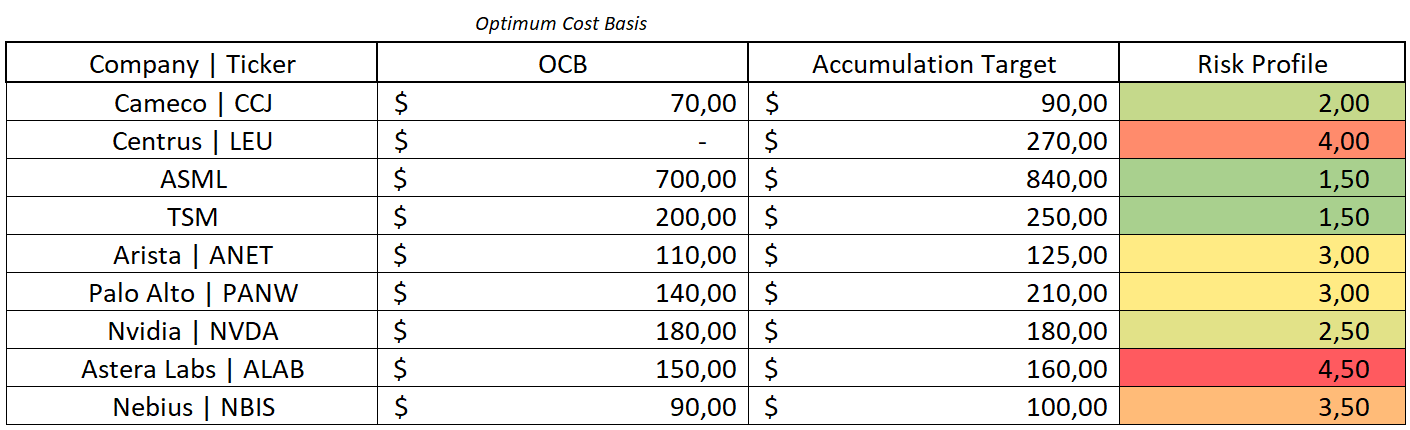

Here are the prices at which I would consider building a position in each of the assets presented below, along with a risk score that highlights the uncertainty around each thesis. The higher the risk, the higher the potential reward.

These numbers are based on my own view of the market, the companies & price action. You might have different ones, and that’s fine. I would hold all of these names, bought at those prices, for the next decade without worry: AI is here to stay, and these are the companies powering this revolution.

Energy | The Beginning of Everything.

Nothing happens without it. It’s what powers every industry, device, and datacenter. In our case, it takes the form of electricity, but producing electricity requires a fuel of its own.

AI is a revolution, and its electricity consumption surpasses anything we’ve seen in the tech world. The rapid expansion of datacenters over the last few years has already tightened supply and pushed prices slightly higher. Additional capacity will be needed to meet the massive and growing demand from AI infrastructure.

The real debate is which source of energy will dominate. For many, the answer is nuclear: small reactors whose sole purpose is to feed datacenters. Some will be owned directly by tech giants; others will be built by partners under long-term contracts.

This seems like the next logical step. Alternatives are either impractical or significantly more expensive. Having full control of your own energy source is the best approach, even if it comes with other challenges.

For me, the best way to play this vertical is uranium.

It’s easy to imagine, a few years from now, most datacenters equipped with their own private, stable, and reliable energy source: small nuclear reactors sitting right next to them. And those will consume tons of uranium every year to produce the required electricity.

Within those datacenters, a lot will happen.

Semiconductors | The Tech Foundation.

But before going inside the datacenter, we need to talk about the foundation of modern technology: semiconductors.

These tiny parts are embedded in every piece of hardware and control how current flows, or doesn’t. Just like with energy, without semiconductors there is no datacenter & no technology.

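Two companies reign supreme in this space: ASML, which builds the lithography machines needed to print the most advanced chips, and TSMC, which manufactures them. Together, they form the backbone of global chip manufacturing and enable the rest of the AI hardware ecosystem to exist.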

In this sector, efficiency wins; and efficiency always comes from the best. Those two are the best in their respective fields.

Providing energy is step one. Providing semiconductors is step two. Filling datacenters with hardware powered by those semiconductors is step three.

Networking | Connecting the Infrastructure.

The first layer inside a datacenter is networking: the hardware that connects everything together. Every component within a datacenter needs to communicate seamlessly and at high speed, and that’s what networking hardware ensures.

Connecting devices might sound simple, but large-scale infrastructures are far more complex. They require aggregators: hardware whose sole purpose is to interconnect everything efficiently.

These are called switches, and Arista Networks currently holds the efficiency crown for AI workloads.

To be fully transparent, we learned yesterday that Meta, one of Arista’s largest clients, has started purchasing Nvidia’s networking devices. This could represent a significant threat to Arista’s business if it became a long-term trend, although this portfolio is designed to hold both.

This isn’t a thesis breaker today, but it’s a variable to monitor if you plan to buy/hold Arista. There is a risk.

Security | Protecting the Infrastructure.

Once everything is connected, the next challenge is security.

It would be a disaster to have terabytes of training data or sensitive user information stolen, applications corrupted, or services disrupted. Just as you lock your house and install alarms, datacenter networks must be protected, even more so in the AI era.

There are countless cybersecurity providers, but one of the most complete and rapidly growing is Palo Alto Networks, offering everything from firewalls to advanced AI model and application security.

Once again: in this sector, efficiency wins, and a provider that offers multiple interconnected security layers, centralised within a single system, earns a lot of points.

Compute | The Heart of AI.

We now have a functioning and secure datacenter, but it isn’t AI-focused yet. AI requires compute, and compute means GPUs: highly specialized hardware built from unique semiconductors to process massive amounts of data in parallel.

No surprise here: Nvidia is the undisputed leader. Its GPUs are present in every AI datacenter worldwide and form the backbone of modern AI.

There are other GPU providers, but Nvidia’s infrastructure is ahead of the competition in terms of… you guessed it: efficiency, once again.

But even the best can be improved. Nvidia can’t focus on every micro-component of its products (it has tried), so it partners with other companies to enhance specific aspects of performance.

That’s where Astera Labs comes in, providing specialized connectivity chips integrated into GPU servers to improve control, performance & efficiency, pushing performance even higher.

Astera’s semiconductors are about improving on excellence: earning the last few points of efficiency that separate great from perfect. Many other companies are chasing the same goal, and Astera has received a lot of praise for its live demos, but the company is still very young in a rapidly evolving sector.

Hyperscalers and Neoclouds | Leveraging Infrastructure.

All these components together form the most advanced AI datacenter possible today, with the most efficient compute. The sector evolves fast, and the landscape could look very different in a few years, but right now, these names are the strongest in their verticals.

Once datacenters are built, they must be operated. That’s where hyperscalers and neocloud providers come in: companies that turn hardware into usable compute.

You already know the giants (Google, Microsoft, Amazon), but one emerging name worth mentioning is Nebius, which followed all the steps above and built what may be the most efficient datacenters of its size, with above-benchmark performance at very competitive prices, capable of competing with the hyperscalers.

Competition is fierce, and this sector requires a lot of risk-taking. It won’t be a winner-takes-all market, and we know the hyperscalers will stay. But investing is also about occasionally finding the next big winner, a much harder feat.

Bonus | From Compute to Services.

With datacenters built and running, the final layer is using that compute to create products and services consumers pay for.

If you’ve followed me for a while, you know I’m bullish on Palantir, Meta, Duolingo, Tesla, Adobe, and a few more. You can find content about each on my Substack. But today’s goal is to stay focused on the infrastructure: the companies building the physical foundation of AI for the next decade.

Final Thoughts | Building Coherence.

Investment portfolios need coherence to maximize returns. That comes from buying companies with synergy, players that benefit from each other’s growth. Betting on the AI infrastructure build-out seems like a strong long-term play, one the market has been on for a few years now, as all of these names have delivered good returns. That doesn’t mean they won’t keep doing so; winners keep on winning.

The more complex question is how we generate returns from them, starting today. My answer remains the same: follow a system. Mine is to focus on strong companies with positive narratives at attractive prices. My convictions are built, and I am now waiting for the opportunities to come. They will.

Once again, here are my targets.

This reflects my view for the next decade & a clear focus on the leader of each vertical that makes AI possible, from uranium to semiconductors, networking, security, compute, and hyperscalers.

AI is just getting started. It might not look like it from the market, but this is only the beginning.
